TL;DR:

- GenAI models like ChatGPT, Google Bard, and GPT have transformed AI interaction by generating diverse content and impacting communication and problem-solving.

- ChatGPT’s widespread adoption reflects GenAI’s integration into daily digital life, making AI more accessible and intuitive for a broader audience.

- GenAI models have evolved significantly, with each iteration showcasing improvements in language understanding and content generation.

- The sophistication of GenAI models raises ethical concerns, privacy risks, and potential vulnerabilities for malicious exploitation.

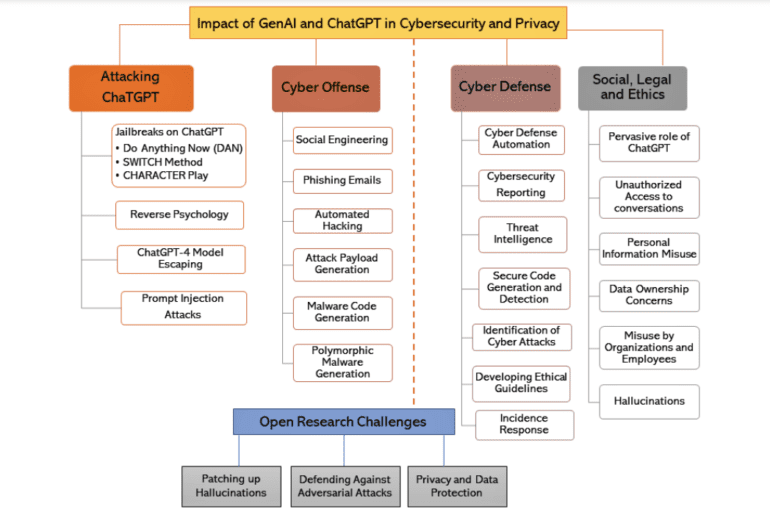

- Recent research examines ChatGPT’s cybersecurity and privacy implications, revealing vulnerabilities, risks, and misuse potential.

- Defensive strategies leveraging GenAI include cyber defense automation, threat intelligence, secure code generation, and ethical guidelines.

- ChatGPT can aid in cyber defense through automation, reporting, threat intelligence, secure coding, attack identification, ethical guidelines, technology integration, incident response, and malware detection.

- Ethical, legal, and social challenges persist in the use of GenAI models in cybersecurity, including biases, privacy breaches, and misuse risks.

- Responsible integration of GenAI models demands addressing biases, enhancing security, and safeguarding user data.

Main AI News:

Generative AI (GenAI) models, including renowned names like ChatGPT, Google Bard, and Microsoft’s GPT, have ushered in a new era of AI interaction. They have left an indelible mark on various domains by effortlessly creating diverse content, spanning text, images, and music. This transformative technology has not only impacted communication but has also presented novel problem-solving opportunities. The widespread adoption of ChatGPT, embraced by millions, signifies GenAI’s seamless integration into our daily digital lives, fundamentally altering our interactions with artificial intelligence. Its remarkable ability to comprehend and generate human-like conversations has democratized AI, making it more accessible and intuitive to a broader audience and reshaping perceptions along the way.

The evolution of GenAI models has been nothing short of spectacular, marked by significant milestones from the inception of GPT-1 to the latest iterations like GPT-4. Each iteration has exhibited substantial advancements in language comprehension, content generation, and the incorporation of multimodal capabilities. However, as GenAI matures, it brings forth a new set of challenges. The heightened sophistication of these models comes with pressing ethical concerns, privacy vulnerabilities, and potential exploits that malevolent actors may seize upon.

In light of these developments, a recent research paper has undertaken a comprehensive examination of GenAI, with a specific focus on ChatGPT, delving deep into the cybersecurity and privacy implications that it presents. This insightful study has uncovered vulnerabilities within ChatGPT that have the potential to compromise ethical boundaries and infringe upon user privacy, a concern that could be exploited by nefarious individuals. The paper elucidates the risks, including Jailbreaks, reverse psychology tactics, and prompt injection attacks, shedding light on the imminent threats associated with these powerful GenAI tools. Furthermore, it explores the avenues through which cyber offenders may misuse GenAI, such as for social engineering attacks, automated hacking endeavors, and the creation of malicious software. Additionally, the paper outlines defensive strategies leveraging GenAI, emphasizing the importance of cyber defense automation, threat intelligence, secure code generation, and ethical guidelines to bolster system defenses against potential cyberattacks.

The research team’s investigations have delved into various methodologies to manipulate ChatGPT, discussing jailbreaking techniques like DAN, SWITCH, and CHARACTER Play, all aimed at circumventing restrictions and ethical constraints. These techniques carry inherent risks, and if wielded by malicious actors, they could result in the generation of harmful content or security breaches. Furthermore, the paper paints a stark picture of potential scenarios where the unchecked capabilities of ChatGPT-4 could lead to breaches of internet restrictions. It dives into the realm of prompt injection attacks, uncovering vulnerabilities in language models like ChatGPT and illustrating examples of generating attack payloads, ransomware/malware code, and CPU-affecting viruses using ChatGPT. These investigations underscore the grave cybersecurity concerns, painting a vivid picture of the potential misuse of AI models like ChatGPT for nefarious purposes, including social engineering, phishing attacks, automated hacking, and the generation of polymorphic malware.

The research team’s exploration has also unveiled several ways in which ChatGPT can actively contribute to the field of cyber defense:

- Automation: ChatGPT aids Security Operations Center (SOC) analysts by analyzing incidents, generating comprehensive reports, and suggesting effective defense strategies.

- Reporting: It excels in creating user-friendly reports based on cybersecurity data, facilitating the identification of threats and risk assessment.

- Threat Intelligence: By processing vast datasets, ChatGPT identifies potential threats, assesses risks, and recommends mitigation strategies.

- Secure Coding: It assists in identifying security vulnerabilities during code reviews and advocates secure coding practices.

- Attack Identification: Through data analysis, ChatGPT describes attack patterns, contributing to a better understanding and prevention of cyberattacks.

- Ethical Guidelines: It synthesizes concise summaries of ethical frameworks for AI systems, ensuring ethical considerations in AI applications.

- Enhancing Technologies: ChatGPT seamlessly integrates with intrusion detection systems, augmenting threat detection capabilities.

- Incident Response: It offers immediate guidance and creates incident response playbooks for swift and effective responses to security incidents.

- Malware Detection: ChatGPT analyzes code patterns to identify potential malware, enhancing cybersecurity defenses.

These applications vividly illustrate how ChatGPT can significantly contribute across various cybersecurity domains, ranging from incident response to threat detection and ethical guideline creation.

Beyond their potential in threat detection, an examination of ChatGPT and similar language models in the realm of cybersecurity underscores the ethical, legal, and social challenges that accompany them, including biases, privacy breaches, and misuse risks. A comparison with Google’s Bard highlights differences in accessibility and data handling, while persistent challenges remain in addressing biases, fortifying security measures, and safeguarding user data. Despite these hurdles, these AI tools hold promise in log analysis and seamless integration with other technological solutions. Nevertheless, responsible integration demands the mitigation of biases, the strengthening of security measures, and the unwavering commitment to safeguarding user data, making them dependable assets in the cybersecurity landscape.

Conclusion:

The integration of GenAI models in cybersecurity presents promising opportunities for threat detection and defense automation. However, ethical and privacy concerns, along with the potential for misuse, require vigilant oversight and mitigation strategies. The market should focus on developing robust ethical frameworks, enhancing security measures, and ensuring data protection to fully harness the potential of GenAI in the cybersecurity landscape.