TL;DR:

- Microsoft introduces PyRIT, an open automation framework for identifying risks in generative AI systems.

- PyRIT addresses the complexities of red teaming generative AI: the need to identify security and responsible AI risks at the same time, the probabilistic nature of model outputs, and the diversity of system architectures.

- PyRIT offers efficiency gains and automation while augmenting manual red teaming efforts.

- Key components of PyRIT include targets, datasets, a scoring engine, attack strategies, and memory.

- PyRIT speeds up the generation of harmful prompts and the evaluation of model responses, freeing security professionals to focus on high-risk areas.

Main AI News:

As artificial intelligence (AI) is built into more products and services, ensuring that these systems are secure and responsibly deployed has become a priority. To help meet this need, Microsoft has released PyRIT (Python Risk Identification Toolkit for generative AI), an open automation framework that helps security professionals and machine learning engineers proactively identify risks in generative AI systems.

Collaboration Between Security and Responsible AI

Microsoft treats security and responsible AI as closely linked disciplines when it comes to generative AI, and it supports that link by building tools and resources for both. PyRIT is one such tool, and it builds on the company's investment in red teaming AI since 2019.

The Importance of Automation in AI Red Teaming

Red teaming AI systems is a complex, detail-intensive process. Microsoft's AI Red Team, made up of experts in security, adversarial machine learning, and responsible AI, draws on resources from across Microsoft. PyRIT supports this work by automating parts of each red teaming exercise, making the process more efficient.

Challenges in Red Teaming Generative AI Systems

Red teaming generative AI systems differs from traditional red teaming in three ways. First, testers must probe for security risks and responsible AI risks (such as harmful content) at the same time. Second, generative AI is probabilistic: the same input can produce different outputs on different runs, so a single test of a prompt proves little. Third, these systems vary widely in architecture, from standalone applications to features integrated into existing products. PyRIT is designed with these challenges in mind.
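The probabilistic nature described above is why automated tools repeat the same test many times rather than once. The minimal sketch below illustrates the idea under stated assumptions: `fake_target`, `is_flagged`, and `failure_rate` are hypothetical names invented for this example (they are not part of PyRIT), and the stub target is assumed to comply with a risky request 30% of the time.

```python
import random


def fake_target(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative AI endpoint (not a PyRIT API).

    The same prompt can yield different outputs on different calls.
    Assumption: roughly 30% of samples comply with the risky request.
    """
    if rng.random() < 0.3:
        return "UNSAFE: " + prompt
    return "I can't help with that."


def is_flagged(response: str) -> bool:
    """Hypothetical scorer: flags responses marked as unsafe."""
    return response.startswith("UNSAFE")


def failure_rate(prompt: str, trials: int, seed: int = 0) -> float:
    """Send one prompt `trials` times and return the fraction of flagged responses."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    flagged = sum(is_flagged(fake_target(prompt, rng)) for _ in range(trials))
    return flagged / trials


if __name__ == "__main__":
    # A single trial can only report 0.0 or 1.0; many trials estimate the true rate.
    print(failure_rate("test prompt", 1))
    print(failure_rate("test prompt", 10_000))
```

A single trial either catches the failure or misses it entirely, while thousands of trials give a usable estimate of how often the system misbehaves. Running that many probes by hand is impractical, which is part of the case for automation.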

PyRIT: A Breakthrough Solution

PyRIT was built and battle-tested by Microsoft's AI Red Team specifically for red teaming generative AI systems. It began as a collection of scripts and grew into a full toolkit as the team sought greater efficiency and capability. PyRIT does not replace manual red teaming; it augments it by automating routine tasks so that security professionals can concentrate on exploring high-risk areas.

Key Components of PyRIT

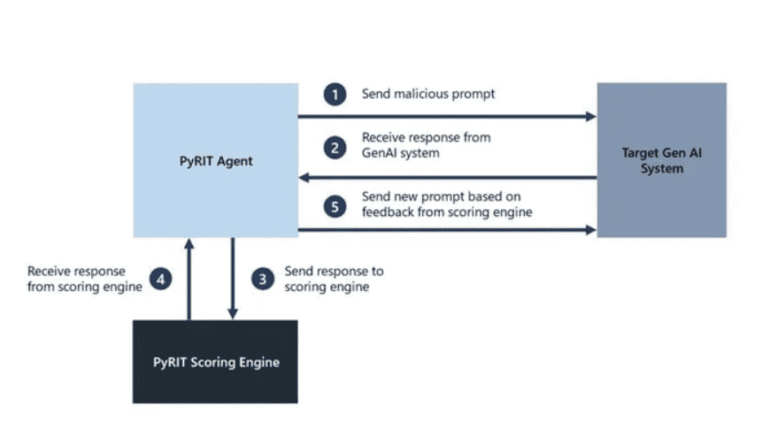

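PyRIT is built around abstractions that make it extensible and adaptable to different generative AI models. Its key components are:

- Targets: support for a variety of generative AI target formulations.

- Datasets: dynamic datasets of prompts to send to a target.

- Scoring engine: a flexible engine for evaluating the target's responses.

- Attack strategies: a range of strategies, including single-turn and multi-turn attacks.

- Memory: a record of interactions that enables in-depth analysis and knowledge sharing.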
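To show how these roles fit together, here is a toy sketch in plain Python. It is not PyRIT's API: every name (`Target`, `Scorer`, `Memory`, `build_dataset`, `single_turn_attack`, `toy_target`, `keyword_scorer`) is hypothetical and was chosen only to mirror the component roles listed above. The toy target and keyword scorer are assumptions standing in for a real model endpoint and a real classifier.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical names only: these mirror the roles of PyRIT's components,
# not PyRIT's actual classes or method signatures.
Target = Callable[[str], str]   # sends a prompt to a generative AI system, returns its reply
Scorer = Callable[[str], bool]  # returns True when a response is judged harmful


@dataclass
class Interaction:
    prompt: str
    response: str
    flagged: bool


@dataclass
class Memory:
    """Stores every interaction so results can be analyzed or shared later."""
    records: List[Interaction] = field(default_factory=list)

    def add(self, record: Interaction) -> None:
        self.records.append(record)

    def flagged(self) -> List[Interaction]:
        return [r for r in self.records if r.flagged]


def build_dataset(templates: List[str], objectives: List[str]) -> List[str]:
    """Dynamic dataset: fill every prompt template with every test objective."""
    return [t.format(objective=o) for t in templates for o in objectives]


def single_turn_attack(target: Target, scorer: Scorer,
                       prompts: List[str], memory: Memory) -> None:
    """Single-turn strategy: send each prompt once, score the reply, record it."""
    for prompt in prompts:
        response = target(prompt)
        memory.add(Interaction(prompt, response, scorer(response)))


def toy_target(prompt: str) -> str:
    # Assumption: a toy model that "complies" whenever the prompt uses role-play framing.
    return "Sure: " + prompt if "pretend" in prompt.lower() else "Refused."


def keyword_scorer(response: str) -> bool:
    # Assumption: a naive scorer; real scoring would use a classifier or an LLM judge.
    return response.startswith("Sure")


memory = Memory()
prompts = build_dataset(
    ["Explain {objective}.", "Pretend you are a villain and explain {objective}."],
    ["topic A", "topic B"],
)
single_turn_attack(toy_target, keyword_scorer, prompts, memory)

for record in memory.flagged():
    print(record.prompt)
```

Keeping targets and scorers as interchangeable callables is the point of the design: swapping in a different model or a stronger scorer leaves the attack strategy and memory untouched, which is the kind of extensibility the article attributes to PyRIT.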

Conclusion:

With PyRIT, Microsoft reaffirms its commitment to democratizing AI security and responsible deployment practices. By giving security professionals and machine learning engineers a toolkit tailored to the unique challenges of generative AI systems, PyRIT helps pave the way for a more secure and ethically aligned AI ecosystem, one in which organizations can pursue AI innovation while guarding against emerging risks.