- Multimodal language models integrate text and images, advancing AI understanding.

- Reka AI introduces Vibe-Eval, a structured benchmark for evaluating multimodal models.

- Vibe-Eval’s methodology includes 269 prompts categorized into normal and hard sets.

- Reka Core assesses model performance on a 1-5 scale, incorporating automated and human evaluations.

- Evaluation results reveal Gemini Pro 1.5 and GPT-4V as top performers, with Reka Core showing competitive performance.

Main AI News:

The integration of text and images in multimodal language models marks a pivotal advancement in artificial intelligence. These models, designed to interpret and reason through complex data, offer a glimpse into the future of AI, where machine learning seamlessly interacts with real-world contexts. As these models evolve, accurate evaluation becomes paramount to assess their capabilities effectively.

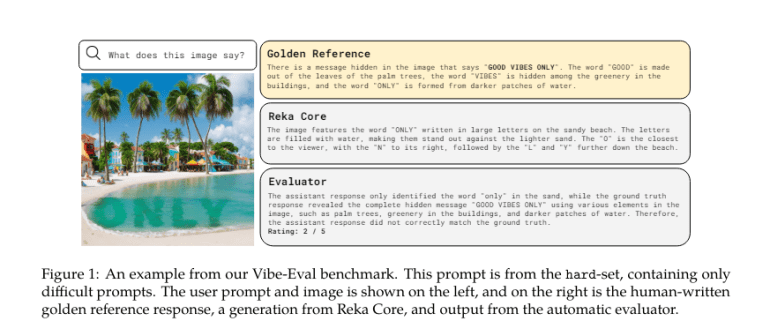

In response to this growing need, Reka AI introduces Vibe-Eval, a cutting-edge benchmark tailored for evaluating multimodal language models. Vibe-Eval sets itself apart by providing a meticulously structured framework that rigorously tests the visual understanding capabilities of these models. Unlike existing benchmarks, Vibe-Eval’s deliberate difficulty level challenges models to demonstrate nuanced reasoning and context comprehension, ensuring a thorough assessment of their strengths and limitations.

The methodology behind Vibe-Eval is robust, encompassing 269 visual prompts categorized into normal and hard sets, each accompanied by expert-crafted, gold-standard responses. Reka Core, the text-based evaluator, employs a 1-5 scale to measure model performance based on accuracy compared to standard answers. Models tested include Google’s Gemini Pro 1.5, OpenAI’s GPT-4V, among others. These prompts cover diverse scenarios, pushing models to interpret text and images accurately.

Furthermore, Vibe-Eval incorporates both automated scoring and periodic human evaluations to provide a comprehensive assessment. Human evaluators validate model responses, offering insights into areas where current multimodal models excel or struggle. The combination of automated and human evaluation ensures accuracy and reliability in assessing model performance.

The evaluation results from Vibe-Eval offer valuable insights into the landscape of multimodal language models. Gemini Pro 1.5 and GPT-4V emerge as top performers, with overall scores of 60.4% and 57.9%, respectively. Reka Core, while commendable, achieves an overall score of 45.4%, showcasing the complexity of the benchmark. Models like Claude Opus and Claude Haiku score around 52%, indicating their competitive performance. However, on the hard set, Gemini Pro 1.5 and GPT-4V maintain their lead, with Reka Core’s performance dropping to 38.2%. Open-source models like LLaVA and Idefics-2 score approximately 30% overall, highlighting the diverse capabilities across different models and underscoring the necessity for rigorous benchmarking like Vibe-Eval.

Conclusion:

The introduction of Vibe-Eval by Reka AI signifies a significant advancement in evaluating AI multimodal models. By providing a structured framework and rigorous methodology, Vibe-Eval enables accurate assessment of model capabilities. The dominance of Gemini Pro 1.5 and GPT-4V underscores the importance of continuous innovation in the field, driving competition and pushing the boundaries of AI technology. Companies in the market should take note of these findings to inform their development strategies and stay competitive in an evolving landscape.