TL;DR:

- Diffusion models have revolutionized generative AI, but their heavy computational requirements make them hard to run on mobile devices.

- SnapFusion is a text-to-image diffusion model that generates high-quality images on mobile devices in under 2 seconds.

- It optimizes the UNet architecture, reduces the number of denoising steps, and introduces an evolving training framework.

- SnapFusion employs a data distillation pipeline and a step distillation approach for efficient image generation.

- It generates 512×512 images with quality comparable to the state-of-the-art Stable Diffusion model.

Main AI News:

In the ever-evolving landscape of AI, one term has taken center stage: diffusion models. These models have been the driving force behind the revolutionary advancements in generative AI methods, enabling the generation of stunning photorealistic images in a matter of seconds, all through the power of text prompts. They have truly transformed content creation, image editing, super-resolution, video synthesis, and 3D asset generation.

However, there is a catch. Diffusion models are computationally expensive, and getting the most out of them requires high-end GPUs. Efforts have been made to run diffusion models on local computers, but these still demand high-end hardware. Cloud providers offer an alternative, but sending prompts and images to a remote server raises privacy concerns.

Moreover, there’s the on-the-go aspect to consider. Mobile devices have become the primary means of digital interaction for most people, yet running diffusion models on them is a daunting task given their limited compute and memory.

The potential of diffusion models is undeniable, but their computational cost must be addressed before they can be used practically on phones. Previous attempts to optimize inference on mobile devices fell short of a seamless user experience, and they did not quantitatively evaluate generation quality. That changes with SnapFusion.

Enter SnapFusion, a pioneering text-to-image diffusion model that generates high-quality images on mobile devices in under 2 seconds. It optimizes the UNet architecture and reduces the number of denoising steps, significantly boosting inference speed. Beyond that, SnapFusion uses an evolving training framework, a data distillation pipeline for the image decoder, and an improved step distillation objective.

The masterminds behind SnapFusion took a meticulous approach. They began by analyzing the architectural redundancy of Stable Diffusion v1.5 (SD-v1.5), aiming to derive a more efficient network. However, conventional pruning and architecture search techniques proved impractical because of their high training costs: altering the architecture risked degrading performance and required extensive fine-tuning with substantial computational resources. Instead, the team developed a training approach that preserves the pre-trained UNet’s performance while gradually improving its efficiency.

What sets SnapFusion apart is its focus on the UNet, a key bottleneck of the conditional diffusion model. While existing works concentrate on post-training optimizations, SnapFusion identifies redundancies in the architecture itself and uses an evolving training framework to produce a UNet that surpasses the original Stable Diffusion model while running significantly faster. In addition, a data distillation pipeline compresses and accelerates the image decoder.
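The article does not spell out how the architecture evolves. One plausible greedy step is sketched below, assuming each UNet block comes with a hypothetical quality drop (for example, the CLIP-score loss measured when the block is skipped) and a hypothetical latency saving (for example, from an on-device lookup table). The block with the least quality lost per millisecond saved is removed until a latency budget is met. The scoring rule, the `BlockStats` fields, and all numbers are illustrative assumptions rather than SnapFusion’s published procedure, which may also add blocks back.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BlockStats:
    """Hypothetical per-block measurements used to guide architecture evolution."""
    name: str
    quality_drop: float  # assumed: e.g. CLIP-score decrease when the block is skipped
    latency_ms: float    # assumed: latency saved by removing the block (lookup table)


def value_score(block: BlockStats) -> float:
    """Quality lost per millisecond saved; lower means cheaper to remove."""
    return block.quality_drop / block.latency_ms


def evolve_step(blocks: List[BlockStats], latency_budget_ms: float,
                current_latency_ms: float) -> Tuple[List[BlockStats], float]:
    """Remove the least valuable block while the model is still over budget."""
    if current_latency_ms <= latency_budget_ms or not blocks:
        return blocks, current_latency_ms  # within budget (or nothing left): no change
    victim = min(blocks, key=value_score)
    remaining = [b for b in blocks if b is not victim]
    return remaining, current_latency_ms - victim.latency_ms


if __name__ == "__main__":
    blocks = [
        BlockStats("attn_down_1", quality_drop=0.002, latency_ms=40.0),
        BlockStats("res_mid", quality_drop=0.010, latency_ms=20.0),
        BlockStats("attn_up_3", quality_drop=0.001, latency_ms=30.0),
    ]
    latency = 200.0
    while True:
        new_blocks, latency = evolve_step(blocks, latency_budget_ms=150.0,
                                          current_latency_ms=latency)
        if len(new_blocks) == len(blocks):
            break  # budget met or no blocks left
        blocks = new_blocks
    print([b.name for b in blocks], latency)  # ['res_mid'] 130.0
```

In a real pipeline each removal would presumably be followed by some fine-tuning before the next call; dividing quality by latency favors removing blocks that are slow but contribute little.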

During training, SnapFusion uses stochastic forward propagation: each cross-attention and ResNet block is executed only with a certain probability and is otherwise skipped. This augmentation makes the network robust to architecture permutations, so the contribution of each block can be assessed accurately and the architecture can evolve stably.
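A minimal sketch of stochastic forward propagation, assuming each block is a residual function that is applied with a fixed keep probability during training (and skipped, i.e. replaced by the identity, otherwise) and always applied at inference. The toy scalar activations, the `keep_prob` parameter, and the helper `forward_without` are illustrative assumptions; in the real UNet the blocks are cross-attention and ResNet modules operating on tensors.

```python
import random
from typing import Callable, Sequence

# Toy stand-in: a "block" maps an activation to a residual update of the same shape.
Block = Callable[[float], float]


def stochastic_forward(x: float, blocks: Sequence[Block], keep_prob: float,
                       training: bool, rng: random.Random) -> float:
    """Apply blocks in order; in training, each block is skipped with prob 1 - keep_prob."""
    for block in blocks:
        if training and rng.random() >= keep_prob:
            continue  # block dropped for this pass: the activation passes through unchanged
        x = x + block(x)  # residual form, so dropping a block never breaks shapes
    return x


def forward_without(x: float, blocks: Sequence[Block], index: int) -> float:
    """Deterministic pass with one block removed, to gauge that block's contribution."""
    kept = [b for i, b in enumerate(blocks) if i != index]
    return stochastic_forward(x, kept, keep_prob=1.0, training=False,
                              rng=random.Random(0))
```

Comparing `forward_without(x, blocks, i)` with the full pass is one simple way to score block `i`. Because the network was trained with blocks randomly dropped, such ablations should reflect its behavior more faithfully than they would for a network never exposed to missing blocks.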

The image decoder is made efficient through a distillation pipeline trained on synthetic data. Thanks to channel reduction, the compressed decoder has significantly fewer parameters and runs faster than its SD-v1.5 counterpart. To distill it, text prompts are first passed through the UNet of SD-v1.5 to obtain latent representations. Each latent is then decoded twice, once by the efficient decoder and once by the SD-v1.5 decoder, and the efficient decoder is trained to match the SD-v1.5 output.
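The data flow of this decoder distillation can be sketched as follows, assuming a mean-squared-error loss between the two decoded images. `teacher_unet_sample`, `teacher_decoder`, and `student_decoder` are hypothetical stand-ins (toy functions on flat lists of floats) for SD-v1.5’s text-conditioned sampling, SD-v1.5’s image decoder, and the compressed decoder. The point is the ordering: the prompt goes only through the teacher UNet, and both decoders see the same latent, so no real image dataset is required.

```python
from typing import Callable, List

Vector = List[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equally sized flat images."""
    if len(a) != len(b):
        raise ValueError("images must have the same size")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def decoder_distillation_loss(prompt: str,
                              teacher_unet_sample: Callable[[str], Vector],
                              teacher_decoder: Callable[[Vector], Vector],
                              student_decoder: Callable[[Vector], Vector]) -> float:
    """Synthetic-data distillation: both decoders decode the same teacher latent."""
    latent = teacher_unet_sample(prompt)   # text prompt -> latent from the SD-v1.5 UNet
    target = teacher_decoder(latent)       # reference image from the SD-v1.5 decoder
    prediction = student_decoder(latent)   # image from the compressed decoder
    return mse(prediction, target)         # only the student would be updated on this


def conv3x3_weights(c_in: int, c_out: int) -> int:
    """Weight count of a 3x3 convolution (bias ignored)."""
    return c_in * c_out * 9
```

The helper `conv3x3_weights` illustrates why channel reduction pays off: halving a 3×3 convolution’s input and output channels from 512 to 256 cuts its weights from 2,359,296 to 589,824, a 4× reduction. These channel counts are illustrative, not SnapFusion’s actual configuration.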

For step distillation, a vanilla distillation loss minimizes the difference between the student UNet’s prediction and the teacher UNet’s noisy latent representation. In addition, to improve the CLIP score, the authors introduce a CFG-aware distillation loss, where CFG refers to classifier-free guidance. In this objective, both the teacher and the student produce CFG-guided predictions, and the CFG scale is randomly sampled during training to strike a balance between FID and CLIP scores.
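A minimal sketch of the two objectives, assuming classifier-free guidance in its standard form, ε̃ = ε_u + w(ε_c − ε_u), where ε_u and ε_c are the unconditional and text-conditional predictions and w is the guidance scale. Predictions are toy lists of floats, the sampling range `(2.0, 14.0)` for w is a hypothetical choice, and `teacher_target` stands in for whatever target is derived from the teacher’s noisy latent; SnapFusion’s exact loss weighting is not reproduced here.

```python
import random
from typing import List, Tuple

Vector = List[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equally sized predictions."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def cfg(uncond: Vector, cond: Vector, scale: float) -> Vector:
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def vanilla_distillation_loss(student_pred: Vector, teacher_target: Vector) -> float:
    """Match the student's prediction to the teacher-derived target."""
    return mse(student_pred, teacher_target)


def cfg_aware_distillation_loss(student: Tuple[Vector, Vector],
                                teacher: Tuple[Vector, Vector],
                                rng: random.Random,
                                scale_range: Tuple[float, float] = (2.0, 14.0)) -> float:
    """Apply one randomly sampled CFG scale to both (uncond, cond) pairs, then compare."""
    scale = rng.uniform(*scale_range)  # hypothetical range; resampled on every call
    return mse(cfg(*student, scale), cfg(*teacher, scale))
```

Because the guidance scale multiplies the conditional-minus-unconditional difference, errors in that direction are amplified by w, which offers one intuition for why a CFG-aware loss can push CLIP scores up while the randomly sampled scale keeps FID in check.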

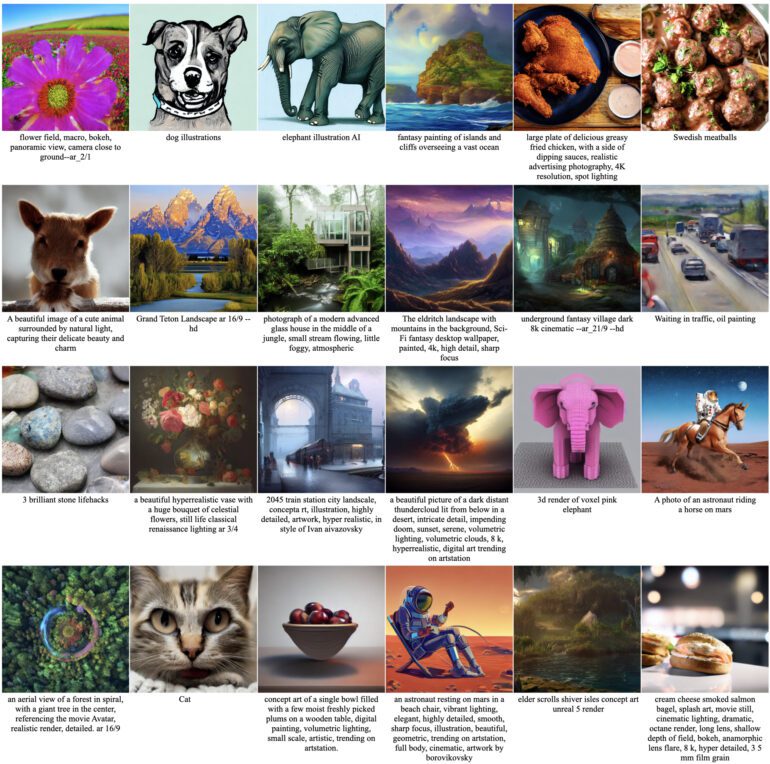

Thanks to step distillation and the more efficient network architecture, SnapFusion generates 512×512 images from text prompts on mobile devices in under 2 seconds, with quality on par with the state-of-the-art Stable Diffusion model. That makes SnapFusion a game-changer for running diffusion models on mobile devices.

Conclusion:

SnapFusion’s breakthrough as a mobile diffusion model opens up new possibilities in the market. By generating high-quality images on mobile devices in under 2 seconds, it removes the dependence on high-end GPUs or cloud servers and offers a practical solution for content creation, image editing, and more. This advancement has the potential to reshape the mobile AI landscape, putting powerful generative capabilities at users’ fingertips. Businesses in the AI and mobile technology sectors should take note of SnapFusion and explore the opportunities it presents for better user experiences and creative applications.