TL;DR:

- OpenAI’s ChatGPT has gained attention for its human-like language capabilities.

- Language models have contributed to advancements in AI and NLP.

- Models generating code from natural language face limitations.

- Princeton researchers propose InterCode, a lightweight framework for interactive coding.

- InterCode treats code as actions and execution feedback as observations.

- It is language- and platform-independent and compatible with existing seq2seq coding techniques.

- InterCode utilizes Docker environments for safe and repeatable execution.

- Researchers evaluate InterCode using Bash and SQL environments, showcasing its utility.

- Key contributions of InterCode include ease of use, model evaluation, and a standardized benchmark.

- InterCode is a promising step forward for interactive code generation.

Main AI News:

The release of ChatGPT, OpenAI’s cutting-edge chatbot, has made waves in the tech industry. The transformer-based model produces human-like responses and excels at tasks such as question answering, content generation, language translation, summarization, and even code generation. Language models like GPT, BERT, PaLM, and LLaMA have driven much of the recent progress in Artificial Intelligence, particularly in Natural Language Processing and Understanding.

Recently, there has been a surge in models that automatically generate code from natural language specifications. Thanks to extensive pre-training on large code corpora, these models perform impressively on static benchmarks. However, they still have limitations: errors such as typos can go uncorrected, there is a disconnect between generating code and executing it, and there is little room for human involvement.

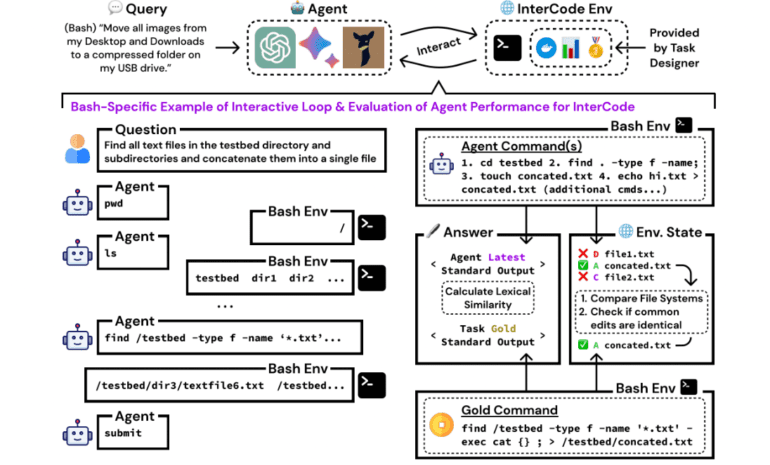

To address these challenges, researchers from Princeton University’s Department of Computer Science have introduced InterCode, a lightweight and flexible framework that casts interactive coding as a standard reinforcement learning (RL) environment. In InterCode, code is treated as actions, while execution feedback serves as observations. Framed this way, coding becomes an iterative loop of writing code, running it, and revising it. The framework is language- and platform-independent, so it can be adapted to multiple programming languages and environments.
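
To make the actions-and-observations framing concrete, here is a minimal Python sketch of a gym-style `reset`/`step` loop over SQL. It is an illustration under stated assumptions, not InterCode’s actual API: the class name `ToySQLEnv`, the `submit` action, the `(observation, done)` return value, and the use of an in-memory SQLite database are all hypothetical choices for this example, and rewards are omitted.

```python
import sqlite3
from typing import Any, List, Optional, Tuple


class ToySQLEnv:
    """Hypothetical gym-style environment: SQL strings are actions,
    execution output (or the error message) is the observation."""

    def __init__(self, setup_sql: str) -> None:
        self.setup_sql = setup_sql
        self.conn: Optional[sqlite3.Connection] = None
        self.last_result: List[Tuple[Any, ...]] = []

    def reset(self) -> str:
        # Start each episode from a freshly built database.
        if self.conn is not None:
            self.conn.close()
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript(self.setup_sql)
        self.last_result = []
        return "Database ready."

    def step(self, action: str) -> Tuple[str, bool]:
        """Execute one action and return (observation, done)."""
        if self.conn is None:
            raise RuntimeError("call reset() before step()")
        if action.strip().lower() == "submit":
            return "Episode finished.", True
        try:
            self.last_result = self.conn.execute(action).fetchall()
            return repr(self.last_result), False
        except sqlite3.Error as exc:
            # Errors are returned to the agent as feedback rather than raised.
            return f"Error: {exc}", False


env = ToySQLEnv(
    "CREATE TABLE users(name TEXT, age INT);"
    "INSERT INTO users VALUES ('ada', 36), ('alan', 41);"
)
env.reset()
print(env.step("SELECT name FROM users WHERE age > 40"))  # ("[('alan',)]", False)
print(env.step("SELEC name FROM users"))  # an "Error: ..." observation; episode continues
print(env.step("submit"))  # ('Episode finished.', True)
```

Returning the error text as an observation, instead of raising, is the key design choice: it lets the agent see what went wrong and try again within the same episode.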

InterCode also runs code in self-contained Docker environments to ensure safe and reproducible execution. It is compatible with conventional sequence-to-sequence (seq2seq) coding techniques, so existing methods can be plugged in directly, and it opens the door to new approaches designed specifically for interactive code generation.
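
InterCode isolates execution in Docker containers. Running Docker is beyond a short self-contained example, so the sketch below swaps in a lighter stand-in to illustrate the same property: each episode gets a brand-new in-memory SQLite database built from the same setup script, so actions in one episode (even destructive ones) cannot affect the next. This is not how InterCode itself implements isolation, and the function `run_episode` and the setup script are hypothetical.

```python
import sqlite3
from typing import List

SETUP = """
CREATE TABLE items(id INTEGER PRIMARY KEY, label TEXT);
INSERT INTO items(label) VALUES ('a'), ('b'), ('c');
"""


def run_episode(setup_sql: str, actions: List[str]) -> List[str]:
    """Execute actions against a brand-new, isolated database; return observations."""
    conn = sqlite3.connect(":memory:")  # fresh state, discarded afterwards
    try:
        conn.executescript(setup_sql)
        observations = []
        for action in actions:
            try:
                observations.append(repr(conn.execute(action).fetchall()))
            except sqlite3.Error as exc:
                observations.append(f"Error: {exc}")
        return observations
    finally:
        conn.close()


# A destructive episode cannot leak into the next one.
first = run_episode(SETUP, ["DELETE FROM items", "SELECT COUNT(*) FROM items"])
second = run_episode(SETUP, ["SELECT COUNT(*) FROM items"])
print(first)   # ['[]', '[(0,)]']
print(second)  # ['[(3,)]']
```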

To demonstrate InterCode’s utility, the research team built two interactive code environments with Bash and SQL as the action spaces, using tasks drawn from the static NL2Bash and Spider datasets. They evaluated several state-of-the-art language models with different prompting strategies, such as ReAct and Plan & Solve. The experiments demonstrated the benefits of interactive code generation and highlighted InterCode’s potential as a challenging benchmark for improving code understanding and generation.
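
Prompting strategies such as ReAct and Plan & Solve shape how a model reasons between actions. The sketch below leaves those details out and shows only the outer act, observe, revise loop they plug into. A scripted policy stands in for the language model (an assumption for illustration): it makes a typo, reads the error message returned as an observation, and corrects its query before submitting. All names here (`interact`, `scripted_policy`, the `users` table) are hypothetical.

```python
import sqlite3
from typing import Callable, List, Optional, Tuple

History = List[Tuple[str, str]]  # (action, observation) pairs
Policy = Callable[[str, History], str]

SETUP = (
    "CREATE TABLE users(name TEXT, age INT);"
    "INSERT INTO users VALUES ('ada', 36), ('alan', 41);"
)


def interact(setup_sql: str, question: str, policy: Policy, max_turns: int = 5):
    """Run one episode; the policy sees the question and the full history."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(setup_sql)
    history: History = []
    answer: Optional[list] = None
    try:
        for _ in range(max_turns):
            action = policy(question, history)
            if action == "submit":
                break
            try:
                answer = conn.execute(action).fetchall()
                observation = repr(answer)
            except sqlite3.Error as exc:
                answer = None
                observation = f"Error: {exc}"
            history.append((action, observation))
    finally:
        conn.close()
    return answer, history


def scripted_policy(question: str, history: History) -> str:
    """Hypothetical stand-in for an LLM that repairs its query after an error."""
    if not history:
        return "SELECT nme FROM users WHERE age > 40"  # deliberate typo
    _, last_observation = history[-1]
    if last_observation.startswith("Error"):
        return "SELECT name FROM users WHERE age > 40"
    return "submit"


answer, history = interact(SETUP, "Who is older than 40?", scripted_policy)
for action, observation in history:
    print(f"{action!r} -> {observation}")
print("final answer:", answer)  # final answer: [('alan',)]
```

In a static, single-shot setting the first (misspelled) query would simply be wrong; the interactive loop gives the model a chance to recover from it.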

The key contributions of InterCode can be summarized as follows:

- InterCode introduces a universal framework for interactive code generation that emphasizes ease of use, extensibility, and safety. Researchers can readily incorporate it into their experiments.

- Evaluating state-of-the-art models with InterCode revealed clear room for improvement, giving researchers concrete insights into how code generation capabilities can be strengthened.

- The InterCode benchmark serves as a standardized evaluation platform for interactive code generation, letting researchers compare models’ performance within a common framework. Because it can turn existing static code datasets into interactive tasks, it broadens the scope of evaluation.
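
The idea of turning a static dataset into interactive tasks can be sketched as follows, assuming each static record pairs a natural-language question with a gold SQL query. The wrapper executes the agent’s submitted query and the gold query against the same database and compares their results. The class and function names are hypothetical, every task sharing one setup script is a simplification, and the binary, order-insensitive execution-match reward is chosen for this example; it is not necessarily the reward InterCode uses.

```python
import sqlite3
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class StaticExample:
    """One record of a static dataset: a question and its gold query."""
    question: str
    gold_sql: str


@dataclass
class InteractiveTask:
    """Hypothetical wrapper that scores a submission by executing it."""
    question: str
    setup_sql: str
    gold_sql: str

    def _execute(self, sql: str) -> list:
        conn = sqlite3.connect(":memory:")  # fresh database for every run
        try:
            conn.executescript(self.setup_sql)
            return conn.execute(sql).fetchall()
        finally:
            conn.close()

    def reward(self, submitted_sql: str) -> float:
        """1.0 if the submission returns the same rows as the gold query
        (row order ignored, duplicates counted), else 0.0."""
        try:
            got = self._execute(submitted_sql)
        except sqlite3.Error:
            return 0.0
        expected = self._execute(self.gold_sql)
        return 1.0 if Counter(got) == Counter(expected) else 0.0


def to_interactive(examples: List[StaticExample], setup_sql: str) -> List[InteractiveTask]:
    # Simplification: every task shares one database setup script.
    return [InteractiveTask(e.question, setup_sql, e.gold_sql) for e in examples]


SETUP = (
    "CREATE TABLE users(name TEXT, age INT);"
    "INSERT INTO users VALUES ('ada', 36), ('alan', 41), ('grace', 45);"
)
static = [StaticExample("Who is older than 40?", "SELECT name FROM users WHERE age > 40")]
task = to_interactive(static, SETUP)[0]
print(task.reward("SELECT name FROM users WHERE age >= 41 ORDER BY name DESC"))  # 1.0
print(task.reward("SELECT name FROM users"))  # 0.0
print(task.reward("SELEC name FROM users"))  # 0.0
```

Scoring by execution results rather than by string match means any semantically equivalent query earns full reward, which suits an interactive setting where the final query may look quite different from the gold one.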

Conclusion:

The introduction of InterCode marks a significant milestone in AI and code generation. This lightweight framework addresses limitations of existing models by enabling interactive, iterative coding. Because it works across programming languages and platforms, InterCode has the potential to reshape code generation practices, and its standardized evaluation platform helps researchers compare and improve different models’ performance. The industry can anticipate faster progress in code understanding and generation, fostering innovation and opening new avenues for AI-driven development.