TL;DR:

- Image segmentation has advanced significantly over the last decade, driven largely by neural networks.

- 3D instance segmentation is a critical task with applications in robotics and augmented reality.

- Existing methods for 3D instance segmentation operate within a closed-set paradigm, limiting their ability to handle novel objects and free-form queries.

- Open-vocabulary approaches offer flexibility and enable zero-shot learning of object categories not present in training data.

- OpenMask3D is an open-vocabulary 3D instance segmentation model designed to overcome the limitations of closed-vocabulary approaches.

- OpenMask3D uses a two-stage pipeline that operates on RGB-D sequences and the corresponding reconstructed 3D geometry.

- By computing a mask feature per object instance, OpenMask3D can retrieve object instance masks based on their similarity to any given text query.

- OpenMask3D preserves information about novel and long-tail objects better than trained or fine-tuned counterparts.

Main AI News:

Image segmentation has made significant progress in recent years, thanks to advances in neural networks. Modern models can segment multiple objects in complex scenes within milliseconds and with impressive accuracy. 3D instance segmentation, however, is still catching up with the performance achieved in 2D image segmentation.

3D instance segmentation has emerged as a critical task with wide-ranging applications in robotics and augmented reality. Its goal is to predict object instance masks and their corresponding categories within a 3D scene. Despite notable progress, existing methods primarily operate within a closed-set paradigm, which limits the recognizable object categories to a predefined list closely tied to the training datasets.

This limitation presents two fundamental challenges. First, closed-vocabulary approaches struggle to understand scenes containing object categories not encountered during training; as a result, they may fail to recognize novel objects or misclassify them. Second, these methods are inherently limited in their ability to handle free-form queries, which reduces their usefulness in scenarios that require understanding and acting on specific object properties or descriptions.

To overcome these challenges, open-vocabulary approaches have been proposed. They can handle free-form queries and enable zero-shot learning of object categories not present in the training data. This flexibility benefits tasks such as scene understanding, robotics, augmented reality, and 3D visual search.

Open-vocabulary 3D instance segmentation could significantly improve the flexibility and practicality of applications that rely on understanding and manipulating complex 3D scenes. Enter OpenMask3D, a promising open-vocabulary 3D instance segmentation model.

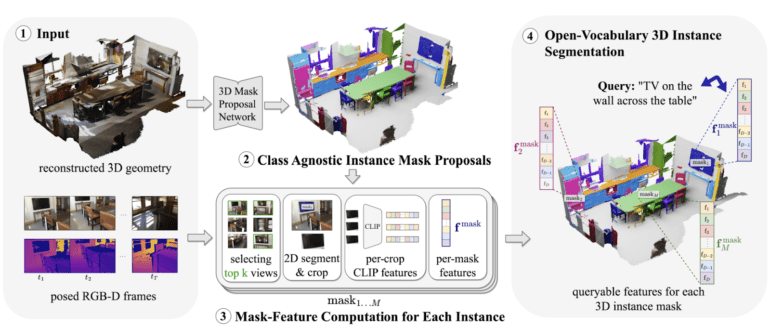

OpenMask3D aims to overcome the limitations of closed-vocabulary approaches by predicting 3D object instance masks and computing mask-feature representations that are not restricted to a predefined set of concepts. The model operates on RGB-D sequences and leverages the corresponding reconstructed 3D geometry.
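To make the link between an RGB-D sequence and the reconstructed 3D geometry concrete, here is a minimal sketch that counts how many points of one 3D instance mask are visible in a single frame. It assumes a pinhole camera model, a 4x4 world-to-camera matrix per frame, and a depth map in the same units as the point cloud; a point counts as visible if it projects inside the image and its depth agrees with the depth map (otherwise it is treated as occluded). The function names and the `depth_tol` parameter are hypothetical and not taken from the OpenMask3D code.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # 4x4 world-to-camera transform, row-major


def world_to_camera(p: Point3, T: Matrix4) -> Point3:
    """Apply a 4x4 rigid transform (rotation + translation) to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def count_visible_points(
    points: List[Point3],
    world_to_cam: Matrix4,
    intrinsics: Tuple[float, float, float, float],  # (fx, fy, cx, cy), assumed pinhole
    depth: Sequence[Sequence[float]],                # depth[v][u], same units as points
    depth_tol: float = 0.05,                         # hypothetical occlusion tolerance
) -> int:
    """Count mask points that land inside the image and agree with the depth map."""
    fx, fy, cx, cy = intrinsics
    height, width = len(depth), len(depth[0])
    visible = 0
    for p in points:
        x, y, z = world_to_camera(p, world_to_cam)
        if z <= 0:  # point is behind the camera
            continue
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if not (0 <= u < width and 0 <= v < height):
            continue  # projects outside the image
        if abs(depth[v][u] - z) <= depth_tol:
            visible += 1  # depth matches, so the point is not occluded
    return visible
```

Running this for every frame of the sequence yields a per-frame visibility count for each mask, which is the kind of signal a pipeline can use to decide which views show an instance clearly.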

OpenMask3D works in two stages: a class-agnostic mask proposal head, followed by a mask-feature aggregation module. For each proposed mask, the model identifies the frames in which the instance is clearly visible and extracts CLIP features from the best views. These features are then aggregated across multiple views into a single representation associated with each 3D instance mask. Because features are computed per instance, OpenMask3D can retrieve object instance masks by their similarity to any given text query, enabling open-vocabulary 3D instance segmentation beyond the limits of closed-vocabulary paradigms.
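The view selection and multi-view aggregation described above can be sketched as follows. This is a simplified illustration, assuming per-frame visibility counts are already available for a mask and that an image encoder such as CLIP has already produced one embedding per selected view; the encoder itself is not called here. Averaging L2-normalized embeddings is a simple aggregation choice made for this sketch, and `k` and the helper names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def top_k_views(visible_counts: Dict[int, int], k: int) -> List[int]:
    """Return up to k frame ids where the mask is most visible (ties broken by frame id)."""
    ranked = sorted(visible_counts, key=lambda f: (-visible_counts[f], f))
    return [f for f in ranked[:k] if visible_counts[f] > 0]


def aggregate_mask_feature(
    visible_counts: Dict[int, int],
    view_embeddings: Dict[int, Sequence[float]],  # frame id -> embedding of the mask's image crop
    k: int = 5,
) -> List[float]:
    """Average the unit-normalized embeddings of the top-k views into one mask feature."""
    frames = top_k_views(visible_counts, k)
    if not frames:
        raise ValueError("mask is not visible in any frame")
    dim = len(view_embeddings[frames[0]])
    total = [0.0] * dim
    for f in frames:
        for i, x in enumerate(l2_normalize(view_embeddings[f])):
            total[i] += x
    return l2_normalize([t / len(frames) for t in total])
```

Normalizing each view before averaging keeps a single view with a large-magnitude embedding from dominating, and normalizing the result means later comparisons against a query reduce to a dot product.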

OpenMask3D also preserves information about novel and long-tail objects better than trained or fine-tuned counterparts. Because it is not tied to a closed vocabulary, it can segment object instances from free-form queries about object properties such as semantics, geometry, affordances, and material properties.
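Query-time retrieval can be sketched as ranking the per-instance mask features by cosine similarity to a text embedding. The sketch assumes the text embedding comes from the text encoder paired with the image encoder used for the mask features (for CLIP, its text tower); here both are plain vectors, and the helper names are hypothetical. The same scoring also offers one simple way to label masks against a list of category names, which turns open-vocabulary features into conventional instance labels.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def rank_masks(
    query_embedding: Sequence[float],
    mask_features: Dict[str, Sequence[float]],
) -> List[Tuple[str, float]]:
    """Return (mask id, score) pairs, most similar to the query first."""
    scores = [(mask_id, cosine(query_embedding, feat)) for mask_id, feat in mask_features.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def label_masks(
    mask_features: Dict[str, Sequence[float]],
    label_embeddings: Dict[str, Sequence[float]],
) -> Dict[str, str]:
    """Assign each mask the label whose text embedding it is most similar to."""
    return {
        mask_id: max(label_embeddings, key=lambda name: cosine(feat, label_embeddings[name]))
        for mask_id, feat in mask_features.items()
    }
```

A free-form query such as "something to sit on" would simply be one more text embedding passed to `rank_masks`; nothing in the scoring depends on the query being a category name seen during training.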

Conclusion:

The introduction of OpenMask3D, a 3D instance segmentation model with open-vocabulary capabilities, could have significant implications for the market. Its ability to handle free-form queries and perform open-vocabulary 3D instance segmentation expands the range of applications in fields such as robotics, augmented reality, and 3D visual search, supporting better scene understanding, object recognition, and manipulation in complex 3D scenes. Because it preserves information about novel and long-tail objects better than trained or fine-tuned counterparts, OpenMask3D could help businesses unlock new opportunities and develop innovative solutions across various industries.