TL;DR:

- DiffFit: A new technique by Huawei Research for fine-tuning large diffusion models.

- Diffusion Probabilistic Models (DPMs): Mastering intricate probability distributions by learning the inverse of a stochastic process.

- DiffFit adapts proven tuning strategies from NLP research (BitFit) to image generation.

- Strategic modifications throughout the model improve the Frechet Inception Distance (FID) score.

- DiffFit outperforms other parameter-efficient fine-tuning strategies across multiple datasets.

- It enables cost-effective adaptation of low-resolution diffusion models to high-resolution image production.

- DiffFit surpasses prior state-of-the-art diffusion models in FID while using fewer trainable parameters and reducing training time.

- DiffFit sets a powerful baseline for efficient fine-tuning in picture production.

Main AI News:

In the realm of machine learning, grappling with intricate probability distributions remains a pivotal challenge. Enter Diffusion Probabilistic Models (DPMs), an innovative approach that seeks to master the inverse of a well-defined stochastic process, progressively eroding information. Denoising Diffusion Probabilistic Models (DDPMs), with their undeniable value in image synthesis, video production, and 3D editing, have emerged as notable frontrunners in this domain. However, their extensive parameter sizes and the multitude of inference steps per image have given rise to substantial computational costs. Sadly, not all users possess the financial means to shoulder these expenses. Thus, there arises an urgent need to explore strategies for effectively customizing publicly available, pre-trained diffusion models to cater to individual applications.

Addressing this concern, researchers at Huawei Noah’s Ark Lab have conducted a groundbreaking study. Drawing on the foundation of the Diffusion Transformer, they introduce DiffFit, a straightforward and effective fine-tuning technique specifically designed for large diffusion models. Inspired by recent advancements in NLP research, particularly BitFit, which demonstrated that adjusting the bias term could fine-tune pre-trained models for downstream tasks, the researchers sought to adapt these proven tuning strategies for image generation.

The initial step involved the immediate application of BitFit. To enhance feature scaling and generalizability, they incorporated learnable scaling factors into specific layers of the model, defaulting to a value of 1.0, while making dataset-specific adjustments. The empirical results obtained were enlightening. It became evident that strategically placing these modifications throughout the model proved critical in improving the Frechet Inception Distance (FID) score, a key metric for assessing image quality.

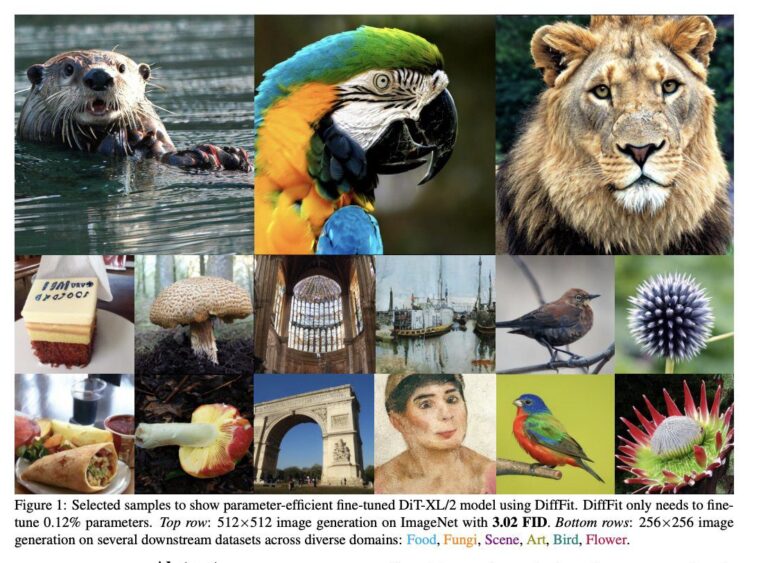

In their quest for parameter-efficient fine-tuning, the research team explored various strategies, including BitFit, AdaptFormer, LoRA, and VPT. By comparing their performance across eight downstream datasets, they made a remarkable discovery—DiffFit outperformed all other techniques. Not only did DiffFit excel in terms of the number of trainable parameters and the FID trade-off, but it also demonstrated impressive adaptability. The researchers found that this fine-tuning strategy could be effortlessly applied to low-resolution diffusion models, enabling seamless adaptation to high-resolution image production at a fraction of the cost. By treating high-resolution images as a distinct domain from their low-resolution counterparts, DiffFit achieved remarkable results.

On ImageNet 512×512, DiffFit eclipsed prior state-of-the-art diffusion models by leveraging a pretrained ImageNet 256×256 checkpoint and fine-tuning DIT for a mere 25 epochs. Astonishingly, DiffFit outperformed the original DiT-XL/2-512 model, boasting 640M trainable parameters and 3M iterations, in terms of FID. What’s more, DiffFit accomplished this feat with a significantly smaller parameter count of approximately 0.9 million, while also reducing training time by 30%.

Conclusion:

Huawei’s DiffFit technique represents a significant advancement in the field of efficient fine-tuning for large diffusion models. By adapting proven tuning strategies from NLP research and strategically incorporating modifications, DiffFit achieves remarkable improvements in image quality. Its superiority over other parameter-efficient fine-tuning strategies establishes it as the go-to approach for practitioners seeking to optimize their models while minimizing computational costs. DiffFit’s ability to seamlessly adapt low-resolution diffusion models to high-resolution image production further expands its market potential. This breakthrough empowers businesses in image synthesis, video production, and 3D editing to achieve higher quality outputs with reduced financial burdens, enhancing competitiveness and unlocking new possibilities in the market.