TL;DR:

- Neuroscientists have made significant progress in understanding the human brain by studying animal brains.

- Animal studies are of limited use for understanding complex human behaviors like mathematical reasoning.

- Researchers used a deep neural network to approximate the process of human number learning.

- Innate number sense may not be as important as previously thought.

- Spontaneous number neurons in neural networks do not contribute significantly to number learning.

- The study bridges artificial intelligence (AI) and human intelligence, revealing similarities in number processing.

- The network exhibited patterns similar to children’s learning of numbers.

- The model provides a simple approximation of the brain’s complexity, limiting its insights into human biology.

- The network shows promise for studying dyscalculia and exploring potential neural mechanisms and interventions.

Main AI News:

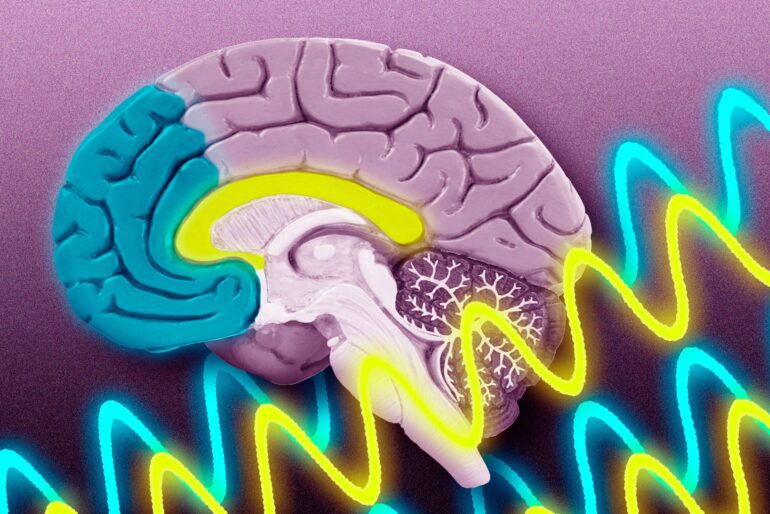

In the quest to unravel the mysteries of the human brain, neuroscientists have made remarkable progress over the past 50 years. By delving into the inner workings of animal brains, through experiments involving cats, rats, and monkeys, researchers have gained valuable insights into neuronal activity. These experiments have shed light on diverse phenomena such as optical illusions, memory formation, and drug addiction. While conducting similar experiments on humans is more challenging, studying animal neurons has proven instrumental in understanding these complex processes.

However, animal studies have their limits when it comes to understanding sophisticated human behaviors like mathematical reasoning. Even if animals can be trained to understand numbers, it remains uncertain whether they acquire numerical knowledge in the same manner as humans, particularly since they do not share our capacity for language. To understand how children learn about numbers, Vinod Menon, a Stanford professor of psychiatry and behavioral sciences, together with Percy Mistry, a research scholar in Menon’s lab, took an unconventional approach. Rather than studying biology directly, they sought to replicate the process of human number learning using a deep neural network.

Initially modeled after the brain, deep neural networks have been extensively employed to explore the inner workings of the visual system. By training a brain-like network to recognize numbers, Menon and Mistry ventured into uncharted territory, gaining insights into human number learning that would have otherwise been unattainable. Their groundbreaking findings, published in Nature Communications, challenge the notion that an innate number sense plays a paramount role, as previously proposed by other researchers.

Given the ethical limitations of conducting neurophysiological experiments on humans, this type of research holds tremendous significance in comprehending the intricate capabilities of the human brain. According to Menon, “Without constructing models like this, it becomes arduous to gain meaningful insights into the neural mechanisms underlying complex human cognitive processes.”

Probing the “Spontaneous Number Neurons”

In a prior study, researchers trained a deep neural network to identify images and stumbled upon a surprising discovery. Certain neurons within the network exhibited sensitivity to numbers. Remarkably, these neurons responded vigorously when presented with images depicting specific quantities, even though they had never been trained to recognize the number of objects within an image. These findings seemed to support the notion that numerosity, to some extent, might be innate. They suggested that children might possess an inherent sense of numbers, without requiring explicit instruction. Consequently, future learning might depend on this innate capacity.

However, no actual experimentation had been conducted to validate whether these “spontaneous number neurons” facilitate number learning. To explore this, researchers needed to identify the number-sensitive neurons within a neural network designed for object recognition. They would then have to retrain this network to report the number of objects in an image. Subsequently, they could assess whether these neurons played a role in the network’s ability to learn the assigned task. This exact procedure was carried out by Mistry, Menon, and their colleagues.

Surprisingly, they discovered that the spontaneous number neurons did not contribute to learning at all. Most neurons that initially demonstrated sensitivity to numbers either lost this trait during training or became sensitive to a different number altogether. Moreover, removing the neurons that remained responsive to the same numbers during the learning process had no significant impact on the network’s final performance.
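The identify, retrain, and remove procedure described above can be illustrated with a minimal Python sketch. It is not the study's code: the function names, the selectivity index, and the 0.5 threshold are all assumptions chosen for illustration, and the activations are expected to come from whatever network layer is being probed. The sketch finds units whose mean response peaks for one numerosity, labels what happened to each of those units after retraining, and zeroes out selected units to mimic removal.

```python
import numpy as np

def tuning_curves(activations, numerosities):
    """Mean response of each unit to each numerosity.

    activations: array of shape (n_images, n_units)
    numerosities: integer array of shape (n_images,)
    Returns (values, curves) with curves of shape (n_values, n_units).
    """
    values = np.unique(numerosities)
    curves = np.stack(
        [activations[numerosities == v].mean(axis=0) for v in values]
    )
    return values, curves

def number_sensitive_units(activations, numerosities, min_selectivity=0.5):
    """Flag units whose peak response clearly exceeds their other responses.

    The index and threshold are illustrative assumptions, not the
    criterion used in the study.
    """
    values, curves = tuning_curves(activations, numerosities)
    peak = curves.max(axis=0)
    rest = (curves.sum(axis=0) - peak) / (len(values) - 1)
    selectivity = (peak - rest) / (np.abs(peak) + np.abs(rest) + 1e-12)
    preferred = values[curves.argmax(axis=0)]
    return selectivity >= min_selectivity, preferred

def classify_after_training(mask_before, pref_before, mask_after, pref_after):
    """Label each initially number-sensitive unit as lost, switched, or stable."""
    labels = {}
    for unit in np.flatnonzero(mask_before):
        if not mask_after[unit]:
            labels[int(unit)] = "lost"
        elif pref_after[unit] != pref_before[unit]:
            labels[int(unit)] = "switched"
        else:
            labels[int(unit)] = "stable"
    return labels

def ablate(activations, mask):
    """Return a copy of the activations with the masked units silenced."""
    out = activations.copy()
    out[:, mask] = 0.0
    return out
```

Measuring the effect of removal on final performance would additionally require retraining the network with the selected units silenced and comparing its accuracy to an intact network, which this sketch does not attempt.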

Building Bridges Between AI and Human Intelligence

Although the study was entirely conducted using computer simulations, there are compelling reasons to believe that it sheds light on the workings of the human brain. The research team intentionally initiated the study with an object-recognition network that had previously shown similarities to certain aspects of the monkey visual system. After training, the number-sensitive neurons within the neural network exhibited behaviors akin to those found in the monkey brain.

While it is not feasible to conduct invasive experiments on human subjects for direct comparisons between the network and the human brain, the team devised alternative approaches to tackle this challenge. By examining the pool of number-sensitive neurons as a collective entity, they identified two distinct strategies the network used to tell numbers apart. The first strategy relied on a linear number line, which made it easy to distinguish the endpoints, such as 1 and 9, but harder to differentiate numbers in the middle, like 4, 5, and 6. The second strategy centered on the midpoint of the number line, treating 4, 5, and 6 as markedly distinct from one another.

This pattern aligns with the learning process observed in humans. As children develop their number sense, they initially exhibit sensitivity to lower and higher numbers. Over time, they begin incorporating the midpoint of the number line as a reference point. Menon shared his excitement, stating, “It was fascinating to witness the emergence of number line representations similar to those observed in children, despite not explicitly training the neural network for such behavior.”

Nevertheless, it would be premature to conclude that human children learn in precisely the same manner as this neural network. According to Mistry, the model ultimately represents “a very, very simple approximation of what the brain is doing, even with all its complexity.” While the simplicity of the model facilitates study and training, its capacity to reveal insights into human biology remains limited.

Nonetheless, the model’s ability to approximate the number-learning process in children has raised high hopes for Mistry and Menon. Menon has conducted extensive research on dyscalculia, a learning disability that affects numerical and mathematical skills, and the team hopes to use the network to investigate potential neural mechanisms underlying the condition. By implementing these mechanisms within the network and evaluating their impact on number learning, the researchers hope to formulate hypotheses concerning the various potential causes and explore possible interventions. As Mistry puts it, “We can use this model as a sandbox.”

Conclusion:

This research highlights the potential of deep neural networks to enhance our understanding of the human brain’s complex capabilities. By shedding light on the process of number learning, it challenges existing notions of innate number sense and offers valuable insights into neurological disorders like dyscalculia. For the market, this opens up avenues for developing AI-powered educational tools and interventions that can assist individuals with numerical and mathematical challenges. The fusion of AI and human intelligence continues to drive innovation, bringing us closer to a future where cognitive processes are better understood and harnessed for practical applications.