TL;DR:

- The I2D2 framework enables smaller language models to compete with much larger ones.

- I2D2 overcomes the challenge of lower generation quality in smaller models through neurologic decoding and a critic model.

- It outperforms GPT-3 in accuracy when generating generics and exhibits enhanced diversity in its content.

- I2D2’s findings have significant implications for the natural language processing market, highlighting the potential of smaller and more efficient models.

- Smaller models can rival larger models in specific tasks, and self-improvement is not exclusive to large-scale models.

Main AI News:

Language models have made monumental strides, largely attributed to their sheer scale, which enables impressive capabilities across a wide range of natural language processing tasks. But a thought-provoking question lingers: does size alone dictate model performance? A recent study challenges this notion, examining whether smaller models can contend with their larger counterparts despite their reduced size. By combining distillation techniques, constrained decoding, and self-imitation learning, the study introduces the I2D2 framework, which enables smaller language models to surpass models a hundred times their size.

Empowering Smaller Models: The I2D2 Advantage

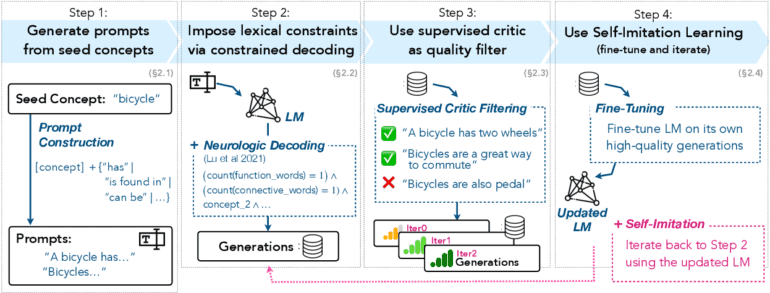

A primary challenge for smaller language models is their lower generation quality. The I2D2 framework addresses this through two key innovations. First, it uses neurologic decoding to perform constrained generation, yielding incremental improvements in the quality of generated content. Second, it adds a small critic model that filters out low-quality generations, which yields substantial performance gains. The language model is then fine-tuned on the high-quality outputs that survive critic filtering, a self-imitation step that can be repeated so the smaller model keeps improving.
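The generate, filter, and fine-tune loop described above can be illustrated with a toy sketch. This is not the study's implementation: `ToyGenerator`, `toy_critic`, and `run_rounds` are hypothetical names, the "language model" is just a weighted bag of continuations, the constraint is simply that the concept must appear in every output, and the critic is a lookup table standing in for a trained classifier.

```python
import random
from collections import Counter


class ToyGenerator:
    """Hypothetical stand-in for a small language model:
    a weighted bag of possible continuations."""

    def __init__(self, continuations):
        # Every continuation starts out equally likely.
        self.weights = Counter({c: 1.0 for c in continuations})

    def generate(self, concept, n, rng):
        """Stand-in for constrained generation: every output
        is forced to begin with the given concept."""
        options = list(self.weights)
        probs = [self.weights[o] for o in options]
        picks = rng.choices(options, weights=probs, k=n)
        return [f"{concept} {p}" for p in picks]

    def fine_tune(self, accepted):
        """Stand-in for self-imitation: upweight continuations
        that appeared in critic-approved outputs."""
        for statement in accepted:
            continuation = statement.split(" ", 1)[1]
            self.weights[continuation] += 1.0


def toy_critic(statement):
    """Hypothetical critic returning a score in [0, 1].
    A real critic would be a trained classifier; this is a lookup."""
    known_true = {"birds can fly", "birds have feathers"}
    return 1.0 if statement in known_true else 0.0


def run_rounds(generator, concept, rounds, n, threshold, seed=0):
    """Run several generate -> filter -> fine-tune rounds and
    return the fraction of candidates accepted in each round."""
    rng = random.Random(seed)
    acceptance = []
    for _ in range(rounds):
        candidates = generator.generate(concept, n, rng)
        accepted = [c for c in candidates if toy_critic(c) >= threshold]
        acceptance.append(len(accepted) / len(candidates))
        generator.fine_tune(accepted)
    return acceptance


if __name__ == "__main__":
    gen = ToyGenerator(["can fly", "have feathers", "have gills", "are mammals"])
    print(run_rounds(gen, "birds", rounds=5, n=20, threshold=0.5))
    print(gen.weights)
```

In this toy setup, rejected continuations are never reinforced, so the generator's weights shift toward statements the critic accepts. That is the intuition behind filtering before fine-tuning, although a real system would update model parameters rather than counts.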

Revolutionizing Commonsense Knowledge Generation

In the realm of generating commonsense knowledge surrounding everyday concepts, the I2D2 framework showcases remarkable prowess. Unlike other approaches reliant on GPT-3 generations for knowledge distillation, I2D2 stands independently, firmly grounded on a model that is a mere fraction of GPT-3’s size. Nonetheless, I2D2 generates an impressive corpus of high-quality, generic commonsense knowledge.

Outperforming the Giants: I2D2’s Triumph

The comparative analysis shows I2D2's superiority over GPT-3 in generating accurate generics. A comparison of the accuracy of generics from GenericsKB, GPT-3, and I2D2 makes it clear that I2D2 attains higher accuracy despite its smaller size. The framework's critic model plays a pivotal role in distinguishing true from false commonsense statements, and it surpasses GPT-3 at this task.

Unleashing Enhanced Diversity and Iterative Advancements

In addition to higher accuracy, I2D2 shows greater diversity in its generated content than GenericsKB. Its generations are ten times more diverse, and the output continues to improve with successive iterations of self-imitation. These findings attest to the robustness of I2D2 in generating accurate and diverse generic statements, all while using a model that is a fraction of the size of its competitors.

Far-reaching Implications

The insights from this study carry far-reaching implications for the field of natural language processing. They underscore that smaller, more efficient language models have considerable room for improvement. With algorithmic techniques such as those demonstrated by I2D2, smaller models can rival their larger counterparts in specific tasks. Furthermore, the study challenges the preconceived notion that self-improvement is exclusive to large-scale language models, as I2D2 shows that smaller models can also iterate on their own outputs and raise the quality of their generated content.

Conclusion:

The introduction of the I2D2 framework marks a significant development in the AI market. The ability of smaller language models to rival larger models in terms of performance and generation quality opens up new possibilities for businesses and organizations. This breakthrough demonstrates that size alone does not determine model effectiveness, emphasizing the importance of innovative techniques like neurologic decoding and self-imitation learning. As smaller models continue to improve and refine their capabilities, they present a viable and cost-effective option for companies seeking to leverage AI in their language processing tasks. This paradigm shift signifies a more level playing field, with diverse and efficient language models catering to specific requirements in the market.