TL;DR:

- Deepfakes, artificially generated media, pose a significant threat in today’s digital landscape.

- Intel Labs has developed cutting-edge real-time deepfake detection technology.

- Deepfakes utilize advanced AI models to create highly realistic content, making it challenging to distinguish between real and fake.

- Intel’s detection technology focuses on identifying genuine attributes, such as heart rate, to differentiate between humans and synthetic personas.

- The technology is being implemented across various sectors and platforms to combat the spread of deepfakes and misinformation.

- Deepfake technology has both potential for misuse and legitimate applications, such as enhancing privacy and control over one’s digital identity.

- Intel prioritizes ethical development and collaborates with experts to evaluate and refine its AI systems.

- The deepfake detection technology offers a scalable and effective solution to the growing problem of deepfakes.

Main AI News:

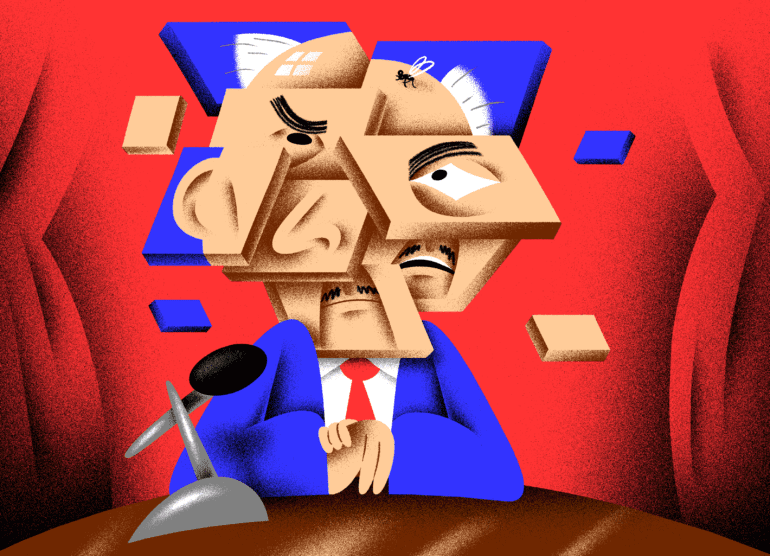

In today’s digital landscape, the proliferation of deepfakes presents a pressing challenge for society. What was once considered a novelty now poses a significant threat: deepfakes can be exploited to spread misinformation, support hacking attempts, and fuel other malicious activity. In response, Intel Labs has developed a real-time deepfake detection technology. Ilke Demir, a senior research scientist at Intel, explains how deepfakes work, how Intel’s detection method operates, and the ethical considerations behind developing and deploying such tools.

Deepfakes are videos, audio clips, or images in which the depicted subject or action is artificially generated by artificial intelligence (AI). Built on deep-learning architectures such as generative adversarial networks, variational auto-encoders, and other AI models, deepfakes can be highly realistic and convincing. These models can generate synthetic personalities, synchronize lip movements, and even convert text into lifelike images, blurring the line between what is real and what is fabricated.

The term “deepfake” is also sometimes applied to authentic content that has been manipulated. A prominent example is the 2019 video of former House Speaker Nancy Pelosi, which was altered to make her appear inebriated.

Demir’s team focuses on computational deepfakes, meaning synthetic content generated entirely by machines. “The reason that it is called deepfake is that there is this complicated deep-learning architecture in generative AI creating all that content,” Demir explains. These computationally rendered deepfakes pose a significant risk because cybercriminals and other malicious actors frequently exploit them. Uses range from political misinformation campaigns and non-consensual adult content involving public figures to market manipulation and impersonation for financial gain, which underscores the urgency of developing effective detection methods.

Addressing this concern, Intel Labs has developed one of the world’s first real-time deepfake detection platforms. Rather than searching for telltale signs of forgery, the technology looks for genuine attributes, such as heart rate, to identify deepfakes. Using a method called photoplethysmography, the system analyzes subtle color variations in veins, which reflect changes in blood oxygen content and are computationally visible even though the eye cannot see them. From these physiological signals, Intel’s detection technology differentiates between real humans and synthetic personas.

“We are trying to look at what is real and authentic. Heart rate is one of [the signals],” explains Demir. “So when your heart pumps blood, it goes to your veins, and the veins change color because of the oxygen content. It is not visible to our eye; I cannot just look at this video and see your heart rate. But that color change is computationally visible.”
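The idea that a pulse is "computationally visible" in color changes can be illustrated with a minimal sketch. This is not Intel’s detector, and the article does not describe its implementation; the function names, the 0.7 to 4 Hz search band, and the synthetic trace below are all assumptions made for illustration. The sketch takes a per-frame average green intensity from a skin region and picks the dominant frequency within a plausible heart-rate range.

```python
import numpy as np


def estimate_heart_rate_bpm(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Estimate pulse rate from a per-frame mean green value of a skin region.

    Hypothetical helper: the band limits (roughly 42 to 240 bpm) are an
    assumption, not a value taken from the article.
    """
    signal = np.asarray(green_means, dtype=float)
    signal = signal - signal.mean()  # remove the constant skin-tone offset

    # Power spectrum of the color trace and the frequency of each bin (Hz).
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)

    # Only consider frequencies that could plausibly be a heartbeat.
    band = (freqs >= low_hz) & (freqs <= high_hz)
    if not band.any():
        raise ValueError("signal too short for the requested band")

    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return peak_hz * 60.0  # convert Hz to beats per minute


def synthetic_trace(bpm, fps=30.0, seconds=10.0, noise=0.05, seed=0):
    """Stand-in for real video data: a faint periodic color change plus noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fps * seconds)) / fps
    pulse = 0.5 * np.sin(2 * np.pi * (bpm / 60.0) * t)
    return 120.0 + pulse + rng.normal(0.0, noise, t.size)


if __name__ == "__main__":
    trace = synthetic_trace(bpm=72.0)
    print(round(estimate_heart_rate_bpm(trace, fps=30.0), 1))
```

A real system would first need to locate skin regions in each frame and would have to cope with motion, lighting changes, and video compression. Deciding whether a recovered signal looks like a genuine pulse is a separate classification step that this sketch does not attempt.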

Intel is rolling out its deepfake detection technology across a range of sectors and platforms, including social media tools, news agencies, broadcasters, content creation tools, startups, and nonprofits. By integrating it into their workflows, these organizations can identify deepfakes and limit the spread of misinformation, protecting the integrity of their platforms and content.

While the potential for deepfake misuse remains a concern, the technology also has legitimate applications. One notable use case is the creation of avatars that better represent individuals in digital environments. Demir highlights a specific example called “MyFace, MyChoice,” which uses deepfakes to enhance privacy on online platforms. This approach lets individuals control their online appearance by replacing their faces with “quantifiably dissimilar deepfakes,” so that automatic face-recognition algorithms cannot recognize them. With these privacy controls, deepfakes give individuals greater autonomy and authority over their digital identities.

To develop and deploy its AI technologies ethically and responsibly, Intel collaborates closely with anthropologists, social scientists, and user researchers to evaluate and refine its deepfake detection systems. Intel also maintains a Responsible AI Council, made up of multidisciplinary experts who review AI systems against ethical principles and look for potential biases, limitations, and possible harmful use cases. Through this approach, Intel aims to ensure that its AI technologies, including deepfake detection, serve humanity’s best interests and contribute positively to society.

“We have legal people, we have social scientists, we have psychologists, and all of them are coming together to pinpoint the limitations to find if there’s bias: algorithmic bias, systematic bias, data bias, any type of bias,” says Demir. The team thoroughly inspects the code to identify potential areas where the technology could harm individuals.

Conclusion:

Intel Labs’ deepfake detection technology arrives as the threat of deepfakes continues to escalate and organizations across sectors look for reliable ways to combat misinformation and protect their platforms. Its real-time detection, coupled with the company’s stated commitment to ethical development, positions Intel as a leader in the field and points toward more responsible and secure AI technologies in the market.