TL;DR:

- Meta introduces CM3leon, a single multimodal model that generates both text and images.

- CM3leon handles both text and images and reaches state-of-the-art text-to-image quality with roughly one-fifth of the training compute used by earlier transformer-based methods.

- Large-scale multitask instruction tuning improves CM3leon’s performance across a range of tasks.

- CM3leon outperforms Google’s Parti text-to-image model, setting a new state of the art.

- CM3leon also performs well on vision-language tasks such as captioning and visual question answering, showing promise for both generating and understanding images.

Main AI News:

Generative AI research has seen a surge of interest in both natural language processing and systems that produce images from text. In a recent study, Meta unveiled CM3leon (pronounced “chameleon”), a foundation model that can generate both text and images.

CM3leon is the first multimodal model trained with a recipe adapted from text-only language models: a large-scale retrieval-augmented pre-training stage followed by a second, multitask supervised fine-tuning (SFT) stage. Its architecture is a decoder-only transformer, the same design used by popular text-based models, but unlike those models CM3leon processes both text and images. Despite being trained with about one-fifth of the compute used by previous transformer-based methods, CM3leon achieves state-of-the-art text-to-image generation performance.
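The article does not describe how the retrieval step works. As a rough illustration only, the sketch below shows one common form of retrieval augmentation, assuming precomputed dense embeddings: find the stored documents most similar to a query by cosine similarity, then prepend them to the training document. The function names, the `<doc>` separator token, and the toy vectors are hypothetical and are not CM3leon’s actual retriever or data format.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_top_k(query: Sequence[float],
                   memory: Dict[str, Sequence[float]],
                   k: int) -> List[Tuple[str, float]]:
    """Return the k memory entries most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in memory.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_training_sequence(retrieved_docs: List[List[str]],
                            target_doc: List[str],
                            sep: str = "<doc>") -> List[str]:
    """Prepend retrieved documents to the target document.

    The "<doc>" separator is a hypothetical token chosen for this sketch.
    """
    sequence: List[str] = []
    for doc in retrieved_docs:
        sequence.extend(doc)
        sequence.append(sep)
    sequence.extend(target_doc)
    return sequence


# Toy usage with made-up 2-D embeddings.
memory = {"cat_photo": [1.0, 0.0], "dog_photo": [0.0, 1.0], "kitten_caption": [0.9, 0.1]}
hits = retrieve_top_k([1.0, 0.0], memory, k=2)
print([doc_id for doc_id, _ in hits])  # ['cat_photo', 'kitten_caption']
```

Placing the retrieved documents before the target in the context means a left-to-right decoder can attend to them while predicting every token of the target document.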

What distinguishes CM3leon is that it combines the flexibility and power of autoregressive models with low training and inference costs. It can generate sequences of text and images conditioned on arbitrary sequences of text and image input. This is why it is described as a causal masked mixed-modal (CM3) model, and it goes beyond earlier models that could handle only text-to-image or only image-to-text generation.
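To make “causal masked” more concrete, here is a minimal sketch of the causal-masking idea, assuming text and images have already been converted into one flat sequence of discrete tokens. A span is cut out, a sentinel is left in its place, and the span is moved to the end, so an ordinary left-to-right decoder learns to fill it in while still seeing context on both sides. The sentinel `<mask:0>`, the image-token names, and all function names are hypothetical illustrations, not CM3leon’s actual tokenizer or training code.

```python
import random
from typing import List, Sequence, Tuple

MASK = "<mask:0>"  # hypothetical sentinel token


def causal_mask(tokens: Sequence[str], start: int, end: int) -> List[str]:
    """Replace tokens[start:end] with a sentinel and append sentinel + span at the end."""
    if not 0 <= start < end <= len(tokens):
        raise ValueError("need 0 <= start < end <= len(tokens)")
    span = list(tokens[start:end])
    return list(tokens[:start]) + [MASK] + list(tokens[end:]) + [MASK] + span


def random_causal_mask(tokens: Sequence[str], rng: random.Random) -> List[str]:
    """Pick a random non-empty span and apply causal_mask to it."""
    if not tokens:
        raise ValueError("cannot mask an empty sequence")
    start = rng.randrange(len(tokens))
    end = rng.randrange(start + 1, len(tokens) + 1)
    return causal_mask(tokens, start, end)


def next_token_targets(sequence: Sequence[str]) -> Tuple[List[str], List[str]]:
    """Shift by one: at position i the decoder sees sequence[:i+1] and predicts sequence[i+1]."""
    return list(sequence[:-1]), list(sequence[1:])


# A mixed-modal sequence: caption tokens followed by (hypothetical) image tokens.
tokens = ["a", "red", "cat", "<img_5>", "<img_9>"]
print(causal_mask(tokens, 3, 5))
# ['a', 'red', 'cat', '<mask:0>', '<mask:0>', '<img_5>', '<img_9>']
```

Masking the image tokens, as in the usage line, turns the example into a text-to-image training case; masking caption tokens instead would yield an image-to-text case, which is how one objective can cover both directions.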

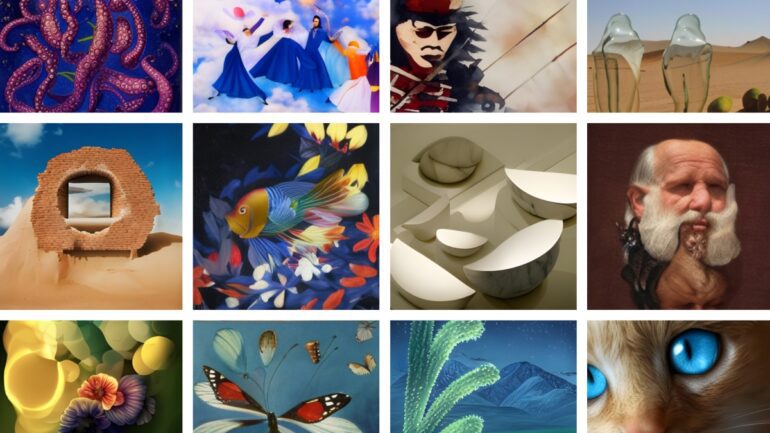

The researchers found that applying large-scale multitask instruction tuning to CM3leon for both image and text generation substantially improves its performance on tasks such as image caption generation, visual question answering, text-based image editing, and conditional image generation. The team also added an independently trained super-resolution stage, which lets CM3leon produce higher-resolution images than the base model outputs directly.

According to the results, CM3leon outperforms Google’s Parti text-to-image model and sets a new state of the art on the widely used zero-shot MS-COCO image generation benchmark, with an FID (Fréchet Inception Distance) of 4.88 (lower is better). This result highlights the potential of retrieval augmentation and scaling strategies for improving autoregressive models. CM3leon also performs well on vision-language tasks such as long-form captioning and visual question answering, achieving zero-shot performance competitive with larger models trained on larger datasets, even though it was trained on only three billion text tokens.
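For readers unfamiliar with the metric, FID compares the statistics of features extracted by a pretrained Inception-v3 network from real images and from generated images. If $\mu_r, \Sigma_r$ are the mean and covariance of the real-image features and $\mu_g, \Sigma_g$ those of the generated-image features, the standard definition is:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

Identical feature distributions give an FID of 0, so a score of 4.88 means CM3leon’s generated images are statistically closer to the real MS-COCO images than those of models with higher scores.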

CM3leon’s strong performance across a broad range of tasks leaves the research team optimistic. They envision future models that generate and understand images with even greater accuracy, extending what AI can achieve with multimodal inputs and outputs.

Conclusion:

CM3leon represents a significant step forward for generative AI. Unlike most previous models, it can generate both text and images, and its combination of strong performance and training efficiency opens up new applications across industries. Businesses in sectors such as content creation, marketing, and e-commerce could benefit from its image generation, image captioning, and visual question answering capabilities. Its success on diverse vision-language tasks, achieved with comparatively little training data, suggests it could reshape the market by enabling more accurate and efficient text-to-image generation.