TL;DR:

- Researchers introduce collaborative score distillation (CSD) to enhance text-to-image diffusion models for consistent visual synthesis.

- Text-to-image diffusion models generate high-quality images by utilizing image-text pairings and effective topologies.

- CSD expands the capabilities of diffusion models without altering them, enabling synthesis across complex modalities.

- The technique leverages modality-specific training data and addresses challenges in high-dimensional visual generation tasks.

- CSD ensures consistency in image synthesis and editing by optimizing generative sampling through score distillation.

- The study also introduces CSD-Edit, combining CSD with instruction-guided picture diffusion models for reliable visual editing.

- Applications include panorama picture editing, video editing, and 3D scene reconstruction, showcasing the adaptability of the methodology.

Main AI News:

Researchers from KAIST and Google have introduced a groundbreaking technique known as collaborative score distillation (CSD) in the field of AI. This method extends the capabilities of text-to-image diffusion models, enabling consistent visual synthesis. These models have shown tremendous potential in generating high-quality and diverse images by leveraging billions of image-text pairings and effective topologies. Their applications have expanded into domains like image-to-image translation, controlled creation, and customization. Notably, they can now extend beyond 2D pictures into complex modalities without requiring changes to the diffusion models themselves, thanks to modality-specific training data.

The primary focus of this study is to tackle the challenge of applying pre-trained text-to-image diffusion models to high-dimensional visual generation tasks that go beyond 2D pictures. The researchers propose leveraging the knowledge acquired by these models and utilizing modality-specific training data to overcome this challenge. They hypothesize that complex visual data, such as films and 3D environments, can be represented as collections of pictures with consistency specific to a particular modality. For example, a 3D scene comprises multiple frames with view consistency, while a movie consists of frames with temporal consistency.

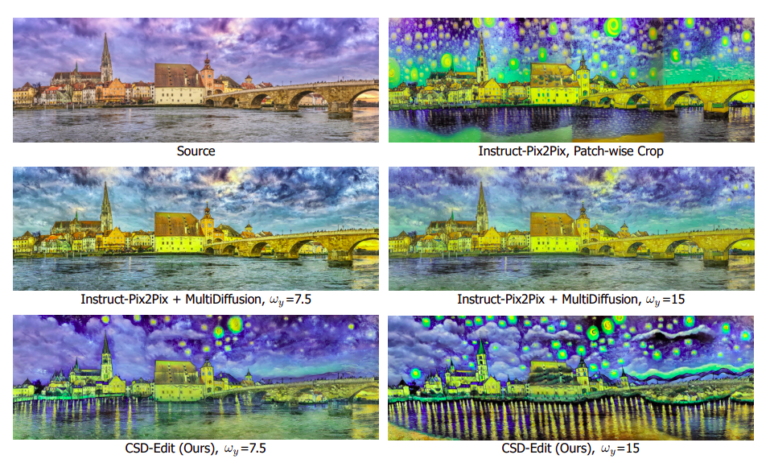

However, the generative sampling method employed by image diffusion models does not consider consistency, leading to a lack of guarantees regarding synthesis or editing across a group of pictures. As a result, when these models are applied to complex data without accounting for consistency, the outcome may appear less coherent, as evident in Figure 1 (Patch-wise Crop), where stitched photos are noticeable.

Similar issues have been observed in video editing. To address this, subsequent research has proposed using picture diffusion models to ensure video-specific temporal consistency. In this regard, the researchers introduce a novel technique called Score Distillation Sampling (SDS), which harnesses the generative potential of text-to-image diffusion models to optimize differentiable operators. By condensing the learned diffusion density scores, SDS formulates generative sampling as an optimization problem. Previous studies have demonstrated SDS’s effectiveness in generating 3D objects from text using Neural Radiance Fields priors, which assume coherent geometry in 3D space. However, its applicability to consistent visual synthesis across other modalities remains unexplored.

To unlock the full potential of the text-to-image diffusion model for reliable visual synthesis, the authors propose Collaborative Score Distillation (CSD), an efficient and straightforward technique. CSD capitalizes on Stein variational gradient descent (SVGD) to extend the capabilities of SDS. By sharing information among numerous samples generated by diffusion models, CSD achieves inter-sample consistency. Additionally, they introduce CSD-Edit, a powerful technique for consistent visual editing that combines CSD with the instruction-guided picture diffusion model, Instruct-Pix2Pix.

The researchers demonstrate the versatility of their approach through various applications such as panorama picture editing, video editing, and 3D scene reconstruction. With CSD-alter, panoramic images can be seamlessly modified while maintaining spatial consistency through the maximization of multiple picture patches. Moreover, their method achieves an optimal balance between instruction accuracy and source-target image consistency, outperforming previous approaches. In the realm of video editing, CSD-Edit ensures temporal consistency by optimizing multiple frames, leading to video edits that are frame-consistent over time. Furthermore, CSD-Edit enables the generation and editing of 3D scenes, promoting uniformity across different viewpoints.

Conclusion:

The introduction of collaborative score distillation (CSD) and its integration with text-to-image diffusion models represents a significant advancement in the market for visual synthesis. This innovative technique allows for the consistent generation of high-quality images across various complex modalities, including video and 3D scenes. By enhancing the capabilities of diffusion models without requiring major changes, CSD opens up new possibilities for industries such as entertainment, virtual reality, and creative content generation. With improved image synthesis and editing techniques, businesses can leverage this technology to deliver more engaging and realistic visual experiences to their customers, thus gaining a competitive edge in the market.