TL;DR:

- Researchers at CMU propose GILL, an approach that combines Large Language Models (LLMs) with image encoder and decoder models.

- GILL enables LLMs to process mixed image and text inputs, producing coherent and readable outputs.

- It fine-tunes a small number of parameters using image-caption pairings to achieve seamless embedding space transfer.

- The method allows LLMs to generate unique images and retrieve images from a dataset.

- GILL outperforms baseline generation models, excelling in longer-form text and dialogue-conditioned image generation.

Main AI News:

The launch of OpenAI’s groundbreaking GPT-4 has brought multimodality to the forefront of Large Language Models (LLMs). Unlike its predecessor, GPT-3.5, which solely processed textual inputs for the renowned ChatGPT, GPT-4 now accepts both text and images as inputs. This exciting development has spurred researchers at Carnegie Mellon University to propose an innovative approach known as Generating Images with Large Language Models (GILL). Their focus lies in expanding multimodal language models to generate stunning, one-of-a-kind images.

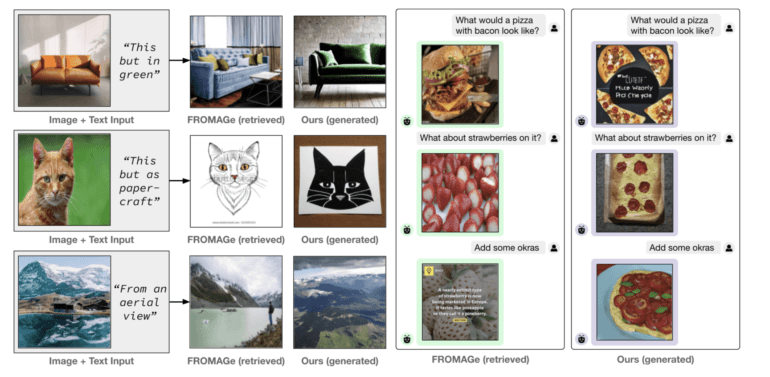

GILL revolutionizes the processing of inputs that combine images and text, enabling it to produce text, retrieve images, and even create entirely new images. The brilliance behind GILL lies in its ability to seamlessly transfer the output embedding space of a frozen text-only LLM into that of a frozen image-generating model. Unlike conventional methods that require interleaved image-text data, GILL achieves this feat by fine-tuning a small set of parameters using image-caption pairings.

The researchers emphasize that GILL effectively combines large language models for text with pre-trained image encoding and decoding models. The result is a powerful tool with a wide array of multimodal capabilities, including image retrieval, unique image generation, and engaging multimodal dialogue. This fusion of modalities is achieved by mapping their respective embedding spaces, leading to coherent and readable outputs.

The backbone of this method lies in an efficient mapping network that grounds the LLM to a text-to-image generation model, yielding exceptional performance in picture generation. By converting hidden text representations into the visual models’ embedding space, GILL utilizes the LLM’s robust text representations to produce aesthetically consistent outputs.

A remarkable feature of GILL is its dual ability to either retrieve images from a specified dataset or generate entirely new ones. At the time of inference, the model intelligently decides whether to produce or obtain an image, utilizing a learned decision module that is conditional on the LLM’s hidden representations. Notably, this approach is computationally efficient, eliminating the need to run the image generation model during training.

In comparison to baseline generation models, GILL exhibits superior performance, especially for tasks requiring longer and more sophisticated language. It outperforms the Stable Diffusion method in handling longer-form text, including dialogue and discourse. Furthermore, GILL excels in dialogue-conditioned image generation compared to non-LLM-based generation models, capitalizing on its multimodal context to create images that precisely match the given text. Unlike traditional text-to-image models limited to processing textual input, GILL effortlessly handles interleaved image-text inputs.

Conclusion:

The introduction of GILL represents a significant advancement in multimodal AI. This method’s ability to combine LLMs with image encoders and decoders opens up new possibilities for generating images and processing mixed image-text inputs. As a result, this technology holds tremendous potential in various markets, including creative content generation, interactive AI applications, and enhanced image-based search and retrieval systems. Businesses should keep a close eye on GILL’s developments, as it may become a transformative tool in revolutionizing multimodal tasks and improving user experiences.