TL;DR:

- Researchers from Cambridge and UCLA introduce DC-Check, a data-centric AI framework.

- DC-Check emphasizes the significance of data in developing reliable ML systems.

- It provides an actionable checklist-style approach for critical thinking at every pipeline stage.

- Data-centric AI views data as key to building trustworthy ML systems, complementing model-centric approaches.

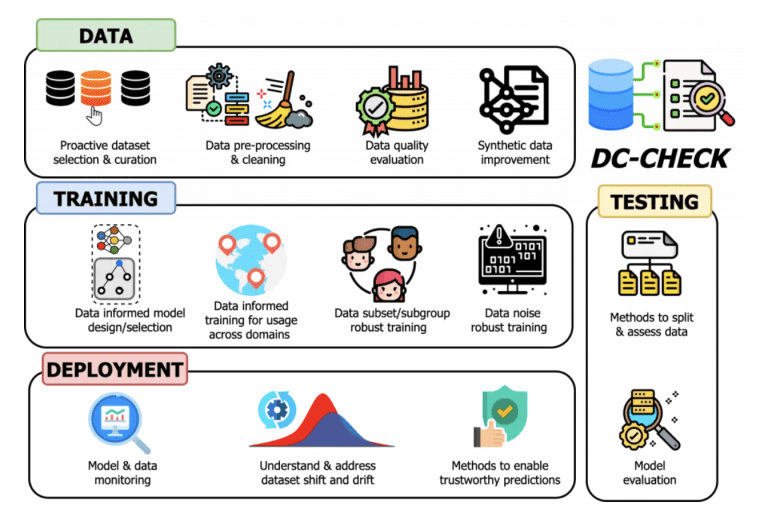

- DC-Check covers four stages: Data, Training, Testing, and Deployment, addressing challenges and promoting data quality.

- The framework targets practitioners, researchers, decision-makers, regulators, and policymakers.

- DC-Check aims to encourage the widespread adoption of data-centric AI for more dependable ML systems.

Main AI News:

Revolutionizing Machine Learning for the Future

Advances in machine learning (ML) algorithms have transformed industries from e-commerce and finance to manufacturing and medicine. Even so, building real-world ML systems in complex data settings remains difficult, and high-profile failures attributed to biases in data or algorithms have drawn attention to the problem.

A New Paradigm for AI: Data-Centric Approach

To address this problem, researchers from the University of Cambridge and UCLA have introduced DC-Check, a data-centric AI framework. DC-Check focuses on the data used to train machine learning algorithms. It takes an actionable checklist-style approach, giving practitioners and researchers a set of questions and practical tools that encourage critical thinking about the impact of data at every stage of the ML pipeline: Data, Training, Testing, and Deployment.

Uplifting the Value of Data

Traditionally, machine learning has followed a model-centric approach, investing heavily in model iteration and improvement to achieve the best predictive performance. The data-centric AI philosophy advocated by DC-Check goes further. It treats data as the foundation of reliable ML systems and seeks to systematically improve the data those systems use. In their research paper, the authors define it as follows: “Data-centric AI encompasses methods and tools to systematically characterize, evaluate, and monitor the underlying data used to train and evaluate models.” By prioritizing data, the goal is to build AI systems that are not only highly predictive but also reliable and trustworthy.
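To make "characterize" in that definition concrete, here is a minimal sketch of a data report that counts missing values, exact duplicate rows, and label balance. It is an illustration only and is not taken from the DC-Check paper or its tools; the function name `characterize`, the field names, and the toy records are all assumptions.

```python
from collections import Counter


def characterize(rows, label_key):
    """Summarize missing values, exact duplicates, and label balance.

    `rows` is a list of dicts (one per record); a value of None counts
    as missing. This is a hypothetical helper, not a DC-Check API.
    """
    n = len(rows)
    if n == 0:
        raise ValueError("rows must not be empty")

    # Collect every field name that appears in any record.
    keys = sorted({k for row in rows for k in row})

    # Fraction of records in which each field is missing.
    missing_rate = {
        k: sum(1 for row in rows if row.get(k) is None) / n for k in keys
    }

    # Count records that exactly repeat an earlier record.
    seen = Counter(tuple(row.get(k) for k in keys) for row in rows)
    duplicate_rows = sum(count - 1 for count in seen.values())

    # Distribution of the target label.
    label_counts = dict(Counter(row.get(label_key) for row in rows))

    return {
        "n_rows": n,
        "missing_rate": missing_rate,
        "duplicate_rows": duplicate_rows,
        "label_counts": label_counts,
    }


# Toy records, invented for illustration.
rows = [
    {"age": 34, "income": 52000, "label": 1},
    {"age": None, "income": 48000, "label": 0},
    {"age": 34, "income": 52000, "label": 1},
    {"age": 51, "income": None, "label": 0},
]
report = characterize(rows, "label")
print(report)
```

A report like this is only a starting point; which checks matter depends on the dataset and the task.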

The Unmet Need: Standardized Processes

Although data-centric AI has attracted considerable interest, there has been no standardized process for designing such systems, which has made the approach difficult for practitioners to apply in their work. DC-Check is presented as the first standardized framework for engaging with data-centric AI. Its checklist gives users questions to consider about the impact of data at each pipeline stage, along with practical tools and techniques, and it highlights open challenges for the research community to address.

Navigating the ML Pipeline with DC-Check

DC-Check covers the four stages of the machine learning pipeline: Data, Training, Testing, and Deployment. For the Data stage, the framework advocates proactive data selection, data curation, data quality evaluation, and the use of synthetic data to improve the quality of the data used for model training. Under Training, it emphasizes data-informed model design, domain adaptation, and group-robust training. Testing considerations include informed data splits, targeted metrics, stress tests, and evaluation on subgroups. Finally, in the Deployment stage, DC-Check emphasizes data monitoring, feedback loops, and trustworthiness methods such as uncertainty estimation.
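As one illustration of the Testing-stage items above, the sketch below compares overall accuracy with accuracy on each subgroup. It is a generic example, not DC-Check's own tooling; the function name `accuracy_by_group`, the group labels, and the toy predictions are assumptions made for the example.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return accuracy computed separately for each subgroup label."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred, and groups must have equal length")

    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)

    return {group: correct[group] / total[group] for group in total}


# Toy labels and predictions, invented for illustration.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
worst_group = min(per_group, key=per_group.get)

print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(f"group {group}: {acc:.2f}")
print(f"worst group: {worst_group}")
```

In this toy data the aggregate score hides a gap between the two groups, which is the kind of issue subgroup evaluation is meant to surface.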

A Holistic Impact

Although DC-Check primarily targets practitioners and researchers, it is also intended for organizational decision-makers, regulators, and policymakers, who can use it to make informed decisions about AI systems and their responsible integration into different domains.

Paving the Way Forward for Reliable AI

The DC-Check team hopes that adoption of the checklist will bring data-centric AI into widespread use and lead to machine learning systems that are more reliable and trustworthy. The paper is accompanied by a dedicated website that hosts the full checklist and tools, along with additional resources.

Conclusion:

DC-Check is a notable step for data-centric AI. By shifting attention to the role of data in developing machine learning systems, it aims to help organizations across industries build AI applications that are more reliable and trustworthy as well as predictive. Adopting the framework could encourage more responsible AI development and integration and help build confidence among stakeholders and customers. As demand for transparency and accountability in AI-powered solutions grows, companies that adopt practices like those in DC-Check may be better positioned to meet it.