TL;DR:

- Alibaba AI introduces Composer, a large (5 billion parameters) controllable diffusion model trained on vast (text, image) pairs.

- Compositionality, the key to image control, empowers Composer to seamlessly reassemble visual elements, offering greater control over generative image tasks.

- Composer excels in text-to-image generation, style transfer, pose transfer, virtual try-on, and more, demonstrating encouraging performance.

- The architecture enables decoding unique pictures from unseen combinations of representations, expanding the possibilities of generative models.

- With flexible editable regions and a classifier-free approach, Composer paves the way for diverse picture production and alteration tasks.

- Its zero-shot FID of 9.2 in text-to-image synthesis on the COCO dataset showcases Composer’s exceptional capabilities.

- Alibaba AI’s groundbreaking work with Composer sets new standards for AI-powered image generation and manipulation.

Main AI News:

In today’s cutting-edge landscape of AI, the ability to generate photorealistic images through text-based models has reached remarkable heights. Recent strides have been made in expanding text-to-image models, enabling customized generation by incorporating segmentation maps, scene graphs, drawings, depth maps, and inpainting masks or fine-tuning pretrained models with subject-specific data. Yet, when it comes to real-world applications, designers still seek greater control over these models. The demand for generative models that can reliably produce images while simultaneously fulfilling semantic, stylistic, and color requirements is a prevailing challenge.

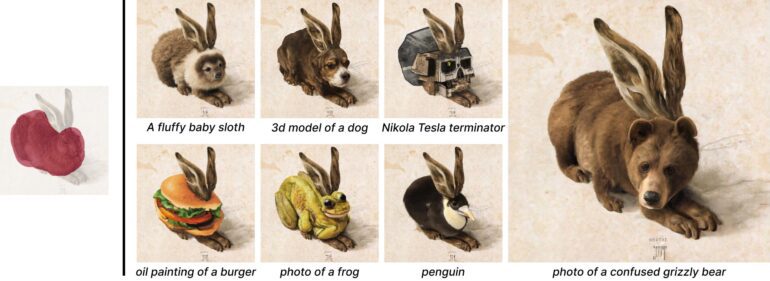

Enter Composer, an extraordinary creation by researchers from Alibaba China. Comprising an impressive 5 billion parameters, this controllable diffusion model is trained on vast volumes of (text, image) pairs. The team proposes that compositionality, as opposed to mere conditioning, holds the key to precise image formation. This notion introduces numerous possibilities, dramatically expanding the control space. Comparable ideas have been investigated in the fields of language and scene comprehension, where compositionality is known as compositional generalization – the ability to recognize or create a finite number of unique combinations from a limited set of components. Leveraging this foundational concept, the researchers develop Composer, a revolutionary implementation of compositional generative models. These generative models possess the remarkable ability to seamlessly reassemble visual elements, birthing entirely new pictures – a testament to their compositional prowess. The underlying framework harnesses a multi-conditional diffusion model with a UNet backbone, forming the backbone of Composer’s architecture.

The training process for Composer involves two distinctive phases. The first is the decomposition phase, where computer vision algorithms or pretrained models break down batches of images into individual representations. Subsequently, in the composition phase, Composer optimizes to reconstruct images from these representation subsets. The result? A decoder that can deftly decode unique pictures from unseen combinations of representations. These representations may originate from multiple sources and might even be inherently incompatible with one another. Remarkably, Composer achieves impressive efficacy despite its conceptual simplicity, offering user-friendly accessibility and yielding encouraging performance across an array of conventional and previously unexplored image generation and manipulation tasks.

The scope of application for Composer is wide-ranging. It excels in text-to-image generation, multi-modal conditional image generation, style transfer, pose transfer, image translation, virtual try-on, interpolation, and image variation from various angles. Additionally, it enables image reconfiguration through modifying sketches, dependent image translation, and image translation tasks. A notable feature of Composer is its ability to restrict the editable region to a user-specified area for all the aforementioned operations, granting greater flexibility compared to conventional inpainting methods. This is achieved through the introduction of an orthogonal representation of masking, effectively preventing pixel modification outside the designated region.

Despite undergoing multitask training, Composer showcases remarkable performance with a zero-shot FID of 9.2 in text-to-image synthesis on the COCO dataset, utilizing captions as the criterion. This outstanding result attests to its capacity to deliver exceptional outcomes. Furthermore, the decomposition-composition paradigm reveals that the control space of generative models can be greatly enhanced when conditions are composable, rather than individually employed. As a result, numerous conventional generative tasks can be reimagined using the Composer architecture, unveiling previously undiscovered generative capabilities and inspiring further research into diverse decomposition techniques to achieve heightened controllability.

Incorporating a classifier-free and bidirectional guidance approach, the researchers demonstrate various methods for employing Composer across diverse picture production and alteration tasks, thereby providing invaluable references for future studies. Mindful of the potential risks, the team aims to carefully examine how Composer can mitigate the danger of abuse and may even provide a filtered version before releasing it to the public.

Conclusion:

Alibaba AI’s Composer represents a significant breakthrough in the market of AI-powered image generation and manipulation. With its revolutionary approach to compositionality, the model offers designers unprecedented control over generative tasks, enabling the production of highly customized and realistic images. As businesses seek more sophisticated and tailored visuals for various applications, Composer’s capabilities open up new opportunities for industries like advertising, design, e-commerce, and entertainment. The extensive range of tasks that Composer excels in showcases its versatility, making it a powerful tool for creative professionals and businesses alike. As the technology matures and becomes more accessible, we can expect to witness a surge in innovative applications and products that leverage the remarkable capabilities of Composer.