TL;DR:

- KAIST and Scatter Lab introduce FaceCLIPNeRF, a revolutionary Text-Driven Manipulation Pipeline for 3D Faces using Deformable NeRF.

- NeRF’s progress in reconstructing 3D scenes lacked fine-grained control over facial expressions, but FaceCLIPNeRF addresses this limitation.

- Previous face editing approaches required laborious procedures, while FaceCLIPNeRF enables text-driven modifications with just 300 training frames.

- Face-specific implicit representation techniques encode facial expressions with high fidelity, but they demand large training sets.

- FaceCLIPNeRF uses multiple spatially variable latent codes to express scene deformations, overcoming the “linked local attribute problem.”

- The approach aligns latent codes with target text in the CLIP embedding space, achieving unparalleled control over 3D face manipulation.

Main AI News:

In the ever-evolving landscape of 3D digital human content, the ability to manipulate 3D face representations with ease has been a critical focus. Recent advancements in Neural Radiance Field (NeRF) technology have made significant strides in reconstructing 3D scenes. However, existing manipulative techniques often revolve around rigid geometry or color adjustments, lacking the finesse required for precise control over facial expressions. Recognizing this limitation, a groundbreaking method has emerged from the collaborative efforts of KAIST and Scatter Lab, unleashing the power of FaceCLIPNeRF – a Text-Driven Manipulation Pipeline for 3D Faces using Deformable NeRF.

The prevailing challenge lies in refining the techniques for jobs that demand meticulous handling of facial expressions. A previous study proposed a regionally controlled face editing approach, but it involved an arduous process of collecting user-annotated masks from various face portions in selected training frames. Subsequently, human attribute control was required to achieve the desired alterations. Such laborious procedures hindered efficiency and demanded extensive resources.

To overcome these obstacles, researchers devised an innovative approach that leverages face-specific implicit representation techniques. By employing morphable face models as priors, observed facial expressions were accurately encoded with high fidelity. However, the drawback of this method was the requirement for large training sets, encompassing a wide range of facial expressions spanning approximately 6000 frames. The data gathering and manipulation processes, thus, became unwieldy.

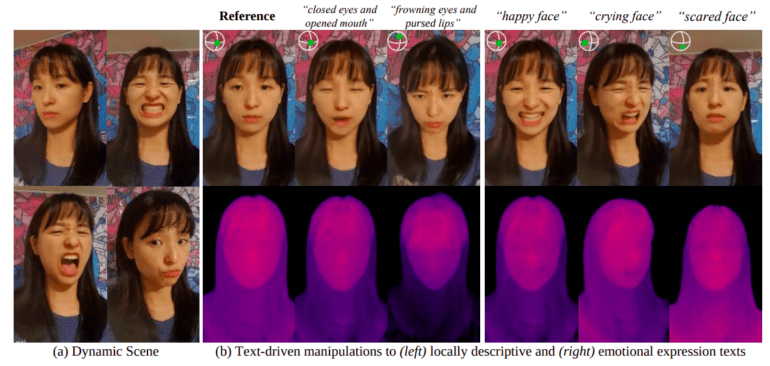

The breakthrough emerged when the research team chose to focus on a dynamic portrait video comprising around 300 training frames, each containing various face deformation instances. This dynamic range allowed for the realization of a text-driven modification process, as depicted in Figure 1.

The core of their approach lies in the utilization of HyperNeRF, a technique that learns and isolates observed deformations from a canonical space before facilitating face deformation control. A common latent code conditional implicit scene network and per-frame deformation latent codes are imparted across the training frames. Notably, the researchers’ key discovery was the adoption of multiple spatially variable latent codes to express scene deformations for manipulation tasks. Departing from the conventional approach of seeking a single latent code to encode a desired facial distortion, they identified and addressed the “linked local attribute problem.” This obstacle stemmed from the fact that a single latent code could not adequately convey facial expressions requiring a blend of local deformations, a common occurrence in many cases.

To surmount this challenge, the team developed a sophisticated solution. Initially, they compiled all observed deformations into a collection of anchor codes, subsequently instructing a Multilayer Perceptron (MLP) to combine these anchor codes to produce numerous position-conditional latent codes. The latent codes’ reflectivity on the visual characteristics of a target text was achieved by optimizing the produced pictures of the latent codes to align closely with the target text in the CLIP embedding space.

Conclusion:

The introduction of FaceCLIPNeRF represents a game-changing advancement in the market of 3D digital human content. This innovative Text-Driven Manipulation Pipeline empowers businesses and creators to efficiently manipulate 3D face representations with remarkable finesse and precision. By bridging the gap between NeRF technology and facial expression control, FaceCLIPNeRF opens up new avenues for immersive digital experiences and transformative content creation. Its ability to achieve superior results with a smaller training dataset translates into cost-effective solutions and increased productivity, making it a highly desirable tool for various industries, including entertainment, gaming, virtual reality, and more. As the demand for realistic and interactive digital human content continues to grow, businesses equipped with FaceCLIPNeRF will be at the forefront of innovation, captivating audiences with captivating and lifelike experiences.