TL;DR:

- Nvidia introduces its next-generation GH200 Grace Hopper platform for AI.

- The platform centers on the world’s first HBM3e processor, with HBM3e memory that is 50% faster than current HBM3.

- A dual configuration provides up to 3.5x more memory capacity and 3x more bandwidth than the current generation.

- The extra capacity lets developers run bigger Large Language Models (LLMs).

- CEO Jensen Huang highlights the technology’s role in scaling out data centers.

- Huang says the inference cost of large language models will drop significantly.

- Nvidia’s valuation reached $1 trillion in May on strong demand for its AI GPU chips.

- Rivalry intensifies as AMD unveils MI300X GPU chip to challenge Nvidia.

- Nvidia reports Q2 earnings on August 23; analysts remain optimistic about its long-term potential.

Main AI News:

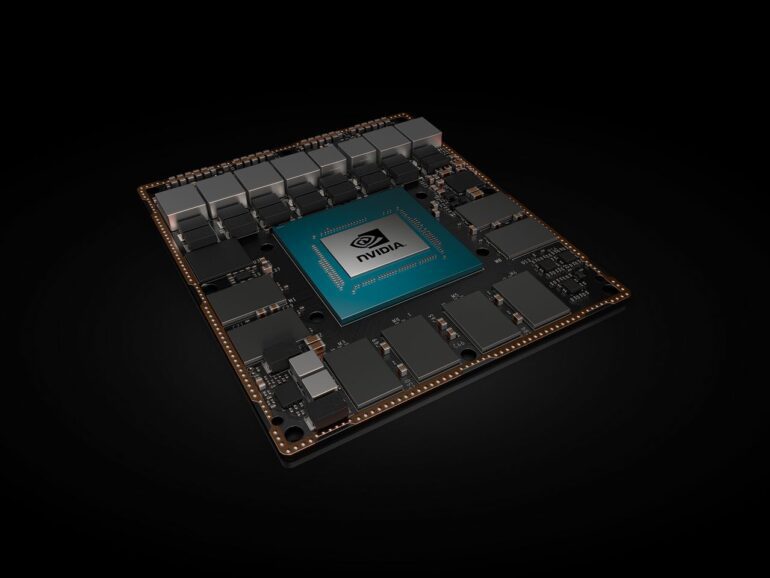

Nvidia Corp. (NVDA) has introduced a next-generation chip platform as it works to keep its competitive edge in the fast-growing AI sector. On Tuesday, the Santa Clara, California-based company unveiled its GH200 Grace Hopper platform, its latest step in the race against rivals in AI hardware.

At the heart of the platform is what Nvidia calls the world’s first HBM3e processor. HBM3e memory is 50% faster than the current HBM3 technology, and a dual configuration offers up to 3.5 times the memory capacity and three times the bandwidth of the current-generation offering. That extra headroom lets developers run larger Large Language Models (LLMs), expanding what AI-driven applications can do.
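To make the memory-capacity point concrete, here is a minimal Python sketch that estimates how many model parameters can fit in a given amount of memory. It assumes decimal gigabytes (1 GB = 1e9 bytes) and 2 bytes per parameter for 16-bit weights, and it counts weights only. The function name `max_params_billions` is hypothetical, and the 282 GB input (the HBM3e capacity Nvidia quoted for the dual configuration) is used purely as an illustrative value. This is not an Nvidia sizing tool.

```python
# Rough, weights-only estimate of how many LLM parameters fit in a given
# amount of memory. Illustrative sketch with hypothetical names; not an Nvidia tool.


def max_params_billions(
    memory_gb: float,
    bytes_per_param: float = 2.0,  # assumption: 16-bit (FP16/BF16) weights
    reserved_fraction: float = 0.0,  # share of memory set aside for KV cache, activations, etc.
) -> float:
    """Return the largest parameter count (in billions) whose weights fit.

    Uses decimal gigabytes (1 GB = 1e9 bytes). Apart from the optional
    reserved_fraction, everything except the model weights is ignored.
    """
    if memory_gb <= 0 or bytes_per_param <= 0:
        raise ValueError("memory_gb and bytes_per_param must be positive")
    if not 0.0 <= reserved_fraction < 1.0:
        raise ValueError("reserved_fraction must be in [0, 1)")
    usable_bytes = memory_gb * 1e9 * (1.0 - reserved_fraction)
    return usable_bytes / bytes_per_param / 1e9


if __name__ == "__main__":
    # Illustrative input: 282 GB, compared at 16-bit and 8-bit weight widths.
    for label, width in [("16-bit", 2.0), ("8-bit", 1.0)]:
        estimate = max_params_billions(282, width)
        print(f"{label}: ~{estimate:.0f}B parameters (weights only)")
```

The results are upper bounds. Real inference also needs memory for the KV cache and activations, which is why the sketch exposes `reserved_fraction` to shave the estimate.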

Speaking at the SIGGRAPH conference, CEO Jensen Huang said the new technology will help the world’s data centers scale out to meet AI demand. He also said the inference cost of large language models will drop significantly. Inference is the generative phase that follows training, when a trained LLM produces output, and it is where Nvidia says the new platform will deliver major efficiency gains.

The launch follows a surge of enthusiasm for AI. In May, the company’s valuation reached $1 trillion, driven largely by soaring demand for its Graphics Processing Unit (GPU) chips. Expectations that data center architecture will shift toward accelerated computing have also made Nvidia one of the standout performers of 2023.

Nvidia’s success has, however, intensified competition. In June, rival chipmaker Advanced Micro Devices (AMD) unveiled its own GPU, the MI300X, in a bid to challenge Nvidia’s market dominance. The move underscores the escalating race for innovation and market share.

Nvidia is scheduled to release its second-quarter earnings report on August 23, and analysts at Bank of America (BofA) expect a more muted outcome than the previous quarter’s blowout. In that quarter, the company upended expectations by forecasting second-quarter revenue of about $11 billion, roughly 50% above analysts’ estimates. Despite the expected moderation, analysts remain optimistic about Nvidia’s long-term potential. Mizuho predicts the company could reach $300 billion in revenue by 2027, more than ten times the $27 billion in revenue it reported for the preceding year. As the AI landscape evolves, Nvidia continues to pursue technological innovation and sustained market leadership.
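As a quick check on the scale of Mizuho’s projection, dividing the 2027 figure by the $27 billion reported for the preceding year gives the implied multiple:

$$
\frac{\$300\ \text{billion}}{\$27\ \text{billion}} \approx 11.1
$$

In other words, the projection implies roughly an elevenfold increase, which is consistent with describing it loosely as a tenfold jump.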

Conclusion:

Nvidia’s unveiling of the GH200 Grace Hopper platform is a strategic move to capture the escalating demand for generative AI. With greater memory capacity and bandwidth and the prospect of lower inference costs, Nvidia appears well positioned to maintain its market prominence amid intensifying competition.