TL;DR:

- AvatarVerse, a collaboration between ByteDance and CMU, introduces a novel AI pipeline for generating high-quality 3D avatars.

- Traditional manual construction of avatars is labor-intensive; AvatarVerse aims to automate the process using natural language descriptions and pose guidance.

- Existing techniques struggle with imaginative avatars from complex text prompts, but AvatarVerse overcomes this limitation.

- AvatarVerse leverages ControlNet and SDS loss for precise view correspondence, eradicating the Janus problem and enabling pose control.

- High-resolution generation techniques enhance realism and detail while minimizing coarseness in avatars.

- AvatarVerse’s contributions encompass automated avatar creation, pose-awareness, elevated realism, and superior performance.

Main AI News:

3D avatars have become central to sectors ranging from gaming and social media to augmented reality and human-computer interaction. Building high-quality 3D avatars is difficult, and doing it efficiently has attracted considerable research interest. Traditionally, avatars have been constructed by hand, with skilled artists investing many hours in each model. Researchers from ByteDance and CMU now introduce AvatarVerse, an AI pipeline that generates high-quality 3D avatars from a combination of text descriptions and pose guidance.

The Transformative Power of AvatarVerse

The goal is clear: to automate the production of high-quality 3D avatars from natural language descriptions alone. This remains an open research problem, and solving it promises substantial savings in time and resources. Existing approaches that reconstruct avatars from multi-view video or reference photos fall short when asked to produce imaginative avatars from complex text prompts, because the visual priors taken from the video or reference images limit what they can generate.

Enter diffusion models, celebrated for their prowess in generating 2D images from text prompts. Yet the transition to 3D faces hurdles due to a dearth of diverse and comprehensive 3D datasets. Recent work has paired these 2D models with Neural Radiance Fields, but challenges remain in producing avatars with varied poses, appearances, and shapes. Conventional approaches also suffer from the Janus problem, in which a generated 3D model shows duplicated front-facing features, such as several faces, when viewed from different angles. AvatarVerse addresses this problem directly.

The AvatarVerse Strategy: Precision Redefined

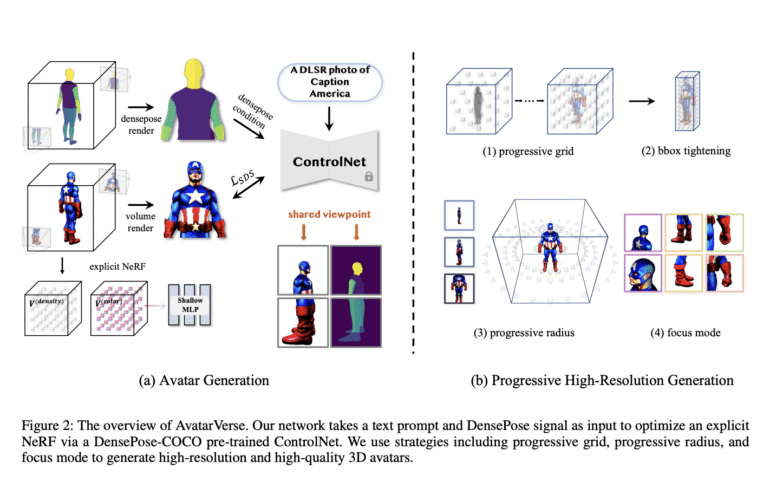

ByteDance and CMU introduce a novel framework, AvatarVerse, for creating robust, high-quality 3D avatars. Its foundation is a newly trained ControlNet, fitted on more than 800,000 human DensePose images. On top of this ControlNet, the researchers apply an SDS (Score Distillation Sampling) loss conditioned on the 2D DensePose signal. Because every rendered view is guided by the matching DensePose image, the 2D views stay precisely aligned with the 3D model, which resolves a longstanding challenge.

Crucially, the Janus problem fades into oblivion, clearing the path for unhindered avatar generation. Pose control, a critical feature, finds its home within this innovative approach. Avatars birthed through AvatarVerse seamlessly align with the joints of the SMPL model, fortified by the discerning signals from DensePose. This synergy not only simplifies skeletal binding but also amplifies control.
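To make the DensePose-conditioned SDS idea more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: `make_toy_denoiser` is a hypothetical stand-in for the DensePose-conditioned ControlNet, flat lists of floats stand in for rendered images, and the weighting `1 - alpha_bar` is one common choice rather than a value taken from the article. The sketch only shows the general shape of an SDS update: add noise to a rendered view, ask the conditioned denoiser to predict that noise, and use the weighted difference as the gradient.

```python
import math
import random


def make_toy_denoiser(pose_target, alpha_bar):
    """Hypothetical stand-in for a DensePose-conditioned denoiser.

    When conditioned, it predicts the noise separating the noisy image
    from `pose_target`; unconditioned, it uses an all-zero image instead.
    """
    def predict_noise(noisy, conditioned):
        target = pose_target if conditioned else [0.0] * len(pose_target)
        return [
            (x - math.sqrt(alpha_bar) * t) / math.sqrt(1.0 - alpha_bar)
            for x, t in zip(noisy, target)
        ]
    return predict_noise


def sds_gradient(rendered, predict_noise, alpha_bar, rng, guidance_scale=7.5):
    """Return an SDS-style gradient with respect to the rendered pixels."""
    # Forward diffusion: blend the rendered view with Gaussian noise.
    noise = [rng.gauss(0.0, 1.0) for _ in rendered]
    noisy = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
        for x, n in zip(rendered, noise)
    ]
    # Classifier-free guidance between conditioned and unconditioned predictions.
    eps_cond = predict_noise(noisy, True)
    eps_uncond = predict_noise(noisy, False)
    weight = 1.0 - alpha_bar  # assumed weighting w(t)
    return [
        weight * (u + guidance_scale * (c - u) - n)
        for c, u, n in zip(eps_cond, eps_uncond, noise)
    ]
```

In a full pipeline this gradient would be backpropagated through the renderer into the 3D representation. Here, stepping the rendered pixels against the gradient simply moves them toward the pose-conditioned target, which is the behavior the conditioning is meant to enforce for every viewpoint.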

A Leap Beyond: Elevated Realism and Detail

To improve realism, the researchers introduce a progressive high-resolution generation technique that refines the avatar from coarse to fine, adding realism and detail to local geometry. They also apply a smoothness loss to the density voxel grid of the explicit Neural Radiance Field, so that density varies gradually between neighboring voxels. This counters coarseness and yields cleaner geometry in the generated avatars.
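As a rough illustration of what a smoothness loss on a density voxel grid can look like, the sketch below penalizes squared differences between axis-adjacent voxels. This is an assumption made for illustration: the article does not give the exact formula, and the function name `smoothness_loss` and the nested-list grid layout are hypothetical.

```python
def smoothness_loss(density):
    """Mean squared difference between axis-adjacent voxels of a 3D grid.

    `density` is a nested list indexed as density[x][y][z].
    """
    nx, ny, nz = len(density), len(density[0]), len(density[0][0])
    total, count = 0.0, 0
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                here = density[i][j][k]
                # Compare with the next voxel along each axis, if it exists.
                for di, dj, dk in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
                    a, b, c = i + di, j + dj, k + dk
                    if a < nx and b < ny and c < nz:
                        total += (density[a][b][c] - here) ** 2
                        count += 1
    return total / count if count else 0.0
```

A constant grid gives a loss of zero, while an isolated density spike raises it, so minimizing this term alongside the main objective discourages the noisy, blocky geometry the paragraph above refers to.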

A New Dawn in 3D Avatar Generation

The contributions of AvatarVerse are all-encompassing and transformational:

- AvatarVerse Unveiled: The innovation that defines a new era – crafting superior 3D avatars from mere textual descriptions and human stance references.

- DensePose-Conditioned Score Distillation Sampling Loss: The breakthrough methodology that empowers the creation of pose-aware 3D avatars, eradicating the Janus problem and bolstering system stability.

- Elevated Realism through Methodical Generation: A step-by-step journey from coarse to fine, culminating in an exceptional 3D avatar replete with intricate details and accessories.

- Performance that Prevails: AvatarVerse’s supremacy shines through empirical assessments and user feedback, showcasing its prowess in generating high-fidelity 3D avatars.

The Future is AvatarVerse

With AvatarVerse, the researchers set a new bar for reliable, high-quality 3D avatar generation, pairing quality with efficiency. Demos of the technique are available on the project's GitHub repository, inviting further innovation and exploration in the world of 3D avatars.

Conclusion:

AvatarVerse marks a groundbreaking advancement in the realm of 3D avatar creation. By seamlessly merging natural language descriptions and pose guidance, it not only streamlines the traditionally laborious process but also propels the field into new dimensions of creativity and realism. This innovation is poised to disrupt industries like gaming, social media, and virtual reality, offering a reliable and efficient solution that caters to a diverse range of applications. The meticulous research, tangible results, and user-supported assessments demonstrate a paradigm shift that sets a new standard for avatar generation, thereby shaping a market where quality and efficiency converge harmoniously.