TL;DR:

- OpenAI introduces GPTBot, a web crawler that gathers data to improve its language models.

- GPTBot collects public web content for AI training, which OpenAI says can improve accuracy and safety.

- OpenAI says crawled content is filtered to remove paywalled sources, personal information, and policy-violating text.

- Websites can block GPTBot via robots.txt or limit which parts of a site it may crawl.

- Well-known publications such as Clarkesworld and The Verge have opted to block GPTBot.

- Legal concerns persist over training AI on content without explicit permission.

- OpenAI’s GPTBot release signifies a bold strategic move in the AI landscape.

Main AI News:

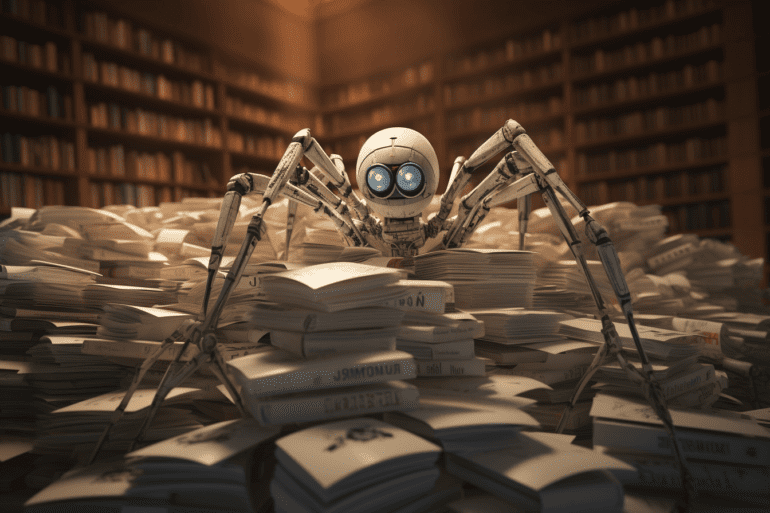

In a strategic move to advance its language models, OpenAI has launched a new web crawler, GPTBot, which gathers web content that may be used to improve future versions of its AI systems, such as GPT-4, the model that powers ChatGPT.

OpenAI pitches access as a contribution: according to its announcement, allowing GPTBot to crawl a site can help AI models become more accurate and improve their general capabilities and safety.

OpenAI also stresses that GPTBot is meant to operate responsibly. According to the company, crawled pages are filtered to remove sources that require paywall access, that are known to gather personally identifiable information, or that contain text violating OpenAI's policies, measures it presents as protecting data privacy and content integrity.

OpenAI also gives site owners a way to opt out. They can block GPTBot by adding a rule for its user agent to their site's robots.txt file, the standard file that tells web crawlers which pages they may visit. Administrators can also let GPTBot into some directories while excluding it from others. In addition, OpenAI publishes the IP address ranges GPTBot crawls from, so it can be blocked at the network level as well.
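To make the opt-out concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser` to check how two robots.txt files would apply to GPTBot: one that blocks it site-wide (the `User-agent: GPTBot` / `Disallow: /` pattern OpenAI documents) and one that admits it to one directory while excluding another. The directory names, example.com URLs, and helper names are hypothetical. Python's parser applies the first matching rule in file order, which is an assumption that may not match how GPTBot itself resolves conflicting rules. The IP check uses RFC 5737 documentation ranges as placeholders; they are not OpenAI's published GPTBot ranges.

```python
import ipaddress
from urllib.robotparser import RobotFileParser

# robots.txt that blocks GPTBot everywhere but leaves other crawlers unrestricted
# (an empty Disallow line means "allow everything").
BLOCK_GPTBOT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow:
"""

# robots.txt that lets GPTBot into one directory but not another.
# The directory names are hypothetical placeholders.
PARTIAL_GPTBOT = """\
User-agent: GPTBot
Allow: /directory-1/
Disallow: /directory-2/
"""

# Placeholder CIDR blocks from the RFC 5737 documentation ranges.
# These are NOT OpenAI's real GPTBot ranges; substitute the published list.
PLACEHOLDER_GPTBOT_RANGES = ["192.0.2.0/24", "198.51.100.0/24"]


def crawler_allowed(robots_txt: str, url: str, agent: str = "GPTBot") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text.

    urllib.robotparser applies the first rule whose path prefix matches,
    in the order the rules appear in the file.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


def ip_in_ranges(ip: str, cidrs: list[str]) -> bool:
    """Return True if `ip` falls inside any of the CIDR blocks in `cidrs`."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in cidrs)


if __name__ == "__main__":
    print(crawler_allowed(BLOCK_GPTBOT, "https://example.com/articles/1"))  # False
    print(crawler_allowed(BLOCK_GPTBOT, "https://example.com/articles/1",
                          agent="Googlebot"))  # True
    print(crawler_allowed(PARTIAL_GPTBOT, "https://example.com/directory-1/page.html"))  # True
    print(crawler_allowed(PARTIAL_GPTBOT, "https://example.com/directory-2/page.html"))  # False
    print(ip_in_ranges("192.0.2.17", PLACEHOLDER_GPTBOT_RANGES))  # True
```

In practice a site owner only needs to edit robots.txt; running the rules through a parser like this is simply a quick way to confirm they block or admit the paths intended before deploying them.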

The crawler also marks a shift in how OpenAI sources training data for its large language models. The models behind ChatGPT were trained on data up to a September 2021 cutoff, and OpenAI acknowledges that data gathered before that cutoff cannot be retroactively removed. Blocking the new crawler, however, gives websites a way to keep their content out of future training data.

Website owners were quick to respond. Prominent outlets such as Clarkesworld, a well-known science fiction magazine, and the tech publication The Verge have already blocked GPTBot, and tutorials explaining how to block the bot are multiplying online.

The controversy highlights a tension. Web crawlers, especially those run by Google and other search engines, are indispensable to the web because they index pages and drive traffic to websites. Using crawled content to train AI is a different matter, however, and it has raised intellectual-property concerns, reflected in the ongoing lawsuit challenging OpenAI's use of text to train its chatbot.

Launching GPTBot amid this legal turbulence suggests that OpenAI is confident in its position. Alternatively, by letting websites opt out of GPTBot's crawling, OpenAI may be acting prudently, seeking to avoid protracted legal battles and to accommodate evolving ethical expectations.

Conclusion:

OpenAI's launch of GPTBot is a strategic push to use web data to improve its language models. Its blocking and customization options give website owners some control over their content while responding to ethical concerns. Expect heightened debate about data ethics, legal ramifications, and the evolving role of web crawlers in AI training. The move underscores OpenAI's commitment to innovation but also invites closer scrutiny of where AI, content ownership, and data privacy intersect.