TL;DR:

- Nvidia unveils its next-generation GH200 Grace Hopper superchip for data centers and generative AI.

- The company shifted its focus to Arm-based server SoCs, pairing them with its GPUs in the Grace Hopper platform.

- Nvidia’s share price has risen roughly 200% this year, fueled by the growth of generative AI.

- In its dual configuration, the GH200 features 144 Arm Neoverse V-series cores and H100 Tensor Core GPUs.

- The H100 GPUs deliver at least 51 teraflops of FP32 compute; the GH200 achieves 8 petaflops of AI performance.

- Memory is upgraded to 282GB of HBM3e, which is 50% faster than HBM3.

- The GH200 can run models 3.5x larger than the previous version and is the world’s first processor with HBM3e memory.

- The Grace Hopper platform scales via Nvidia’s NVLink interconnect, with up to 1.2TB of coherent memory.

- Leading server makers are expected to introduce over 100 GH200 configurations in Q2 2024.

Main AI News:

In a strategic move to capitalize on the fast-growing field of generative AI, Nvidia has introduced its Grace Hopper superchip, engineered to power the data centers that drive generative AI and other compute-intensive workloads. While Nvidia remains committed to building Arm-based chips for a range of consumer applications, the company shifted its focus several years ago toward Arm-based server systems on chip (SoCs). The decisive step was pairing these SoCs with Nvidia’s server-class GPUs in its Grace Hopper platform, culminating in the GH200 configuration. The bet appears to have paid off: Nvidia’s share price has risen roughly 200 percent since the start of the year, driven by the surge in generative AI applications typified by the rapid rise of ChatGPT and Midjourney.

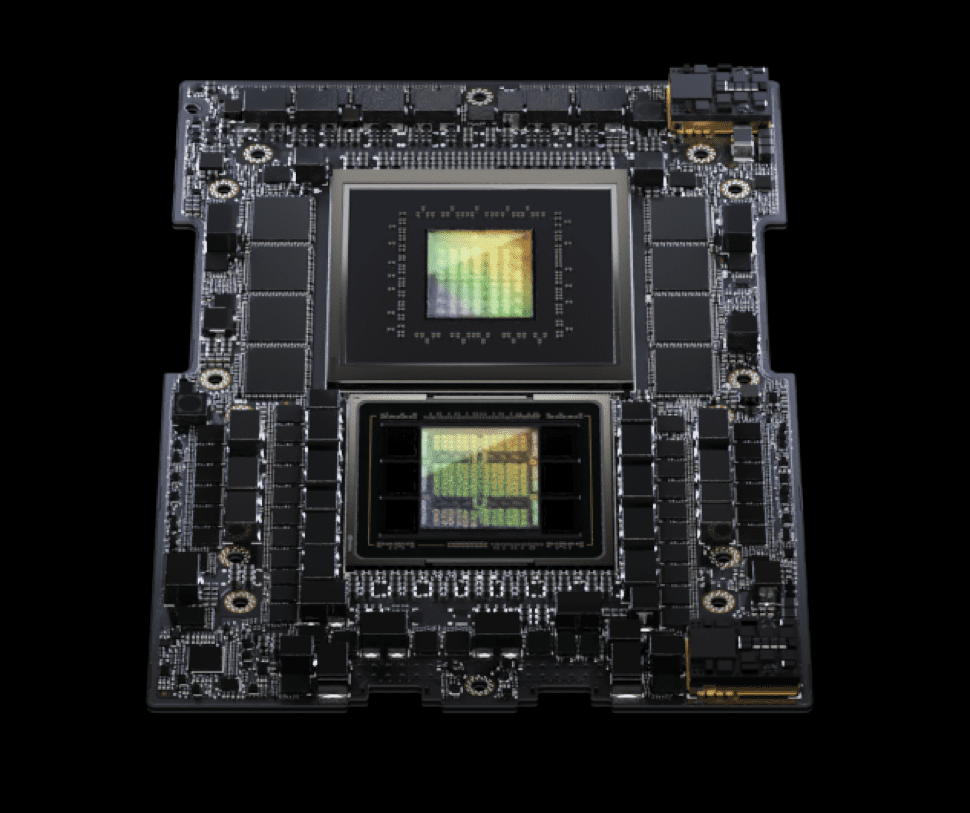

Building on the original Grace Hopper superchip, the newly unveiled GH200 in its dual configuration raises the performance bar with 144 Arm Neoverse V-series cores. Like its predecessor, it pairs the Grace CPU with Nvidia’s H100 Tensor Core GPU, built on the Hopper architecture. The H100 GPUs deliver at least 51 teraflops of FP32 compute, and the GH200 as a whole reaches 8 petaflops of AI performance, a level poised to reshape accelerated computing.

Reaching this level of performance while keeping the same underlying silicon architecture required an upgraded memory subsystem. The GH200 carries 282GB of the latest HBM3e memory, which is 50 percent faster than HBM3 and delivers a combined 10TB/sec of bandwidth. This allows the GH200 to run models 3.5 times larger than its first-generation counterpart could handle, and makes it the world’s first processor to use HBM3e memory.
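As a rough illustration of what 282GB of HBM3e means for model size, the sketch below estimates how many parameters could fit in that memory at common numeric precisions. It is a back-of-envelope calculation under stated assumptions, not an Nvidia sizing method: it treats 1GB as 10^9 bytes and counts model weights only, ignoring activations, KV caches, optimizer state and framework overhead. The precision table and the function name `max_params_billions` are illustrative choices, not part of any Nvidia tool.

```python
# Back-of-envelope sketch: how many model weights fit in a given memory budget.
# Assumptions (not from Nvidia): 1 GB = 10**9 bytes, weights only, no room
# reserved for activations, KV cache, optimizer state, or framework buffers.

BYTES_PER_PARAM = {
    "FP32": 4,
    "FP16/BF16": 2,
    "FP8": 1,
}


def max_params_billions(memory_gb: float, bytes_per_param: int) -> float:
    """Return how many parameters (in billions) fit in memory_gb of memory."""
    total_bytes = memory_gb * 10**9
    return total_bytes / bytes_per_param / 10**9


if __name__ == "__main__":
    hbm3e_gb = 282  # dual-configuration GH200 HBM3e capacity cited in the article
    for name, nbytes in BYTES_PER_PARAM.items():
        print(f"{name}: ~{max_params_billions(hbm3e_gb, nbytes):.1f}B parameters")
```

At 2 bytes per parameter (FP16/BF16) this works out to roughly 141 billion parameters of weights. Real deployments need substantial headroom beyond these figures, so the usable model size is smaller.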

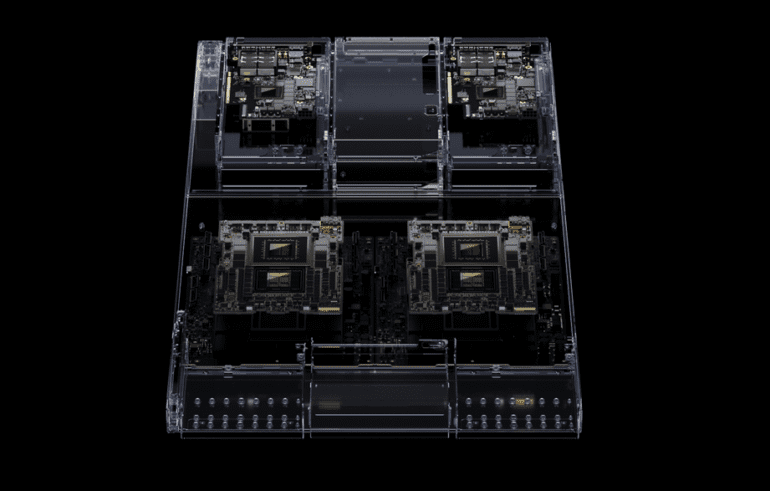

Nvidia also stresses the scalability of the Grace Hopper platform: additional Grace Hopper chips can be linked through Nvidia’s NVLink interconnect. The defining feature of this modular design is a coherent memory pool shared by the connected CPUs and GPUs, reaching up to 1.2TB. Leading server manufacturers are expected to introduce more than 100 GH200 configurations, slated for release in Q2 2024, which would further strengthen Nvidia’s position at the forefront of computing architecture.

The Nvidia Grace Hopper GH200 in single configuration. Source: Nvidia
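One way to see how a combined coherent memory figure of about 1.2TB can arise is to add GPU-attached HBM3e to CPU-attached memory across the connected superchips. The sketch below rests on assumptions that are not stated in this article: a dual (two-superchip) setup, 480GB of LPDDR5X attached to each Grace CPU, and an even split of the 282GB of HBM3e (141GB per GPU). The `Superchip` class and `coherent_pool_tb` function are hypothetical names used only for illustration.

```python
# Rough sketch of a combined coherent memory pool in a two-superchip setup.
# Assumptions (not stated in the article): each superchip pairs one Grace CPU
# with 480 GB of LPDDR5X and one Hopper GPU with 141 GB of HBM3e (half of the
# article's 282 GB). Decimal units: 1 TB = 1000 GB.

from dataclasses import dataclass


@dataclass
class Superchip:
    cpu_memory_gb: int  # CPU-attached LPDDR5X (assumed value)
    gpu_memory_gb: int  # GPU-attached HBM3e

    def total_gb(self) -> int:
        """Memory on this superchip, CPU side plus GPU side."""
        return self.cpu_memory_gb + self.gpu_memory_gb


def coherent_pool_tb(chips: list[Superchip]) -> float:
    """Sum every chip's CPU and GPU memory, assuming all of it is coherently shared."""
    return sum(chip.total_gb() for chip in chips) / 1000


if __name__ == "__main__":
    dual = [Superchip(cpu_memory_gb=480, gpu_memory_gb=141) for _ in range(2)]
    print(f"Combined memory: ~{coherent_pool_tb(dual):.2f} TB")
```

Under these assumptions the total comes to about 1.24TB, in line with the roughly 1.2TB figure; different per-chip memory options would change the result.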

Conclusion:

Nvidia’s strategic focus on the Grace Hopper superchip, which pairs Arm-based server SoCs with GPUs, has propelled the company to the forefront of the generative AI landscape. The GH200’s capabilities, including its upgraded memory architecture and higher processing power, position Nvidia to capitalize on growing demand for advanced data center solutions and mark a significant step in extending the company’s influence in the market.