TL;DR:

- The battle between language models prefixLM and causalLM unfolds in the realm of in-context learning.

- prefixLM employs unrestricted attention, fostering communication among in-context samples.

- causalLM wields autoregressive attention, maintaining a sequential learning trajectory by blocking attention to later samples.

- Numerical tasks such as linear regression, nonlinear regression, and multiclass classification serve as testing grounds.

- Both models excel in training, but causalLM falters with higher test errors due to limited mutual attention.

- prefixLM emerges as the champion, showcasing its prowess in harnessing context for learning.

- The outcome underscores the significance of context in AI learning processes.

Main AI News:

In the annals of history, the legendary clash of the Trojan War resonates, with Achilles etching his name indelibly by triumphing over Prince Hector. In the dynamic world of artificial intelligence today, the pursuit of leveraging context to enhance learning and comprehension has taken center stage. In this evolving saga, two contenders, prefixLM and causalLM, have emerged as stalwart combatants in the arena of in-context learning. As the duel between these titans of language models rages on, it becomes evident that their treatment of context is the linchpin that determines learning outcomes.

The Contenders: Unveiling Theoretical Armaments

Taking to the arena, prefixLM and causalLM are each equipped with a distinctive theoretical framework. PrefixLM dons the mantle of boundless attention, fostering unrestricted communication among in-context instances. It treats the in-context instances as a prefix and applies full attention across the first n positions, so every instance in the prefix can attend to every other.

In the opposing corner, causalLM steps forth, armed with autoregressive attention, a mechanism that prevents each in-context instance from attending to the instances that come after it. This calculated approach preserves a sequential learning trajectory, warding off any influence from future positions. However, one must ponder: does this method genuinely capture the essence of context? Can it outmatch prefixLM’s formidable approach to in-context learning (ICL)?
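The difference between the two attention patterns can be made concrete as a pair of boolean masks. The following is a minimal sketch in NumPy, not code from the study; the function names `causal_mask` and `prefix_mask` and the toy sizes are assumptions chosen for illustration. A `True` entry at row i, column j means position i may attend to position j.

```python
import numpy as np

def causal_mask(seq_len):
    """causalLM-style mask: position i may attend only to positions 0..i."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def prefix_mask(seq_len, n_prefix):
    """prefixLM-style mask: the first n_prefix positions attend to each other
    freely; positions after the prefix remain causal."""
    mask = causal_mask(seq_len)
    mask[:n_prefix, :n_prefix] = True
    return mask

# Toy sizes (hypothetical): 3 in-context positions followed by 1 query position.
print(causal_mask(4).astype(int))
print(prefix_mask(4, 3).astype(int))
```

In the causal mask the first in-context position sees only itself, while in the prefix mask it sees all three in-context positions. The query position sees everything before it in both cases.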

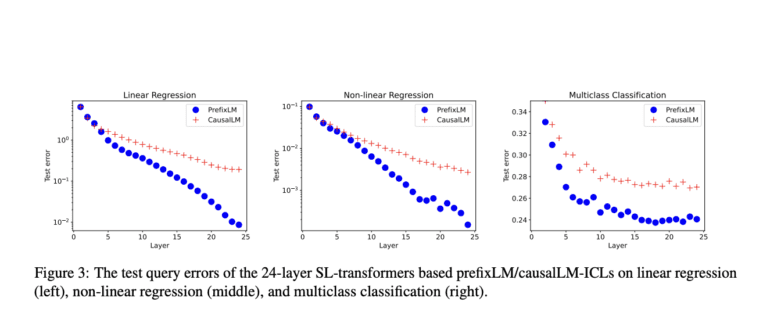

The Battleground of Evaluation

To transition from theory to practicality, a synthetic battlefield of numerical tasks serves as the crucible for scrutiny, employing the might of softmax transformers. Within this arena, linear regression, nonlinear regression, and multiclass classification unfurl as the testing grounds where prefixLM and causalLM collide. As the dust settles and echoes dissipate, empirical evidence paints the picture.

In the linear regression tasks, both models show training errors that decay at a linear rate, showcasing their commendable learning acumen. Yet the tide turns once test errors come into view. CausalLM falters, confronted with notably larger test errors, prompting questioning glances from observers. The cause? CausalLM's autoregressive attention keeps in-context instances from attending to those that come later, which restricts mutual attention among them and leads to a suboptimal outcome.
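To see the restriction on mutual attention in action, here is a rough sketch that builds one synthetic linear regression prompt and computes softmax attention weights under each mask. It is a toy illustration only: the identity query/key projections, the token layout of (x_i, y_i) rows, and the names `build_mask`, `attention_weights`, and `make_prompt` are all assumptions, not the setup used in the study.

```python
import numpy as np

def build_mask(seq_len, n_prefix=0):
    """Causal mask; if n_prefix > 0, the first n_prefix positions attend to each other fully."""
    mask = np.tril(np.ones((seq_len, seq_len), dtype=bool))
    mask[:n_prefix, :n_prefix] = True
    return mask

def attention_weights(tokens, mask):
    """Softmax attention weights with identity query/key projections (a simplification)."""
    scores = tokens @ tokens.T / np.sqrt(tokens.shape[1])
    scores = np.where(mask, scores, -np.inf)             # masked positions get zero weight
    scores = scores - scores.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)

def make_prompt(rng, n_examples=4, dim=3):
    """Rows are (x_i, y_i) with y_i = w . x_i; the final row is the query with its label zeroed."""
    w = rng.normal(size=dim)
    x = rng.normal(size=(n_examples + 1, dim))
    y = x @ w
    y[-1] = 0.0
    return np.concatenate([x, y[:, None]], axis=1)

rng = np.random.default_rng(0)
tokens = make_prompt(rng)
n = tokens.shape[0] - 1  # number of in-context examples

weights_causal = attention_weights(tokens, build_mask(n + 1))
weights_prefix = attention_weights(tokens, build_mask(n + 1, n_prefix=n))

print("causalLM, first example attends to:", np.round(weights_causal[0], 3))
print("prefixLM, first example attends to:", np.round(weights_prefix[0], 3))
```

Under the causal mask the first example places all of its attention weight on itself by construction, so nothing from later examples can reach it. Under the prefix mask the same row spreads weight across all in-context examples.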

The Ascension of a Champion

With empirical revelations illuminating the way, it is prefixLM that ascends the podium as the champion of in-context learning. Its open-armed approach that empowers diverse in-context instances to communicate proves pivotal. Across linear and nonlinear regression, as well as multiclass classification, prefixLM steadfastly asserts its superiority, affirming its commanding grasp on the power of context.

As the curtain descends on this monumental clash, prefixLM stands tall, waving the banner of holistic context comprehension. CausalLM, while gallant in its efforts, might need to reevaluate its strategy within the in-context arena. The battle crystallizes that, indeed, prefixLM is the prevailing champion of this day, poised for a future encounter with a new challenger in the AI battlefield.

Conclusion:

This analysis underscores the critical role of context in AI learning. PrefixLM’s open-armed approach and the seamless exchange of information among in-context instances have positioned it as the frontrunner in the battle of language models. This victory suggests that full access to context is paramount for superior learning outcomes, a valuable insight for AI market players weighing investments in context-enhancing strategies and technologies.