TL;DR:

- Cornell University researchers introduce QuIP, a post-training quantization method for large language models (LLMs).

- QuIP leverages incoherent weight and Hessian matrices for efficient post-training parameter quantization.

- Two-bit quantization techniques are developed, scalable to LLM-sized models.

- QuIP makes the Hessian matrices incoherent through random orthogonal matrix transformations.

- Adaptive rounding minimizes a quadratic proxy objective that measures the error between quantized and original weights.

- Empirical results showcase significant improvements in LLM quantization, especially at higher compression rates.

- QuIP produces viable results with only two bits per weight, and the gap to 4-bit compression is modest for large models.

- Interactions among transformer blocks are not captured by the proxy objective and remain unexplored.

- QuIP’s potential implications for the market warrant attention.

Main AI News:

In the realm of cutting-edge AI advancements, large language models (LLMs) have propelled breakthroughs across text creation, few-shot learning, reasoning, and protein sequence modeling. These models, boasting billions upon billions of parameters, have opened the doors to unprecedented possibilities. However, their colossal scale brings forth complexities in deployment, sparking an intensive exploration of streamlined inference strategies.

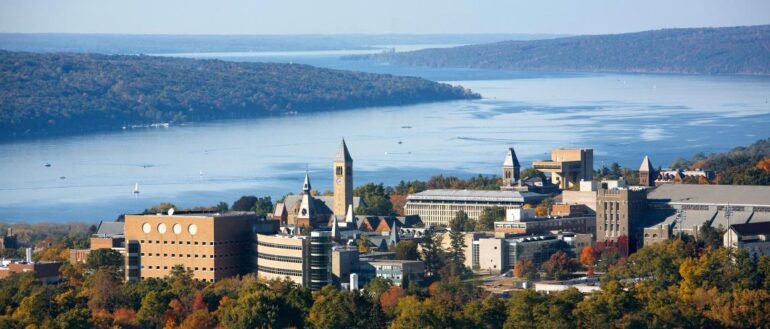

Cornell University researchers have taken a new approach to making LLMs practical in real-world settings. Their method is post-training parameter quantization, which compresses the parameters of an already trained LLM to low-precision values for improved efficiency. The key insight is that rounding weights to a finite set of compressed values is easier when the weight and proxy Hessian matrices are incoherent. Intuitively, incoherence means that neither the weight magnitudes nor the directions in which rounding must be most accurate are too large in any single coordinate.
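To make the notion of incoherence concrete, here is a minimal sketch that measures it numerically. The exact formulas are an assumption on our part, since the article only describes incoherence intuitively: a weight matrix W of shape m x n is treated as μ-incoherent when max|W_ij| ≤ μ‖W‖_F/√(mn), and a symmetric Hessian H = QΛQᵀ as μ-incoherent when max|Q_ij| ≤ μ/√n. The function names are hypothetical.

```python
import numpy as np

def weight_incoherence(W):
    """Smallest mu with max|W_ij| <= mu * ||W||_F / sqrt(m*n) (assumed definition)."""
    m, n = W.shape
    return float(np.abs(W).max() * np.sqrt(m * n) / np.linalg.norm(W))

def hessian_incoherence(H):
    """Smallest mu with max|Q_ij| <= mu / sqrt(n), where H = Q diag(lam) Q^T."""
    n = H.shape[0]
    _, Q = np.linalg.eigh(H)  # eigenvectors of the symmetric matrix H
    return float(np.abs(Q).max() * np.sqrt(n))

# A flat matrix spreads its mass evenly; a spiky one puts it all in one entry.
flat = np.ones((4, 4))
spiky = np.zeros((4, 4))
spiky[0, 0] = 1.0
print(weight_incoherence(flat), weight_incoherence(spiky))
```

Under these assumed definitions the flat matrix reaches the smallest possible value, μ = 1, while the spiky matrix reaches the largest, μ = √(mn) = 4. Lower values mean no single coordinate dominates, which is the situation the researchers describe as easier to quantize.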

Building on this insight, the researchers developed two-bit quantization techniques that are both theoretically grounded and scalable to LLM-sized models. The resulting method is called “quantization with incoherence processing” (QuIP).

QuIP consists of two phases:

- Ensuring Incoherence: The initial phase applies efficient pre- and post-processing to ensure that the Hessian matrices are incoherent. This is achieved by multiplying them by a Kronecker product of random orthogonal matrices.

- Precision Refinement: The second phase applies an adaptive rounding procedure. This procedure uses an estimate of the Hessian to minimize a quadratic proxy objective that measures the error between the quantized weights and the original weights. The term “incoherence processing” refers to both the pre-processing and the post-processing steps of the method.
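The two phases above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the researchers' implementation: the proxy Hessian is assumed to be the second moment of some layer inputs `X`, the proxy objective is assumed to be tr((Ŵ − W) H (Ŵ − W)ᵀ), and plain nearest rounding to a uniform 2-bit grid stands in for QuIP's adaptive rounding. All function names are hypothetical.

```python
import numpy as np

def random_orthogonal(n, rng):
    # QR of a Gaussian matrix yields an orthogonal factor; fix column signs.
    q, r = np.linalg.qr(rng.standard_normal((n, n)))
    return q * np.sign(np.diag(r))

def kron_orthogonal(p, q, rng):
    # Kronecker product of two small orthogonal matrices is a (p*q) x (p*q)
    # orthogonal matrix that is cheap to store and to apply.
    return np.kron(random_orthogonal(p, rng), random_orthogonal(q, rng))

def proxy_loss(W_hat, W, H):
    # Assumed quadratic proxy objective: tr((W_hat - W) H (W_hat - W)^T).
    D = W_hat - W
    return float(np.trace(D @ H @ D.T))

def nearest_round(W, bits=2):
    # Stand-in for adaptive rounding: nearest point on a uniform grid.
    levels = 2 ** bits - 1
    lo, hi = W.min(), W.max()
    scale = (hi - lo) / levels
    return np.round((W - lo) / scale) * scale + lo

rng = np.random.default_rng(0)
m, n = 16, 64
W = rng.standard_normal((m, n))      # toy weight matrix
X = rng.standard_normal((n, 256))    # toy layer inputs (assumption)
H = X @ X.T / X.shape[1]             # proxy Hessian estimate

U = kron_orthogonal(4, 4, rng)       # m x m
V = kron_orthogonal(8, 8, rng)       # n x n

W_t = U.T @ W @ V                    # pre-processing
H_t = V.T @ H @ V
W_t_hat = nearest_round(W_t, bits=2) # quantize in the transformed basis
W_hat = U @ W_t_hat @ V.T            # post-processing

print(proxy_loss(W_t_hat, W_t, H_t), proxy_loss(W_hat, W, H))
```

The two printed losses agree up to floating-point error because U and V are orthogonal, so the transform changes which coordinates carry the rounding error without changing the proxy objective itself. Using a Kronecker product means only the small factors need to be stored, which is presumably why the method scales to LLM-sized layers.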

The researchers also present a theoretical analysis, the first of its kind for a quantization algorithm that scales to LLM-sized models. The analysis examines the effect of incoherence and shows that the quantization procedure is superior to a broad class of rounding techniques. It also covers OPTQ, an earlier technique, and shows that QuIP without incoherence processing yields a more efficient implementation of that method.

Empirical results show that incoherence processing substantially improves large-model quantization, with the largest gains at higher compression rates. The approach is the first LLM quantization method to produce viable results using only two bits per weight. Notably, the gap between 2-bit and 4-bit compression is modest for large LLMs (more than 2 billion parameters). As model size grows, the gap shrinks, suggesting that accurate 2-bit inference in LLMs may be achievable.
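To put the bit widths in perspective, the following back-of-the-envelope sketch computes weight storage for a 2-billion-parameter model. The 16-bit baseline is an assumption for comparison, and the calculation ignores any per-group scales or other metadata a real quantization format would add. The helper name is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

for bits in (16, 4, 2):
    print(f"{bits:>2} bits: {weight_memory_gb(2e9, bits)} GB")
```

For 2 billion parameters this gives 4.0 GB at 16 bits, 1.0 GB at 4 bits, and 0.5 GB at 2 bits, so moving from 4-bit to 2-bit halves the weight footprint again.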

However, the proxy objective does not account for interactions among transformer blocks, or even among layers within a block. The team acknowledges that it is unclear how much benefit including such interactions would bring at this scale, and whether it would be worth the computational effort.

In a field where efficiency drives progress, QuIP is a notable advance that could shape how large language models are deployed. The Cornell University research combines theoretical insight with practical engineering, bringing LLM inference closer to both efficiency and performance.

Conclusion:

The introduction of QuIP marks a significant advance in LLM efficiency. The technique, backed by Cornell University’s research, has the potential to change how large language models are deployed. As the market for AI technologies continues to evolve, QuIP’s ability to improve quantization efficiency could lead to more streamlined deployments, increased scalability, and improved real-world applications of LLMs. This aligns with the growing demand for efficient AI solutions.