TL;DR:

- Deep learning’s impact spans image processing, speech recognition, etc.

- Optical neural networks offer gains in speed, parallelism, and energy efficiency.

- Pluggable Diffractive Neural Networks (P-DNN) solve ONN reconfigurability issues.

- P-DNN switches tasks by swapping pluggable components, improving flexibility and reducing resource consumption.

- Architecture: cascaded metasurfaces, common preprocessing, task-specific layers.

- Optical neurons are optimized with stochastic gradient descent and error backpropagation.

- A transfer-learning optimization flow adapts the network to diverse classification tasks.

- Accuracy above 90% on digit and fashion classification tasks.

- P-DNN could extend to object detection and intelligent object filtering.

- P-DNN points toward more computationally efficient deep learning.

Main AI News:

Deep learning, loosely modeled on how the human brain processes information, now underpins image processing, image recognition, speech recognition, and language translation. Yet it runs almost entirely on electronic computers, whose computing power is limited and whose von Neumann architecture creates a bottleneck between memory and processor. The result is constrained performance and high energy consumption. Optical neural networks, which compute with light rather than electrons, offer a promising way around these limits, with advantages in speed, parallelism, and energy efficiency.

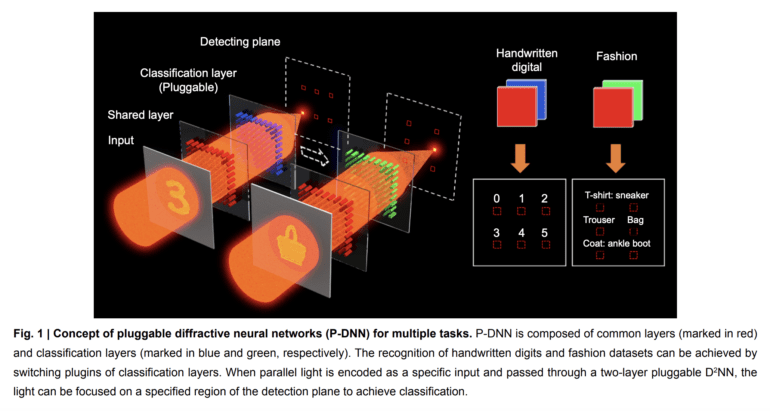

Researchers have now proposed a solution: Pluggable Diffractive Neural Networks (P-DNN). Traditional optical neural networks (ONNs) are rigid and hard to reconfigure, so each new task requires retraining the entire network, which is slow and resource-intensive. P-DNN instead switches between recognition tasks by swapping pluggable components within the network. This makes the design more flexible while cutting the computational resources and training time that each new task requires.

The architecture is built from cascaded metasurfaces organized into two parts: a common preprocessing layer shared across tasks, and task-specific classification layers. Following optical diffraction theory, the optical neurons of each layer are implemented as meta-atoms on the metasurfaces. During training, the parameters of these meta-atoms are optimized using stochastic gradient descent and error backpropagation. The article also describes an optimization flow based on transfer learning, which lets the system reach high accuracy across a range of classification tasks.
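To make the layered design concrete, here is a rough numerical sketch of a forward pass through one common layer and one swappable task-specific layer. It is not the researchers' implementation: it assumes phase-only layers, free-space propagation modeled with the angular spectrum method, and a simple strip-shaped detector layout. The function names, the optical parameters (`wavelength`, `pixel_pitch`, `distance`), and the random, untrained phase masks are all hypothetical placeholders. In a real system the phases would be learned with stochastic gradient descent and backpropagation, as described above.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a square complex field over `distance` in free space."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel_pitch)           # spatial frequencies (1/m)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))  # longitudinal wavenumber
    # Evanescent components (arg <= 0) are discarded.
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def pdnn_forward(image, common_phase, plugin_phase, **optics):
    """Common preprocessing layer followed by one pluggable task layer."""
    field = image.astype(complex)
    for phase in (common_phase, plugin_phase):
        field = angular_spectrum_propagate(field, **optics)
        field = field * np.exp(1j * phase)          # phase-only modulation
    field = angular_spectrum_propagate(field, **optics)
    return np.abs(field) ** 2                       # detector sees intensity

def classify(intensity, n_classes):
    """Pick the brightest of n_classes vertical strips (assumed layout)."""
    regions = np.array_split(intensity, n_classes, axis=1)
    return int(np.argmax([r.sum() for r in regions]))

# Illustrative values only; none of these come from the article.
rng = np.random.default_rng(0)
n = 64
optics = dict(wavelength=1.55e-6, pixel_pitch=2e-6, distance=2e-4)
common = rng.uniform(0, 2 * np.pi, (n, n))          # shared across tasks
plugins = {                                         # one mask per task
    "digits": rng.uniform(0, 2 * np.pi, (n, n)),
    "fashion": rng.uniform(0, 2 * np.pi, (n, n)),
}
image = rng.random((n, n))

for task, plugin in plugins.items():
    intensity = pdnn_forward(image, common, plugin, **optics)
    print(task, classify(intensity, n_classes=10))
```

Switching tasks in this sketch means passing a different `plugin_phase` while `common_phase` stays fixed, which mirrors the idea of reusing the preprocessing layer and retraining only the task-specific part.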

These claims are backed by both simulation and experiment. The researchers report accuracy above 90% on both digit and fashion classification tasks, which supports the effectiveness of the P-DNN framework.

The approach is not limited to classification. The researchers point to real-world applications such as object detection in autonomous driving and intelligent object filtering in microscope imaging. By building on optical neural networks, P-DNN addresses some shortcomings of conventional deep learning and points toward more efficient computation.

Conclusion:

Pluggable Diffractive Neural Networks represent a notable advance in machine learning. By computing with light and pairing a shared preprocessing layer with swappable task-specific layers, P-DNN offers flexibility and efficiency across diverse recognition tasks. For the market, this suggests a path toward businesses running precise, energy-efficient operations as optical computing matures.