TL;DR:

- IBM Research finds analog chips can dramatically enhance AI speech recognition efficiency.

- Analog chip is 14 times more efficient than conventional chips at running AI speech recognition models.

- Challenges: Demand for GPU chips, escalating AI power consumption, data movement bottlenecks.

- IBM introduced “Computing-In-Memory” (CiM) solution to overcome memory transfer issues.

- CiM analog chip operates computations within its memory, boosting efficiency.

- Chip exhibits remarkable efficiency of 12.4 trillion operations per second per watt in voice recognition.

- Intel’s Hechen Wang sees potential for the chip in current AI neural networks.

- Customized chips offer efficiency but may lack versatility.

- Analog AI chips remain valuable as long as CNN and RNN models persist.

- Market impact: Addresses energy concerns, eases digital chip shortage, enhances AI efficiency.

Main AI News:

A recent IBM Research study suggests that analog computer chips could make artificial intelligence (AI) speech recognition models far more efficient. The development could help address the growing energy demands of AI research and ease the worldwide shortage of the digital chips that the field depends on.

Compared with conventional chips, the analog chip engineered by IBM Research showed a 14-fold improvement in efficiency when running AI speech recognition models. Its developers say it could overcome several obstacles to AI progress.

One of the main problems facing the AI sector is the soaring demand for graphics processing unit (GPU) chips, which were originally designed for gaming but are now indispensable for training and running AI models. Supply has not kept pace with that demand. Studies also indicate that the energy consumption of AI systems has risen sharply over time, and that much of this energy comes from non-renewable sources. These problems have raised concerns that the growth of AI models could plateau.

Another limitation of current AI hardware is the cost of moving data between memory and processors, which creates significant performance bottlenecks. To address this, IBM Research has introduced an approach known as “Computing-In-Memory” (CiM). The CiM analog chip performs computations directly within its memory, at large scale.

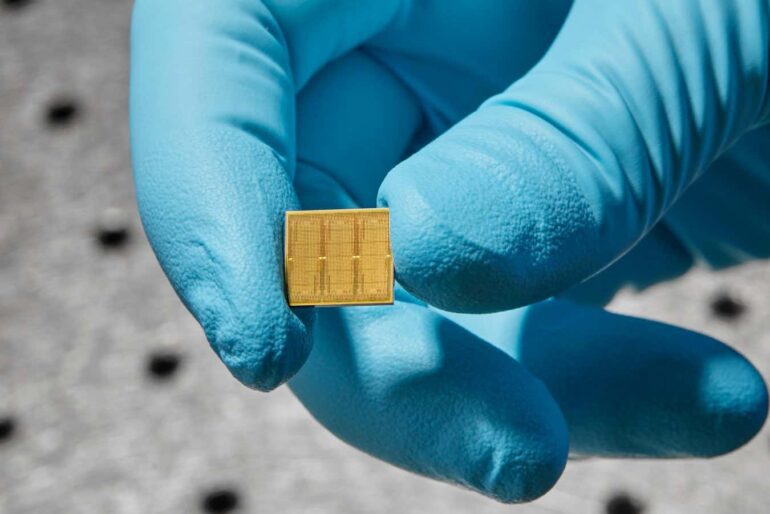

At the heart of the IBM chip is a device containing 35 million phase-change memory cells, each programmable to one of two states or to gradations between them, akin to transistors on computer chips. These states can represent the synaptic weights between artificial neurons in a neural network. Such weights are conventionally stored as digital values in a computer’s memory; here they can be stored and processed in place, without costly retrieval or storage operations involving distant memory chips.
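To make the idea of weights that are "stored and processed" in the same cells more concrete, here is a minimal software sketch of an in-memory matrix-vector product. It is not IBM's design: the function names, the choice of 16 programmable levels per cell, and the Gaussian noise model are all assumptions made for illustration. In analog in-memory hardware generally, the multiply and the sum happen physically inside the memory array; here ordinary arithmetic stands in for that.

```python
import random


def quantize(weight, levels=16, w_max=1.0):
    """Snap a weight in [-w_max, w_max] to one of `levels` evenly spaced values.

    This stands in for programming a memory cell to one of a limited number
    of gradations. The level count is an assumption, not a figure from IBM.
    """
    step = 2 * w_max / (levels - 1)
    clipped = max(-w_max, min(w_max, weight))
    return round((clipped + w_max) / step) * step - w_max


def analog_matvec(weights, inputs, levels=16, noise_std=0.0, rng=None):
    """Simulate a matrix-vector product computed where the weights are stored.

    Each row of `weights` is one output neuron. Every stored weight is
    quantized and optionally perturbed by noise (a hypothetical model of
    analog imprecision), multiplied by its input, and summed per row.
    """
    rng = rng or random.Random(0)
    outputs = []
    for row in weights:
        total = 0.0
        for w, x in zip(row, inputs):
            stored = quantize(w, levels) + rng.gauss(0.0, noise_std)
            total += stored * x
        outputs.append(total)
    return outputs


if __name__ == "__main__":
    layer = [[0.30, -0.70, 0.10], [0.95, 0.20, -0.40]]
    activations = [1.0, 0.5, -0.25]
    print("ideal cells:", analog_matvec(layer, activations))
    print("noisy cells:", analog_matvec(layer, activations, noise_std=0.02))
```

The point of the sketch is that the weight matrix never leaves `analog_matvec`; only the inputs and outputs cross the boundary, which is the data movement that in-memory computing aims to reduce. The price is limited precision, which the quantization and noise terms are meant to suggest.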

In tests on voice recognition tasks, the chip achieved an efficiency of 12.4 trillion operations per second per watt, a notable improvement over conventional processors.
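As a rough way to read that figure: one watt is one joule per second, so operations per second per watt is the same as operations per joule. The short calculation below converts the quoted 12.4 trillion into energy per operation. It assumes the figure applies uniformly to every operation, which the article does not state, and the helper name `energy_joules` is made up for this example.

```python
# Efficiency figure quoted in the article: operations per second per watt.
OPS_PER_SECOND_PER_WATT = 12.4e12

# 1 W = 1 J/s, so ops/s/W is equivalent to operations per joule.
joules_per_op = 1.0 / OPS_PER_SECOND_PER_WATT
femtojoules_per_op = joules_per_op * 1e15


def energy_joules(num_ops):
    """Energy to run `num_ops` operations, assuming constant efficiency."""
    return num_ops * joules_per_op


if __name__ == "__main__":
    print(f"energy per operation: {femtojoules_per_op:.1f} fJ")
    print(f"energy for 1e15 operations: {energy_joules(1e15):.1f} J")
```

Under that assumption, each operation costs on the order of 80 femtojoules.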

Intel’s Hechen Wang concedes that the chip is still at an early stage, but says trials have shown it to be effective on AI neural networks in common use today, including Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN). Wang suggests its potential could even extend to supporting applications like ChatGPT.

Wang adds that although highly specialized chips offer high efficiency, they may sacrifice versatility. Just as a graphics processing unit cannot handle every task a central processing unit can, analog AI chips, and analog in-memory computing chips in particular, have limitations. However, if AI continues on its current trajectory, specialized chips could become more widespread.

Wang also says the chip’s usefulness extends beyond speech recognition. As long as CNN or RNN models remain prevalent, the chip will not become obsolete or end up as electronic waste. In addition, analog AI, or analog in-memory computing, uses silicon and power more efficiently than CPUs or GPUs, which could reduce costs.

Overall, IBM Research’s analog chip is a significant step for the AI field, addressing concerns about energy consumption and easing the shortage of digital chips. Further research and refinement are still needed, but the chip promises to improve AI model efficiency and support a range of future applications.

Conclusion:

IBM Research’s breakthrough in analog chip technology marks a significant step towards more energy-efficient AI models. The CiM analog chip’s ability to process computations within its memory and its promising efficiency rates indicate a positive direction for the AI market. As energy consumption concerns grow, this innovation could lead to a more sustainable and effective future for AI applications, while addressing the challenges of chip shortages and power demands.