TL;DR:

- “Nutrition labels” introduced by Twilio to enhance transparency in AI.

- Labels reveal AI models, data usage, optional features, and human involvement.

- A privacy ladder separates data used only for internal projects from data used to train models.

- Twilio’s online tool lets other companies generate similar labels, encouraging industry-wide adoption of AI transparency.

- Salesforce’s acceptable use policy sets bounds on generative AI applications.

- Both companies stress transparency for building customer trust in AI.

- Transparency is crucial, since research shows limited customer comfort with AI practices.

- Broader regulations and collaboration are advocated for responsible AI usage.

Main AI News:

As artificial intelligence (AI) evolves rapidly, transparency is becoming a central theme. With generative AI adoption expanding, technology providers increasingly recognize that they must build trust with their customers. One emerging approach is the AI “nutrition label,” which shows how an AI model uses customer data. This kind of disclosure addresses privacy and security concerns and lays a foundation for responsible, ethical AI deployment.

The Drive for Transparency

The spread of AI features across software platforms has raised privacy and security concerns. Many businesses are responding cautiously, urging employees to exercise discretion when using AI tools. Against this backdrop, Twilio, a communications automation company, has announced that it will add “nutrition labels” to its AI services. The goal is to give customers clear, accessible information about how those services handle data.

Understanding the “Nutrition Labels”

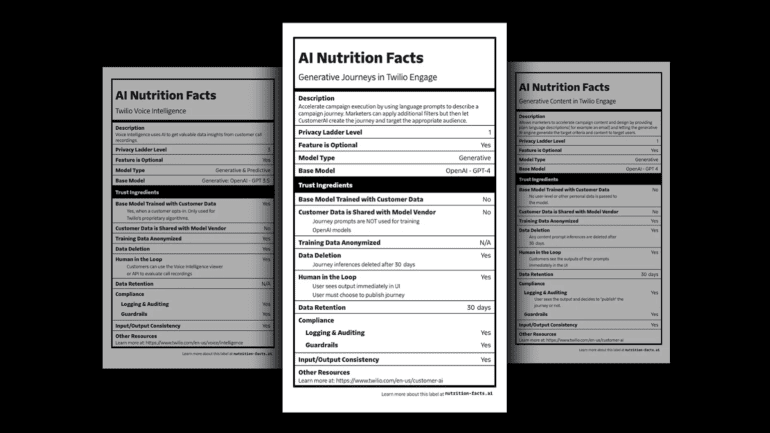

Twilio’s “nutrition labels” summarize how its AI models interact with data:

1. AI Models and Training: The labels specify which AI models are used, whether those models are trained on customer data, and whether humans are involved in the process.

2. Feature Customization: They indicate whether features are optional, giving users the ability to tailor their AI experience.

3. Privacy Ladder: A privacy ladder distinguishes data used solely for internal projects from data used to train models for external customers. The label also flags whether the data contains personally identifiable information (PII).
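The article does not publish the format of Twilio’s labels, so the following is only a hypothetical Python sketch of the disclosures listed above: which models a feature uses, whether they are trained on customer data, whether humans are involved, whether the feature is optional, where the data sits on the privacy ladder, and whether it contains PII. The class, field, and enum names (`AINutritionLabel`, `PrivacyLevel`, and so on) and the two-rung ladder are assumptions for illustration, not Twilio’s actual schema.

```python
from dataclasses import dataclass
from enum import Enum


class PrivacyLevel(Enum):
    """Hypothetical rungs of a privacy ladder (names and ordering are assumptions)."""
    INTERNAL_ONLY = 1           # data used solely for the provider's internal projects
    TRAINS_EXTERNAL_MODELS = 2  # data used to train models for external customers


def _yes_no(flag: bool) -> str:
    """Render a boolean as the Yes/No wording a label might use."""
    return "Yes" if flag else "No"


@dataclass(frozen=True)
class AINutritionLabel:
    """Hypothetical record of the disclosures the article lists (not Twilio's schema)."""
    feature_name: str
    models: tuple[str, ...]          # which AI models the feature uses
    trained_on_customer_data: bool   # are those models trained on customer data?
    human_in_the_loop: bool          # is there human involvement?
    optional: bool                   # can the customer opt out of the feature?
    privacy_level: PrivacyLevel      # rung on the privacy ladder
    contains_pii: bool               # does the data include personally identifiable information?

    def render(self) -> str:
        """Format the label as plain text, one disclosure per line."""
        return "\n".join([
            f"AI Nutrition Label: {self.feature_name}",
            f"Models: {', '.join(self.models)}",
            f"Trained on customer data: {_yes_no(self.trained_on_customer_data)}",
            f"Human in the loop: {_yes_no(self.human_in_the_loop)}",
            f"Optional feature: {_yes_no(self.optional)}",
            f"Privacy ladder: {self.privacy_level.name}",
            f"Contains PII: {_yes_no(self.contains_pii)}",
        ])


if __name__ == "__main__":
    # Example values are invented for illustration only.
    label = AINutritionLabel(
        feature_name="Reply suggestions",
        models=("example-llm",),
        trained_on_customer_data=False,
        human_in_the_loop=True,
        optional=True,
        privacy_level=PrivacyLevel.INTERNAL_ONLY,
        contains_pii=False,
    )
    print(label.render())
```

Making every disclosure a required field is one possible design choice: a label cannot be created with any item left blank, which would help keep labels from different vendors comparable.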

Promoting Industry-Wide Transparency

Twilio’s effort extends beyond its own services: the company also provides an online tool that other companies can use to generate similar “nutrition labels” for their AI products. By encouraging a standardized framework for disclosure, the tool aims to promote trust across the industry.

Salesforce’s Acceptable Use Policy

Salesforce, a leading CRM provider, is also stepping up its transparency efforts with an acceptable use policy for its generative AI technologies. The policy sets boundaries on how the technology may be used, barring applications such as weapons development, pornography, and political campaigning. It also prohibits using the AI to give advice that should normally come from a licensed professional. Through these restrictions, Salesforce aims to ensure responsible AI use.

Trust and Transparency

Both Twilio and Salesforce treat transparency as essential to fostering trust. Despite the excitement about generative AI’s potential, research shows that customer trust remains relatively low. Twilio’s research found that while businesses are increasingly adopting AI-based personalization, only 41% of customers are comfortable with the practice. Building trust therefore requires disclosing how data is used in AI products.

Looking Ahead

The efforts of Twilio and Salesforce offer a model for the rest of the industry. Transparency alone, however, cannot guarantee responsible AI use. Salesforce stresses the need for complementary safeguards, such as adversarial testing and content filters, to prevent misuse and the spread of harmful content.

The Call for Regulation and Collaboration

Both companies advocate broader regulation and an ongoing societal dialogue about AI ethics. Salesforce’s commitment to industry norms and legislative guardrails reflects its cautious approach to AI deployment. The company acknowledges that as technology evolves and societal norms shift, some of its usage prohibitions may change as well.

Conclusion:

The “nutrition labels” and other transparency initiatives from Twilio and Salesforce mark an important shift toward fostering trust in AI. By openly disclosing their AI practices and data usage, these companies aim to address privacy and ethical concerns. The movement reflects a growing recognition that transparency is pivotal to customer trust in AI technologies. The market will likely see wider adoption of such measures, encouraging responsible AI deployment and collaboration on effective regulatory frameworks.