TL;DR:

- Google Cloud steps up its efforts to stay at the forefront of the generative AI boom.

- Vertex AI updates unveiled at the Cloud Next conference include improved models for text, image, and code generation.

- Third-party models, such as Anthropic’s Claude 2 and Meta’s Llama 2, are integrated into the platform, giving businesses more choice.

- Expanded language support and a larger context window for the PaLM 2 language model.

- Ethical and legal challenges persist regarding copyright and data ownership.

- Introduction of Vertex AI Search and Vertex AI Conversation for AI-powered chatbots and search applications.

Main AI News:

Generative AI continues to spread through the corporate world. More than half of the CEOs surveyed in a recent joint study by Fortune and Deloitte say they are experimenting with AI-generated text, images, and other data, and a McKinsey report finds that about a third of organizations now use generative AI regularly in at least one business function.

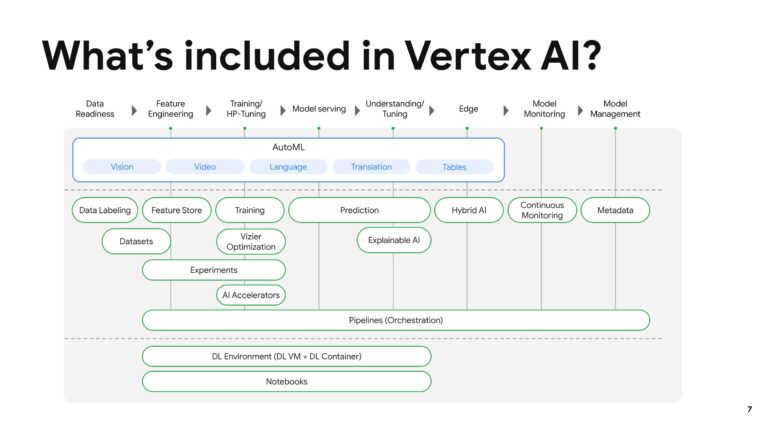

With a market opportunity this large and still growing, it’s no surprise that Google Cloud is working hard to stay competitive. At its annual Cloud Next conference, Google announced a series of upgrades to Vertex AI, its cloud platform for building, training, and deploying machine learning models. The updates include improved models for text, image, and code generation, along with new third-party models from companies such as Anthropic and Meta, as Google tries to keep pace with changing customer demands.

June Yang, Google’s VP of Cloud AI and Industry Solutions, described the platform’s philosophy: “With Vertex, we embrace an open ecosystem strategy, collaborating with a diverse range of partners to provide customers with options and adaptability. Our generative AI approach centers on enterprise readiness, underpinned by robust data governance, responsible AI security, and more.”

On the model front, Google says it has improved the quality of its Codey code-generation model by 25% in “major supported languages.” Imagen, its image-generation model, has been updated to produce higher-quality images and to support Style Tuning, which lets customers create images that match their brand using as few as ten reference images.

Elsewhere, Google’s PaLM 2 language model now supports 38 languages in general availability and more than 100 in preview, along with an expanded 32,000-token context window. The context window, measured in tokens (small units of text such as whole words or word fragments), determines how much text the model can take into account before generating further output. For perspective, 32,000 tokens is roughly 25,000 words, or about 80 pages of double-spaced text.
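The tokens-to-words-to-pages conversion above is simple arithmetic, and a short Python sketch makes its assumptions explicit. The ratios used here, about 0.75 English words per token and about 300 words per double-spaced page, are common rules of thumb assumed for illustration, not figures published by Google; real token counts depend on the tokenizer and the language. The function name `estimate_capacity` is hypothetical.

```python
# Rule-of-thumb ratios (assumptions for illustration, not Google's figures).
WORDS_PER_TOKEN = 0.75  # typical for English text; varies by tokenizer and language
WORDS_PER_PAGE = 300    # roughly one double-spaced page


def estimate_capacity(tokens: int,
                      words_per_token: float = WORDS_PER_TOKEN,
                      words_per_page: int = WORDS_PER_PAGE) -> tuple[int, int]:
    """Return (approximate words, approximate pages) that fit in `tokens`."""
    words = round(tokens * words_per_token)  # convert tokens to words
    pages = round(words / words_per_page)    # convert words to pages
    return words, pages


if __name__ == "__main__":
    words, pages = estimate_capacity(32_000)
    print(f"32,000 tokens ~ {words:,} words ~ {pages} double-spaced pages")
```

With these ratios, 32,000 tokens works out to about 24,000 words and 80 pages, in the same range as the figures quoted above. Adjusting `words_per_token` shows how sensitive such estimates are to the tokenizer.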

Anthropic’s Claude 2 offers a much larger context window of 100,000 tokens. According to Nenshad Bardoliwalla, Vertex AI’s product leader, Google settled on 32,000 tokens to strike a balance between capability, cost, and competitive performance.

Google is also adding third-party models to Vertex AI’s Model Garden, including Claude 2, Llama 2, and Falcon LLM, to give customers more tailored options. The move is aimed, in part, at Amazon Bedrock, an AWS service for building generative AI-powered applications.

Along the same lines, Google is introducing Extensions and data connectors for Vertex AI, similar to the plugins OpenAI and Microsoft offer for their AI models. Extensions let developers connect models in the Model Garden to real-time or proprietary data and even have them carry out actions on a user’s behalf. Data connectors, meanwhile, help ingest enterprise and third-party data from platforms such as Salesforce and Confluence.

Vertex AI also now supports Ray, an open-source, Python-based compute framework for scaling AI and Python workloads, adding to the platform’s range of supported frameworks.

Despite this progress, ethical and legal questions remain. Copyright and ownership are chief among them: models like PaLM 2 and Imagen “learn” from existing data, often drawn from copyrighted web sources. Google says it runs data governance reviews to check for copyright compliance, but who owns the data and whether AI-generated content can be copyrighted are still unsettled questions.

Vertex AI is also adding two new products, Vertex AI Search and Vertex AI Conversation, aimed at the fast-growing market for AI-powered chatbots and search. The tools help developers build interactive search engines, chatbots, and voicebots for uses such as food ordering, banking assistance, and semi-automated customer service.

Both products include features such as multi-turn search and conversation summarization to improve user interactions. Playbook, a feature of Vertex AI Conversation, lets users define responses and transactions in natural language so that the bot handles tasks in a more human-like way. Grounding, a feature that ties model outputs to a company’s own data, is intended to make generated content more accurate and reliable.

Even so, problems such as hallucination (confident but incorrect output) and toxicity persist across generative AI. Google aims to mitigate them by checking model outputs against safety attributes and by grounding responses in company data.

Conclusion:

Google’s upgrades to Vertex AI show its determination to lead in the fast-growing generative AI market. By improving its own models, adding third-party options, and building in safety and grounding features, Google is positioning Vertex AI as a platform that adapts to businesses’ changing needs and is strengthening its hand against rivals such as AWS. The legal and ethical questions around copyright and data ownership, however, remain unresolved.