TL;DR:

- Large language models (LLMs) are gaining traction for problem-solving.

- LLMs excel in code generation, instruction following, and diverse problem-solving contexts.

- Contemporary research emphasizes linear reasoning and methodical solution search.

- Algorithm of Thoughts (AoT) is a novel approach for enhancing LLM reasoning.

- AoT uses algorithmic examples in the prompt to broaden the exploration of ideas.

- AoT reduces query requests while rivaling multi-query tactics.

- An LLM instructed with an algorithm through AoT can potentially outperform that algorithm itself.

- AoT reshapes reasoning approaches and bridges LLMs and algorithmic thinking.

Main AI News:

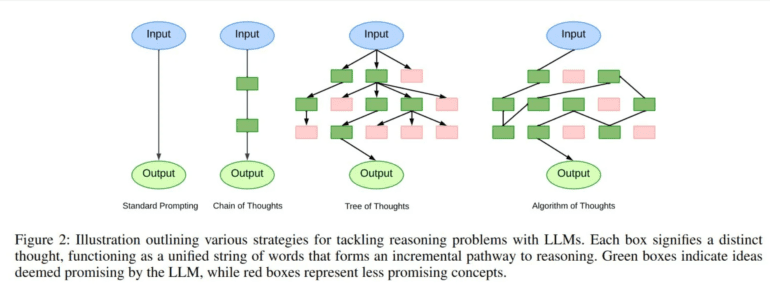

Large language models (LLMs) have advanced rapidly in recent years, showing strong potential across a wide range of problem-solving tasks, from code generation to instruction following and general problem solving. Early models answered questions directly, while more recent research has moved toward linear reasoning paths. Modern techniques break a complex problem into smaller, more manageable parts, which makes a systematic search for the solution easier. These efforts are supplemented by external mechanisms that alter token generation by modifying the context.

Current research mostly relies on an external mechanism that halts the generative process, modifies it, and then resumes it. The aim is to improve the reasoning capacity of LLMs. The downside is a larger number of queries, which brings higher costs, greater memory requirements, and more computational overhead.
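To make that cost concrete, here is a minimal sketch of such an external control loop. It assumes a hypothetical `fake_llm` stub in place of a real model call, and the breadth, depth, and pruning rule are illustrative choices, not values reported by the researchers. The only point is that every halt-and-resume step costs one more query.

```python
from dataclasses import dataclass


@dataclass
class QueryCounter:
    """Counts how many times the (stubbed) model is called."""
    calls: int = 0

    def fake_llm(self, prompt: str) -> str:
        # Hypothetical stand-in for a real LLM call; it returns a marker only.
        self.calls += 1
        return f"step {self.calls}"


def external_search(counter, problem, breadth=3, depth=3, keep=1):
    """Halt, modify, resume: an outside controller drives generation.

    At each level, every kept partial solution is extended `breadth` times
    (one query each). The controller then keeps `keep` candidates; here it
    simply keeps the first ones, because the stub produces no real scores.
    """
    frontier = [problem]
    for _level in range(depth):
        candidates = []
        for partial in frontier:
            for _branch in range(breadth):
                candidates.append(partial + "\n" + counter.fake_llm(partial))
        frontier = candidates[:keep]
    return frontier[0]


multi = QueryCounter()
external_search(multi, "Solve the puzzle.", breadth=3, depth=3, keep=1)
print("multi-query calls:", multi.calls)    # 3 levels x 1 kept x 3 branches = 9

single = QueryCounter()
single.fake_llm("Solve the puzzle.")
print("single-query calls:", single.calls)  # 1
```

Even this small search needs nine calls where a single-query method needs one, and the gap widens quickly as breadth, depth, or the number of kept candidates grows.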

To address these challenges, researchers from Virginia Tech and Microsoft have introduced the Algorithm of Thoughts (AoT). The approach steers LLMs along algorithmic reasoning pathways, opening a new mode of in-context learning. By exploiting the innate recurrence dynamics of LLMs through algorithmic examples, the technique broadens the exploration of ideas while needing only one or a few queries.

The core idea of AoT is to guide LLMs with algorithmic examples that capture the essence of exploratory thinking. This reduces the number of queries and also broadens the range of ideas the LLM explores. AoT outperforms earlier single-query methods and is on par with recent multi-query strategies that rely on extensive tree search algorithms.
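As a rough illustration of what an "algorithmic example" could look like, the sketch below records the trace of a depth-first search, dead ends included, and places it in a single prompt ahead of a new problem. The toy subset-sum task, the trace wording, and the helper names `dfs_trace` and `build_prompt` are assumptions made for this example; they are not the prompts or tasks used by the AoT authors.

```python
def dfs_trace(numbers, target):
    """Depth-first search for a subset of `numbers` that sums to `target`.

    Assumes positive integers, so a branch is pruned once it overshoots.
    Returns (solution, trace); the trace lists every step, dead ends included.
    """
    trace = []

    def visit(index, chosen, total):
        indent = "  " * len(chosen)
        if total == target:
            trace.append(f"{indent}sum {total} = target, solved with {chosen}")
            return list(chosen)
        if total > target or index == len(numbers):
            trace.append(f"{indent}sum {total}: dead end, backtrack")
            return None
        for i in range(index, len(numbers)):
            trace.append(f"{indent}try {numbers[i]} (sum so far {total})")
            found = visit(i + 1, chosen + [numbers[i]], total + numbers[i])
            if found is not None:
                return found
        return None

    return visit(0, [], 0), trace


def build_prompt(example, new_problem):
    """Build ONE prompt: a worked search trace, then the new problem."""
    ex_numbers, ex_target = example
    new_numbers, new_target = new_problem
    _solution, trace = dfs_trace(ex_numbers, ex_target)
    return "\n".join([
        "Search step by step. Explore options and backtrack from dead ends.",
        "",
        f"Problem: pick numbers from {ex_numbers} that sum to {ex_target}.",
        *trace,
        "",
        f"Problem: pick numbers from {new_numbers} that sum to {new_target}.",
    ])


solution, trace = dfs_trace([5, 3, 4], 7)
print(solution)  # [3, 4]
print(build_prompt(([5, 3, 4], 7), ([2, 9, 6, 1], 8)))
```

The whole exploration, including the abandoned branches, travels inside one prompt, so the model is asked to continue the search itself rather than being paused and resumed by an outside controller.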

That a method confined to a single query can match recent multi-query approaches driven by sophisticated tree search gives AoT a distinct edge. It also suggests that LLMs have an innate ability to weave intuition into optimized search procedures.

Conclusion:

The Algorithm of Thoughts marks a notable development in the landscape of language models. The approach improves the reasoning capabilities of large language models while cutting the number of queries, and it has the potential to change how problem-solving is approached. As more LLM applications adopt the Algorithm of Thoughts, we can expect more efficient and sophisticated solutions across a wide range of industries.