TL;DR:

- ZeRO++ by DeepSpeed addresses challenges posed by resource-intensive AI model training.

- It accelerates large model pretraining, offering higher throughput and broader accessibility.

- Integrated with DeepSpeed-Chat, it doubles the speed of reinforcement learning from human feedback (RLHF) training.

- ZeRO++ maintains comparable throughput even in low-bandwidth environments, benefiting smaller organizations.

- This advancement paves the way for faster, more cost-effective training of large AI models, which could accelerate innovation.

- Smaller businesses can compete with industry giants, leveraging AI for personalized customer experiences.

Main AI News:

In the fast-moving landscape of AI, large models such as ChatGPT and multimodal generative models like DALL-E have emerged as game-changers. Their utility is undeniable, but they come with a significant caveat: training them demands enormous amounts of compute and memory. This has made such models hard to access, especially for smaller businesses without extensive tech infrastructure. To address this, DeepSpeed has released ZeRO++, a set of communication optimizations built on ZeRO. It targets two scenarios where ZeRO's efficiency drops: small per-GPU batch sizes and low-bandwidth clusters.

The Inner Workings of ZeRO++

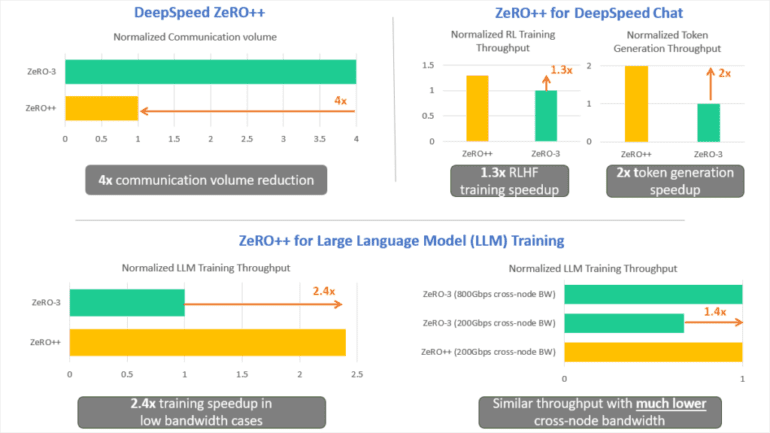

ZeRO++ speeds up large model pretraining beyond what its predecessor, ZeRO, achieves, and makes such training feasible in a broader range of environments. It also improves the efficiency of training dialogue models with reinforcement learning from human feedback (RLHF): when integrated with DeepSpeed-Chat, it makes RLHF training twice as fast as with the original ZeRO.

Even in bandwidth-constrained clusters, ZeRO++ delivers throughput comparable to that of clusters with much higher bandwidth. This is a boon for companies with limited resources, giving smaller organizations an efficient way to train very large models without needing top-tier networking.
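For readers who want to try it, ZeRO++ is switched on through the `zero_optimization` section of a DeepSpeed configuration on top of ZeRO stage 3. The sketch below follows the keys shown in DeepSpeed's ZeRO++ tutorial (quantized weights, hierarchical partitioning, quantized gradients); the specific values, including the assumed 8 GPUs per node and micro-batch size of 1, are illustrative placeholders, and key names should be checked against the DeepSpeed version you use.

```python
import json

# Assumption: 8 GPUs per node; set this to your cluster's actual value.
GPUS_PER_NODE = 8

# Minimal sketch of a DeepSpeed config enabling ZeRO++ features on ZeRO-3.
# Values are illustrative placeholders, not tuned recommendations.
ds_config = {
    "train_micro_batch_size_per_gpu": 1,  # placeholder micro-batch size
    "zero_optimization": {
        "stage": 3,  # ZeRO++ builds on ZeRO stage 3
        # Quantize weights before the all-gather to cut communication volume.
        "zero_quantized_weights": True,
        # Keep a secondary weight partition within each node so backward
        # all-gathers can stay on fast intra-node links.
        "zero_hpz_partition_size": GPUS_PER_NODE,
        # Quantize gradients during the gradient reduce-scatter.
        "zero_quantized_gradients": True,
        # Overlap communication with computation and keep gradients contiguous.
        "overlap_comm": True,
        "contiguous_gradients": True,
    },
}

if __name__ == "__main__":
    # Print the config as JSON, e.g. to save as ds_config.json.
    print(json.dumps(ds_config, indent=2))
```

The same dictionary can be passed directly as `config=ds_config` to `deepspeed.initialize(...)` or written to a JSON file; keeping it in Python makes it easy to derive `zero_hpz_partition_size` from the cluster layout.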

Paving the Way for (More Efficient) Innovation

ZeRO++ opens exciting prospects for the AI and deep learning community. By minimizing communication overhead and making better use of available hardware, it significantly improves training efficiency and accessibility. With quicker and more cost-effective training, large AI models could become accessible to businesses across many industries, regardless of their size or profile.

Particularly notable is its integration with RLHF workflows, which enables faster iteration on projects like chatbots and virtual assistants, products that customers now expect regardless of a business's scale. As AI becomes part of every facet of business operations, this advancement could support deeper integration, higher productivity, and the personalized experiences customers want. Smaller enterprises would gain the ability to compete with their larger counterparts, using cutting-edge tools to make their mark on the AI-driven landscape.

Conclusion:

ZeRO++ represents a significant step toward democratizing AI, making large model training accessible to businesses of all sizes. Its efficiency gains and faster RLHF training open doors to quicker innovation, fostering a more competitive market where even smaller players can thrive.