TL;DR:

- DeepMind introduces Reinforced Self-Training (ReST) to improve large language models (LLMs).

- ReST aligns LLMs with human preferences and demonstrates its efficacy in machine translation (MT).

- The methodology generates synthetic training data and fine-tunes LLMs using a reward model.

- Machine translation proves an ideal benchmark due to its high impact and well-defined evaluation.

- ReST’s credibility is enhanced by utilizing reliable scoring and evaluation methods.

- Tests across diverse datasets and language pairs showcase ReST’s versatility.

- Human evaluations validate ReST’s alignment with human preferences.

- ReST significantly enhances translation quality and outperforms online reinforcement learning.

- Its efficiency stems from generating training data offline, allowing for data reuse.

- ReST’s impact extends to various generative learning scenarios, ushering in a new era in reinforcement learning from human feedback (RLHF).

Main AI News:

In a groundbreaking development on August 17, 2023, researchers from DeepMind, a subsidiary of Google, unveiled a pioneering strategy aimed at enhancing the performance of large language models (LLMs) by aligning them with human preferences. This remarkable methodology, named Reinforced Self-Training (ReST), has been strategically tested within the realm of machine translation (MT).

ReST draws its inspiration from the ever-evolving realm of batch reinforcement learning (RL), presenting a paradigm shift in the way LLMs are fine-tuned. Initially, the LLM generates synthetic training data, which is then employed for fine-tuning. This process is expertly guided by a reward model that rigorously evaluates the LLM’s performance and offers constructive feedback to steer its learning journey.

But why did DeepMind opt for machine translation as the litmus test for ReST? The answer lies in the profound impact of LLMs on this domain. Machine translation offers robust benchmarks and a meticulously defined evaluation procedure, making it an ideal litmus test for assessing the efficacy of ReST.

What further enhances the credibility of this research is the presence of several reliable scoring and evaluation methods, such as Metric X, BLEURT, and COMET, which serve as robust reward models. These objective evaluation tools underscore ReST’s effectiveness in a demonstrable manner.

To ensure the versatility of their approach, the DeepMind team subjected ReST to rigorous testing across diverse benchmark datasets, including IWSLT 2014, WMT 2020, and an internal Web Domain dataset. They deliberately selected different language pairs for each dataset to showcase the generalizability of the results.

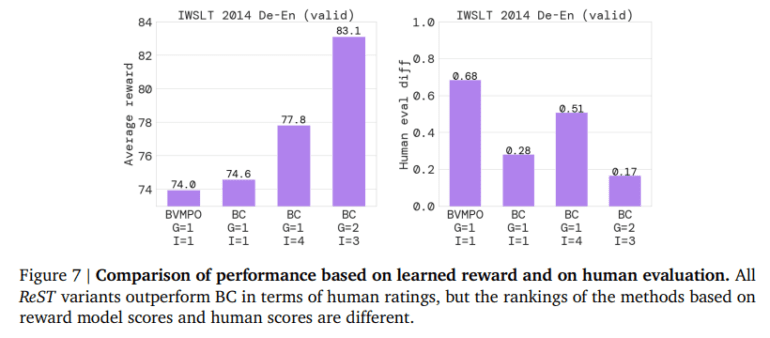

In addition to automated metrics, human evaluations played a pivotal role in validating ReST’s alignment with human preferences. These evaluations involved human assessors rating translations on a scale from 0 to 6, adding a qualitative dimension to the assessment process.

ReST: A Quantum Leap in Translation Quality

The results of these comprehensive evaluations left no room for doubt – ReST stands as a formidable force in improving translation quality. This assertion is substantiated not only by automated metrics but also by the discerning judgments of human evaluators across MT benchmarks.

What truly sets ReST apart is its unparalleled efficiency. It outshines online reinforcement learning methods in terms of both sample and compute efficiency by generating training data offline, a feat that allows for data reutilization and optimization.

As underscored by techno-optimist and AI accelerationist Far El in a tweet, ReST signifies “1 more step towards fully autonomous machines and the beginning of the end of manual finetuning“.

Yet, the impact of ReST transcends the realm of machine translation. Its adaptability shines through in various generative learning scenarios, encompassing summarization, turn-based dialogue, and generative audio and video models. The authors of this groundbreaking research emphasize that ReST heralds a new era in reinforcement learning from human feedback (RLHF) across a broad spectrum of language-related tasks.

Conclusion:

DeepMind’s pioneering Reinforced Self-Training methodology represents a monumental advancement in language models. Its ability to improve translation quality, enhance efficiency, and adapt to diverse generative learning tasks positions it as a transformative force in the market, paving the way for more efficient and high-quality human-AI interactions across various language-related domains. Businesses and industries relying on language models should closely monitor and integrate ReST to stay at the forefront of innovation and meet evolving customer demands.