TL;DR:

- Google DeepMind’s OPRO leverages Large Language Models (LLMs) as optimizers.

- OPRO describes optimization tasks in plain natural language instead of formal mathematical notation.

- It employs an iterative solution generation process guided by natural language prompts.

- OPRO was demonstrated on classic optimization problems such as linear regression and the traveling salesman problem.

- It can also optimize prompts themselves, searching for instructions that improve task accuracy in natural language processing.

- In the reported experiments, OPRO-optimized prompts outperformed human-designed ones by up to 8% on GSM8K and by up to 50% on Big-Bench Hard tasks.

Main AI News:

As Artificial Intelligence evolves, its subfields, including Natural Language Processing, Natural Language Generation, Natural Language Understanding, and Computer Vision, are growing in prominence. Large Language Models (LLMs) in particular have recently been put to a new use: acting as optimizers. Optimization underpins work in many industries and scenarios, and it has traditionally relied on derivative-based methods, which use the gradient of an objective function to decide where to search next.

However, many real-world problems offer no usable gradient, which makes these methods hard to apply. To address this, researchers at Google DeepMind have introduced “Optimization by PROmpting” (OPRO), a simple yet effective technique that uses LLMs as optimizers. Instead of requiring a formal mathematical formulation, OPRO describes the optimization problem in natural language, making the process more accessible and intuitive.

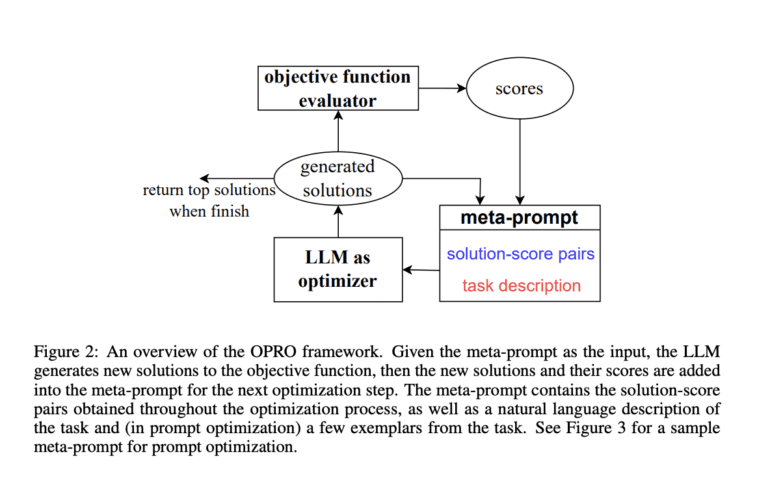

OPRO starts by describing the optimization problem in natural language rather than in an intricate mathematical formulation. It then generates solutions iteratively: at each optimization step, the LLM produces new candidate solutions from a prompt, which the paper calls a meta-prompt. This prompt contains the problem description together with previously generated solutions and their corresponding scores, giving the model a basis for further refinement.
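A minimal Python sketch of how such a meta-prompt might be assembled from scored solutions. The function name `build_meta_prompt`, the prompt wording, the `max_pairs` cap, and the assumption that higher scores are better are all illustrative choices, not the exact template used by the DeepMind researchers.

```python
from typing import List, Tuple


def build_meta_prompt(
    problem_description: str,
    history: List[Tuple[str, float]],
    max_pairs: int = 20,
) -> str:
    """Assemble an OPRO-style meta-prompt from past (solution, score) pairs.

    Assumes higher scores are better. Only the `max_pairs` best pairs are kept,
    and they are listed from worst to best so the strongest solutions appear
    right before the request for a new one.
    """
    # Keep the top-scoring pairs (works for max_pairs == 0 too).
    top = sorted(history, key=lambda pair: pair[1], reverse=True)[:max_pairs]
    lines = [problem_description, "", "Previous solutions and their scores:"]
    for solution, score in reversed(top):  # ascending order of score
        lines.append(f"solution: {solution}\nscore: {score:.2f}")
    lines.append("")
    lines.append(
        "Propose a new solution that is different from all of the above "
        "and has a higher score."
    )
    return "\n".join(lines)


# Example usage with made-up solutions and scores.
history = [("x = 3", 0.4), ("x = 7", 0.9), ("x = 5", 0.7)]
print(build_meta_prompt("Find x that maximizes f(x).", history, max_pairs=2))
```

Capping the number of pairs keeps the prompt within the model's context window as the history grows; the text the LLM writes in reply would then be parsed into new candidates and scored.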

Each new candidate is then evaluated, and the solutions and their scores are added to the prompt for the next step, so the quality of the results can improve over successive iterations. To illustrate OPRO's effectiveness, the researchers applied it to two classic optimization problems, linear regression and the traveling salesman problem. On these well-known benchmarks, OPRO found good solutions for the instances tested.
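The propose-evaluate-feed-back loop can be sketched on a toy version of the linear regression task. This is a minimal sketch under stated assumptions: the synthetic data, the integer (w, b) search space, the squared-error objective, and especially `mock_llm_propose`, a hypothetical stand-in that randomly perturbs the best solutions instead of calling an LLM, are illustrative and not taken from DeepMind's implementation.

```python
import random
from typing import List, Tuple

Solution = Tuple[int, int]  # integer (w, b) for the line y = w * x + b


def make_data(w_true: int, b_true: int, n: int = 50, seed: int = 0):
    """Synthetic 1-D data: y = w_true * x + b_true + small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [w_true * x + b_true + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def squared_error(sol: Solution, xs: List[float], ys: List[float]) -> float:
    """Objective to minimize: sum of squared residuals of the line (w, b)."""
    w, b = sol
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def mock_llm_propose(history, k: int, rng: random.Random) -> List[Solution]:
    """HYPOTHETICAL stand-in for the LLM call so the sketch runs offline.

    It randomly perturbs the three best pairs seen so far. In OPRO, an LLM
    would instead read the scored pairs from a meta-prompt and write new ones.
    """
    best = sorted(history, key=lambda pair: pair[1])[:3]
    proposals = []
    for _ in range(k):
        (w, b), _loss = rng.choice(best)
        proposals.append((w + rng.randint(-2, 2), b + rng.randint(-2, 2)))
    return proposals


def optimize(xs, ys, init: List[Solution], steps: int = 60,
             per_step: int = 4, seed: int = 1):
    """Iterate: propose candidates, score them, and feed the scores back in."""
    rng = random.Random(seed)
    history = [(sol, squared_error(sol, xs, ys)) for sol in init]
    seen = set(init)
    for _ in range(steps):
        for sol in mock_llm_propose(history, per_step, rng):
            if sol in seen:  # skip solutions that were already evaluated
                continue
            seen.add(sol)
            history.append((sol, squared_error(sol, xs, ys)))
    return min(history, key=lambda pair: pair[1])  # (best solution, its loss)


xs, ys = make_data(w_true=3, b_true=-2)
best_solution, best_loss = optimize(xs, ys, init=[(0, 0), (10, 10), (-5, 5)])
print(best_solution, round(best_loss, 3))
```

Replacing `mock_llm_propose` with a real model call would mean sending a meta-prompt built from `history` and parsing (w, b) pairs out of the reply; the evaluate-and-record half of the loop would stay the same.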

OPRO also goes beyond conventional optimization by optimizing prompts themselves. Here the goal is to find an instruction that maximizes task accuracy, which matters in natural language processing because the structure and wording of a prompt can strongly influence a model's output.
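In prompt optimization, the "solution" is an instruction and its score is the accuracy it yields on a small set of training examples. The Python sketch below shows only that scoring step, under stated assumptions: `instruction_accuracy`, `rank_instructions`, and `toy_answer_fn` are hypothetical names, accuracy is assumed to be exact-match, and the toy answer function merely simulates a scoring model so the example runs without an LLM.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (question, expected answer)


def instruction_accuracy(instruction: str, examples: List[Example],
                         answer_fn: Callable[[str, str], str]) -> float:
    """Fraction of examples answered correctly (exact match) under an instruction.

    `answer_fn(instruction, question)` stands in for a call to a scoring LLM.
    """
    if not examples:
        return 0.0
    correct = sum(answer_fn(instruction, q).strip() == a.strip() for q, a in examples)
    return correct / len(examples)


def rank_instructions(candidates: List[str], examples: List[Example],
                      answer_fn: Callable[[str, str], str]) -> List[Tuple[str, float]]:
    """Score every candidate instruction; the best-scoring one comes first."""
    scored = [(ins, instruction_accuracy(ins, examples, answer_fn)) for ins in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def toy_answer_fn(instruction: str, question: str) -> str:
    """HYPOTHETICAL scorer: answers 'a+b' correctly only if the instruction says 'sum'."""
    a, b = question.split("+")
    return str(int(a) + int(b)) if "sum" in instruction.lower() else "0"


examples = [("2+3", "5"), ("10+4", "14"), ("7+0", "7")]
candidates = ["Answer the question.", "Compute the sum of the two numbers."]
for instruction, acc in rank_instructions(candidates, examples, toy_answer_fn):
    print(f"{acc:.2f}  {instruction}")
```

In an OPRO-style loop, these (instruction, accuracy) pairs are what would be written back into the meta-prompt so the optimizer model can propose new, higher-scoring instructions.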

The research team's experiments show OPRO-optimized prompts outperforming their human-designed counterparts: by up to 50% on Big-Bench Hard tasks and by up to 8% on the GSM8K benchmark. These results underscore OPRO's potential as an AI-driven optimization technique.

Conclusion:

OPRO's use of Large Language Models as optimizers marks a significant step for the field of optimization. Its ability to express problems in natural language, find good solutions, and optimize prompts could substantially improve optimization outcomes across industries, making it a key player in the evolving landscape of AI-driven optimization.