TL;DR:

- MADLAD-400 is a groundbreaking 2.8T token web-domain dataset encompassing 419 languages.

- NLP training data has traditionally been predominantly English; MADLAD-400 addresses the scarcity of diverse data for less common languages.

- This dataset was meticulously curated with a manual content audit, ensuring high-quality data across linguistic boundaries.

- Researchers prioritized transparency, providing comprehensive documentation of the auditing process.

- Filters and checks were implemented to remove problematic content, enhancing data quality.

- MADLAD-400 is inclusive, embracing underrepresented languages, which can lead to more equitable NLP technologies.

- Extensive experiments demonstrate its effectiveness in elevating translation quality for a wide range of languages.

Main AI News:

In the dynamic realm of Natural Language Processing (NLP), progress in machine translation and language models has traditionally hinged on the availability of extensive training data, predominantly in English. However, researchers and practitioners face a formidable impediment: a glaring dearth of diverse, high-quality training data for less widely used languages. This bottleneck hinders the advancement of NLP technologies and leaves many linguistic communities worldwide underserved. Sensing the urgency of this challenge, a dedicated research team set out to address it, and the result is MADLAD-400.

To appreciate the magnitude of MADLAD-400’s significance, it’s imperative to scrutinize the prevailing landscape of multilingual NLP datasets. Historically, researchers have leaned heavily on data scraped from the web, drawn from numerous sources, to nourish the foundations of machine translation and language models. While this approach has yielded impressive outcomes for languages brimming with online content, it stumbles when confronted with the less-traveled linguistic terrains.

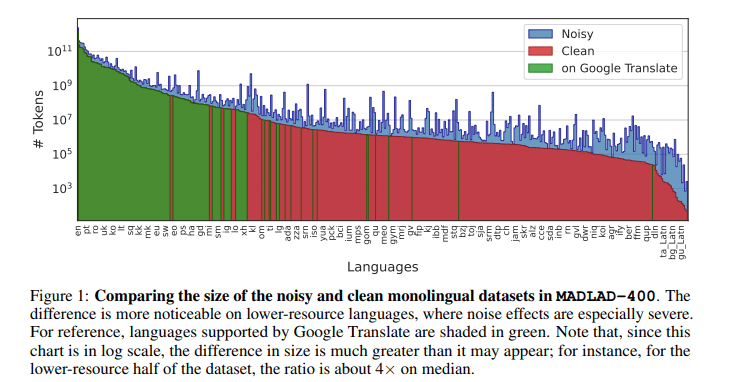

The architects behind MADLAD-400 discerned the constraints of this conventional methodology. They recognized that web-scraped data often arrives fraught with challenges: noise, inaccuracies, and content of fluctuating caliber, to name a few. These problems are exacerbated for languages dwelling in the digital periphery.

In defiance of these hurdles, the research team embarked on a mission to forge a multilingual dataset that not only spans a diverse linguistic spectrum but also upholds the loftiest benchmarks of quality and ethical content. The fruit of their labor materialized as MADLAD-400, a dataset poised to redefine the landscape of training and developing NLP models for multilingual applications.

MADLAD-400 distinguishes itself as a testament to the dedication and meticulousness of its creators. What sets this dataset apart is the rigorous auditing process it endured. Unlike many multilingual datasets, MADLAD-400 did not rely solely on automated web scraping. In addition, it underwent an exhaustive manual content audit across 419 languages.

The audit was no trifling task. It demanded the proficiency of individuals well-versed in diverse languages, as the research team diligently scrutinized and evaluated data quality, transcending linguistic boundaries. This hands-on approach fortified the dataset’s adherence to the highest standards of quality.

The researchers also documented their auditing process in detail. This transparency is an invaluable asset for dataset users, offering a glimpse into the steps taken to ensure data quality. The documentation serves as both a guidepost and a bedrock for reproducibility, an indispensable facet of scientific research.

Beyond manual audits, the research team engineered filters and checks to bolster data quality further. They identified and removed problematic content, including copyrighted material, hate speech, and personal information. This proactive stance on data cleansing reduces the risk of unsavory content infiltrating the dataset and gives researchers greater confidence in using it.
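To make the idea of automated filters and checks more concrete, here is a minimal Python sketch of document-level cleaning. It is an illustration only, not the MADLAD-400 team's actual pipeline: the function names, the thresholds (`min_chars`, `max_repeated`), and the simple email pattern are all assumptions chosen for this example.

```python
import re
from collections import Counter

# Hypothetical pattern for illustration; real personal-information
# detection needs far more than a single email regex.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("<EMAIL>", text)


def repeated_line_fraction(text: str) -> float:
    """Fraction of non-empty lines whose content occurs more than once."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return 0.0
    counts = Counter(lines)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(lines)


def keep_document(text: str, min_chars: int = 20, max_repeated: float = 0.5) -> bool:
    """Assumed heuristics: drop very short or highly repetitive documents."""
    stripped = text.strip()
    if len(stripped) < min_chars:
        return False
    return repeated_line_fraction(stripped) <= max_repeated


def clean_corpus(docs: list[str]) -> list[str]:
    """Filter out low-quality documents, then redact emails in the rest."""
    return [redact_emails(d) for d in docs if keep_document(d)]


if __name__ == "__main__":
    sample = [
        "too short",
        "menu\nmenu\nmenu\nmenu\nmenu\nmenu",
        "A reasonably long sentence written by user@example.org.",
    ]
    for doc in clean_corpus(sample):
        print(doc)
```

Heuristics like these are cheap to run at web scale, but they are blunt instruments, which is one reason a manual audit of the kind described earlier remains valuable alongside them.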

Moreover, MADLAD-400 underscores the research team’s unwavering commitment to inclusivity. It encompasses a kaleidoscope of languages, amplifying the voices of linguistic communities often relegated to the fringes of NLP research. MADLAD-400 heralds the era of more inclusive and equitable NLP technologies by embracing languages beyond the mainstream.

While the creation and curation of MADLAD-400 stand as formidable achievements in their own right, the dataset’s true worth lies in its real-world applications. The research team conducted exhaustive experiments to showcase MADLAD-400’s efficacy in training large-scale machine translation models.

The results are encouraging. According to the team's experiments, models trained on MADLAD-400 achieve improved translation quality across a broad spectrum of languages, illuminating the dataset's potential to propel the field of machine translation to new heights. The dataset lays a robust foundation for models that bridge language gaps and foster communication across linguistic divides.

Conclusion:

MADLAD-400 represents a pivotal development in the multilingual NLP landscape. Its comprehensive coverage, meticulous curation, and improved translation quality promise to reshape the market by making NLP technologies more inclusive and effective for a global audience. Businesses and organizations can leverage this dataset to enhance their language-related applications, opening up new opportunities for diverse linguistic communities.