TL;DR:

- Microsoft Research Asia unveils InstructDiffusion, a revolutionary vision framework.

- It offers a unified interface for various computer vision tasks.

- InstructDiffusion reimagines vision tasks as intuitive image manipulations.

- It operates in a flexible pixel space, aligning with human perception.

- The model responds to textual instructions for tasks like keypoint detection and segmentation.

- Powered by denoising diffusion probabilistic models (DDPM).

- Trained on triplets of instruction, source image, and target output.

- Supports RGB images, binary masks, and keypoints for diverse vision tasks.

Main AI News:

In a groundbreaking leap toward versatile, all-encompassing vision models, researchers at Microsoft Research Asia have introduced InstructDiffusion. This cutting-edge framework is poised to redefine the landscape of computer vision by providing a unified interface catering to a plethora of vision tasks. The paper titled “InstructDiffusion: A Generalist Modeling Interface for Vision Tasks” introduces a model with the capacity to seamlessly manage diverse vision applications concurrently.

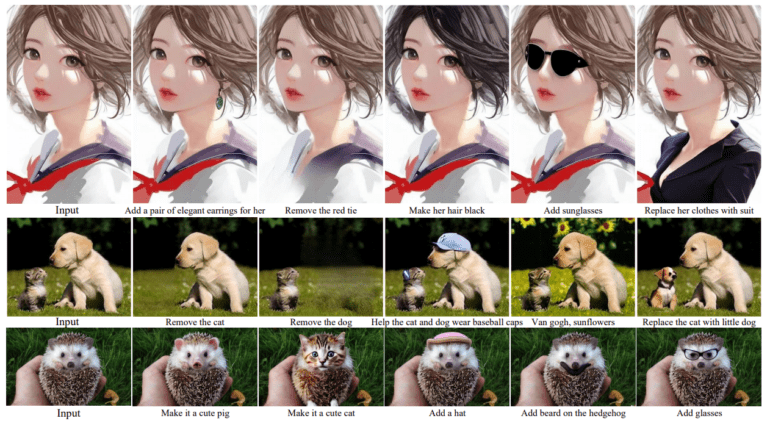

At the core of InstructDiffusion lies a pioneering approach: envisioning vision tasks as intuitive image manipulation processes guided by human input. In stark contrast to traditional methods reliant on predefined output categories or coordinates, InstructDiffusion operates within a malleable pixel space, aligning itself more closely with human perception.

This model is ingeniously designed to manipulate input images in response to textual instructions furnished by the user. For example, directives such as “encircle the man’s right eye in red” empower the model to excel in tasks like keypoint detection. Simultaneously, instructions like “apply a blue mask to the rightmost dog” find their purpose in segmentation tasks.

Beneath the surface of this revolutionary framework lie denoising diffusion probabilistic models (DDPM), which yield pixel outputs. The training dataset comprises triplets, each encompassing an instruction, a source image, and a target output image. The model is meticulously calibrated to tackle three primary output types: RGB images, binary masks, and keypoints. This extensive coverage spans a wide spectrum of vision tasks, including but not limited to segmentation, keypoint detection, image manipulation, and enhancement.

Conclusion:

InstructDiffusion represents a significant leap in the field of computer vision, bridging the gap between human instructions and AI capabilities. Its potential to handle a wide range of vision tasks with precision and adaptability positions it as a disruptive force in the market. This framework has the potential to revolutionize industries reliant on computer vision, from healthcare to autonomous vehicles, by providing a unified and user-friendly interface for complex tasks. Companies looking to harness the power of AI in their vision-related applications should closely monitor developments in InstructDiffusion and consider its integration into their strategies.