TL;DR:

- DeepMind introduces Optimization by PROmpting (OPRO) to optimize AI language models.

- OPRO uses natural language descriptions instead of formal math, making it adaptable.

- Large language models (LLMs) generate solutions guided by problem descriptions.

- OPRO enhances LLM prompts, improving task accuracy iteratively.

- Prompt engineering influences model output but is format and task-specific.

- OPRO opens new possibilities for ChatGPT, PaLM, and similar models.

- It enables LLMs to gradually generate optimal prompts, addressing low initial accuracy.

- Real-world tests show promising results in mathematical optimization problems.

- Similar prompts can yield different LLM behaviors, cautioning against anthropomorphism.

Main AI News:

In the realm of deep learning AI models, the reliance on optimization algorithms to ensure precision has been a longstanding practice. However, derivative-based optimizers often grapple with real-world complexities, leading to performance challenges. Enter Optimization by PROmpting (OPRO), a groundbreaking approach developed by DeepMind that leverages large language models (LLMs) as self-optimizers. What sets OPRO apart is its departure from formal mathematical definitions, using natural language to articulate optimization tasks.

As DeepMind’s researchers explain, OPRO starts with a “meta-prompt” comprising a task description, problem examples, prompt instructions, and solutions. The LLM then generates candidate solutions grounded in the problem description and previous solutions from the meta-prompt. These solutions are assessed and assigned quality scores, enriching the meta-prompt context for the subsequent round of solution generation. This iterative process continues until the LLM stops proposing better solutions.

The true power of LLMs for optimization lies in their proficiency in understanding natural language. Users can specify target metrics, like “accuracy,” and provide additional instructions, such as requesting concise and broadly applicable solutions. OPRO also capitalizes on LLMs’ ability to identify in-context patterns, allowing them to improve upon existing solutions without explicit instructions.

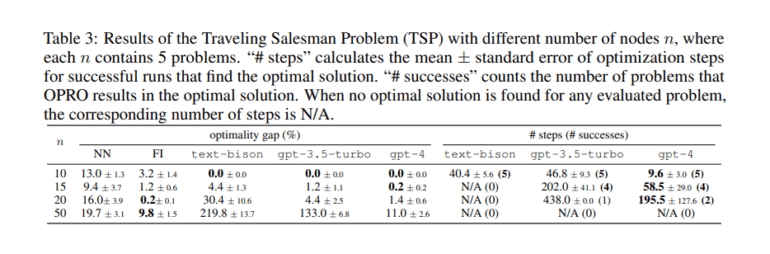

To validate OPRO’s effectiveness, it was tested on well-known mathematical optimization problems, such as linear regression and the “traveling salesman problem.” While it may not always be the most optimal approach, LLMs demonstrated the ability to grasp optimization directions on small-scale problems based on past optimization trajectories in the meta-prompt.

The impact of prompt engineering on model output was also evident. For instance, appending phrases like “let’s think step by step” can guide the model to outline problem-solving steps, leading to more accurate results. However, it’s crucial to note that LLM responses are highly dependent on the prompt format and task, emphasizing the need for model-specific and task-specific prompt formats.

The true potential of OPRO is in optimizing prompts for LLMs like OpenAI’s ChatGPT and Google’s PaLM, enabling them to achieve maximum task accuracy. This approach gradually generates new prompts that improve accuracy over the optimization process, addressing the challenge of low initial task accuracies.

In practical terms, consider using OPRO to find the optimal prompt for solving word-math problems. An “optimizer LLM” iteratively generates different optimization prompts, which are then evaluated by a “scorer LLM” based on problem examples. The best prompts, along with their scores, are incorporated into the meta-prompt, and the process continues until convergence. Remarkably, all LLMs in the evaluation consistently improved prompt generation through iterative optimization.

For instance, when testing OPRO with PaLM-2 on grade school math word problems, the model produced remarkable results, culminating in prompts like “Let’s do the math” for the highest accuracy. These results underscore the need to avoid anthropomorphizing LLMs, as seemingly similar prompts can yield divergent behaviors.

Nevertheless, OPRO offers a systematic approach to exploring the vast space of LLM prompts, ultimately finding the most effective ones for specific problems. Its real-world applications remain to be seen, but this research marks a significant stride in understanding the inner workings of LLMs and their potential to revolutionize AI optimization.

Conclusion:

DeepMind’s OPRO innovation showcases the potential of LLMs to optimize their own prompts using natural language, leading to more precise AI solutions. This breakthrough presents significant opportunities for the market, allowing businesses to tailor AI models for specific tasks with improved accuracy and efficiency. It underscores the importance of careful prompt engineering while highlighting the need to understand the nuances of LLM behavior for optimal results in various applications.