TL;DR:

- SambaNova unveils the SN40L, a chip designed to power 5-trillion-parameter models.

- SambaNova claims the chip cuts operational costs 30-fold compared with competitors.

- SambaNova provides a comprehensive AI ecosystem encompassing hardware and software.

- Customers retain ownership of their data and models.

- A subscription-based model offers cost predictability for AI projects.

- The SN40L chip maintains backward compatibility with previous generations.

Main AI News:

The rise of generative AI and large language models has driven a surge in demand for computing power. Since OpenAI released ChatGPT, the industry's appetite for processing power has grown rapidly, and meeting it often requires expensive GPUs. SambaNova aims to challenge that status quo with its new SN40L chip, which it says is designed to power a 5-trillion-parameter model while significantly reducing operational costs.

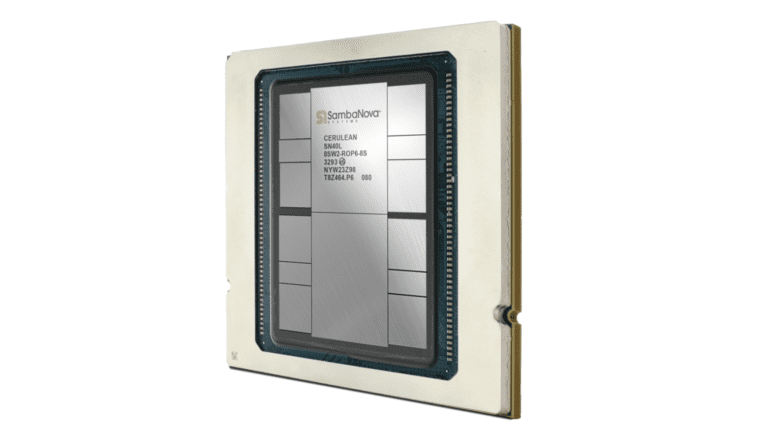

SambaNova may not be a household name like Google, Microsoft, or Amazon, but it has spent several years building a full AI stack spanning both hardware and software. Backed by investors including Intel Capital, BlackRock, and SoftBank Vision Fund, the company has raised more than $1 billion, according to Crunchbase. Today it unveils the SN40L, the fourth generation of its proprietary AI chips.

Rodrigo Liang, SambaNova’s co-founder and CEO, explains why the company builds its own chips: “We recognized the imperative of controlling the underlying hardware to achieve peak efficiency, especially in a landscape increasingly reliant on resource-intensive large language models.” Rather than the conventional brute-force approach of adding ever more hardware, Liang argues for a design tailored to the demands of very large language models.

“Our SN40L chip represents a monumental leap in efficiency, reducing the chip count required for running trillion-parameter models by a staggering 30 times,” claims Liang. He says competitors would need 50 to 200 chips for the same task, while SambaNova can do it with just eight.

Bold claims aside, SambaNova offers an integrated hardware and software stack intended to simplify building AI applications. Liang says, “We’re in the business of creating AI assets, enabling rapid model training based on proprietary data while ensuring that ownership remains with the customer. Your data, your model, your future.”

SambaNova sells its hardware and software together on a subscription basis, which gives customers more predictable costs for their AI projects. The SN40L is also backward compatible with the company’s previous-generation chips, which should make it easier for existing customers to upgrade.

Conclusion:

SambaNova’s SN40L promises a significant leap in the efficiency of running large language models. By sharply reducing the number of chips required and pairing them with a complete AI stack in which customers keep ownership of their data and models, SambaNova positions itself as a serious contender in the evolving AI market, offering enterprises a potentially more cost-effective way to put AI to work.