TL;DR:

- Adversarial evasion attacks pose a growing concern in AI.

- Existing defenses mainly target image data, leaving text and tabular data vulnerable.

- The Universal Robustness Evaluation Toolkit (URET) offers a versatile solution.

- URET’s graph exploration approach identifies malicious input alterations efficiently.

- Users can customize exploration methods, transformations, and rules with a simple configuration.

- IBM Research demonstrates URET’s effectiveness across diverse data types.

- URET’s metrics assess AI model resilience against evasion attacks.

Main AI News:

In the fast-moving field of artificial intelligence, a pressing concern has surfaced: the susceptibility of AI models to adversarial evasion attacks. These attacks make subtle alterations to input data that cause a model to produce incorrect outputs. The threat extends well beyond computer vision models, so robust defenses are needed as AI becomes part of daily life.

Efforts to counter adversarial attacks have focused predominantly on images, which are convenient targets for manipulation because pixel values are already numeric and can be perturbed directly. While considerable headway has been made in that area, other data types, such as text and tabular data, present distinct challenges. These inputs must be converted into numerical feature vectors before a model can consume them, and any adversarial modification must preserve their semantic validity. Most available toolkits struggle with these requirements, leaving AI models in these domains vulnerable.
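To make the encoding problem concrete, here is a minimal Python sketch. It is not taken from URET; the record fields, the `encode` helper, and the validity check are all hypothetical. It shows why perturbing a feature vector directly can yield an input that no real record could produce, while editing the record first and then encoding it keeps the input valid.

```python
# Hypothetical tabular record with one numeric and one categorical field.
COLORS = ["red", "green", "blue"]

def encode(record):
    """Turn a record into a numeric feature vector: [age, one-hot color...]."""
    one_hot = [1.0 if record["color"] == c else 0.0 for c in COLORS]
    return [float(record["age"])] + one_hot

def is_semantically_valid(vector):
    """A vector is valid only if it could have come from a real record:
    a non-negative whole-number age and exactly one active color slot."""
    age, one_hot = vector[0], vector[1:]
    return age >= 0 and age == int(age) and sorted(one_hot) == [0.0, 0.0, 1.0]

record = {"age": 41, "color": "red"}
vector = encode(record)

# Image-style attack: add small noise to every feature.
noisy = [x + 0.3 for x in vector]

# Input-space attack: change the record itself, then re-encode it.
edited = encode({**record, "color": "blue"})

print(is_semantically_valid(noisy))   # the noisy vector breaks the one-hot encoding
print(is_semantically_valid(edited))  # the edited record still encodes cleanly
```

The noisy vector has a fractional age and three partially active color slots, so it corresponds to no possible record, whereas the edited record remains a legitimate input.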

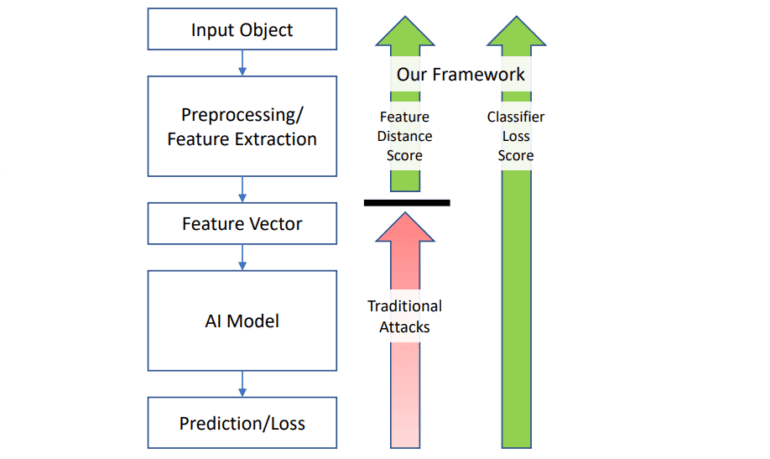

Enter URET, a toolkit that treats the search for evasion attacks as a graph exploration problem. In this framework, each node represents an input state and each edge represents an input transformation. URET efficiently identifies sequences of transformations that lead to model misclassification. The toolkit, hosted on GitHub, lets users configure exploration methods, transformation types, semantic rules, and objectives to suit their specific requirements.
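The graph framing can be illustrated with a short Python sketch. This is not URET’s API; the toy model, the two transformations, the semantic rule, and the `explore` function are assumptions made up for illustration. It runs a breadth-first search in which nodes are input states and edges are transformations, stopping when the model’s prediction changes.

```python
from collections import deque

def toy_model(record):
    """Hypothetical classifier: rejects when debt-to-income exceeds 0.5."""
    ratio = record["debt"] / max(record["income"], 1)
    return "reject" if ratio > 0.5 else "approve"

# Hypothetical transformations (the edges of the graph).
def raise_income(r):
    return {**r, "income": r["income"] + 5000}

def lower_debt(r):
    return {**r, "debt": r["debt"] - 2000}

TRANSFORMS = [("income+5000", raise_income), ("debt-2000", lower_debt)]

def is_valid(record, original):
    """Hypothetical semantic rule: debt stays non-negative and income
    may not exceed 150% of its original value."""
    return record["debt"] >= 0 and record["income"] <= original["income"] * 1.5

def explore(original, model, transforms, is_valid, max_depth=4):
    """Breadth-first search for the shortest transformation sequence
    that changes the model's prediction. Returns (path, final_state)."""
    start_label = model(original)
    queue = deque([(original, [])])
    seen = {tuple(sorted(original.items()))}
    while queue:
        state, path = queue.popleft()
        if model(state) != start_label:
            return path, state  # objective reached: prediction flipped
        if len(path) >= max_depth:
            continue
        for name, fn in transforms:
            nxt = fn(state)
            key = tuple(sorted(nxt.items()))
            if key in seen or not is_valid(nxt, original):
                continue  # skip repeated or rule-violating states
            seen.add(key)
            queue.append((nxt, path + [name]))
    return None, None  # no adversarial input found within max_depth

original = {"income": 30000, "debt": 18000}
path, adversarial = explore(original, toy_model, TRANSFORMS, is_valid)
print(path, adversarial)
```

Breadth-first search is only one possible exploration strategy; it is used here because it returns the shortest sequence of edits, which is easy to reason about in a toy setting.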

In a recent publication by IBM Research, the URET team demonstrated the toolkit by generating adversarial examples for tabular, text, and file inputs, all supported by URET’s transformation definitions. URET’s real strength, however, lies in its versatility. Because machine learning implementations vary widely, the toolkit lets advanced users define their own transformations, semantic rules, and exploration objectives.

URET’s evaluation relies on metrics that measure how effectively it generates adversarial examples across data types. These metrics show how well URET finds and exploits AI model vulnerabilities, and they also provide a standardized benchmark for evaluating model resilience against evasion attacks.
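The article does not say which metrics URET reports. As an illustrative assumption, one measure commonly used for evasion attacks is the success rate: the fraction of inputs for which the attack produced a modified input that changes the model’s prediction. The Python sketch below computes it for a hypothetical threshold model; the function and data are invented for illustration.

```python
def evasion_success_rate(model, originals, adversarials):
    """Fraction of inputs whose adversarial version changes the prediction.
    An entry of None in `adversarials` means the attack found nothing."""
    if not originals:
        return 0.0
    evaded = 0
    for x, x_adv in zip(originals, adversarials):
        if x_adv is not None and model(x_adv) != model(x):
            evaded += 1
    return evaded / len(originals)

def threshold_model(x):
    """Hypothetical classifier: label 1 when the value is at least 5."""
    return 1 if x >= 5 else 0

originals = [1, 2, 3, 4]
adversarials = [6, None, 2, 7]  # two flips, one failure, one no-op

rate = evasion_success_rate(threshold_model, originals, adversarials)
print(rate)
```

A lower rate under the same attack budget suggests a more resilient model, which is what makes a figure like this usable as a benchmark across models.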

Conclusion:

URET’s arrival is a notable development for the AI market. As AI integration expands across industries, vulnerability to adversarial attacks becomes a pressing concern. URET’s adaptability to various data types and its open-source customization options give teams a practical way to evaluate model robustness against evasion attacks. Such evaluation can help strengthen AI model security and build trust in AI applications, making the toolkit a valuable asset for businesses seeking robust AI solutions in an evolving digital landscape.