TL;DR:

- Stanford introduces PointOdyssey, a large synthetic dataset for long-term fine-grained tracking.

- The dataset fills the gap between fine-grained short-range tracking data and coarse-grained long-range tracking data.

- PointOdyssey achieves pixel-perfect annotation through realistic simulations.

- It incorporates motions, scene layouts, and camera trajectories mined from real-world data.

- The dataset includes diverse assets like humanoids, animals, textures, objects, sceneries, and environment maps.

- Domain randomization and dynamic effects such as fog and smoke add visual diversity.

- The researchers extend an existing tracking algorithm, PIPs, to use long-range temporal context.

- Experiments show higher tracking accuracy on the PointOdyssey test set and on real-world benchmarks.

- PointOdyssey is a game-changer for computer vision, fostering innovation and precision.

Main AI News:

In computer vision, large-scale annotated datasets have long been the bedrock for building accurate models, and they drive progress across a wide range of tasks. In a new study, researchers at Stanford University set out to provide that kind of data for fine-grained long-range tracking, an ambitious endeavor indeed.

Fine-grained long-range tracking, as its name suggests, takes a pixel’s location in any frame of a video and seeks to follow the corresponding world surface point for as long as possible. While datasets for fine-grained short-range tracking and for various forms of coarse-grained long-range tracking have proliferated over the years, the intersection between these two realms has been sparsely explored.
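To make the task definition concrete, here is a minimal sketch of what a tracker’s input and output might look like. The names `Query`, `Track`, `constant_position_baseline`, and `mean_visible_error` are hypothetical and chosen only for illustration; they are not part of PointOdyssey or of any tracking library, and the error measure is a simple stand-in rather than the benchmark’s official metric.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Query:
    """A point to track: a pixel location in one chosen frame."""
    frame: int
    x: float
    y: float


@dataclass
class Track:
    """One (x, y) estimate per frame, plus a per-frame visibility flag."""
    positions: List[Tuple[float, float]]
    visible: List[bool]  # False where the point is occluded or out of view


def constant_position_baseline(query: Query, num_frames: int) -> Track:
    """Trivial baseline (an assumption for illustration): the point never moves."""
    return Track(
        positions=[(query.x, query.y)] * num_frames,
        visible=[True] * num_frames,
    )


def mean_visible_error(pred: Track, truth: Track) -> float:
    """Mean Euclidean pixel error over frames where the true point is visible."""
    errors = [
        math.dist(p, t)
        for p, t, v in zip(pred.positions, truth.positions, truth.visible)
        if v
    ]
    if not errors:
        raise ValueError("ground truth has no visible frames")
    return sum(errors) / len(errors)
```

A real tracker would replace the baseline with a model that reads the video frames; the point of the sketch is only that the query can sit in any frame and the output spans the whole video.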

In recent work, fine-grained trackers have been evaluated on real-world videos with sparse human-provided annotations and trained on synthetic data that often consists of random objects moving in random directions against random backdrops. While it is intriguing that these models transfer to real videos at all, it’s crucial to recognize the limitations. Such training data prevents trackers from learning long-range temporal context and scene-level semantic awareness.

Long-range point tracking should not merely be an extension of optical flow where realism can be sacrificed without consequence. The movement of pixels in a video, though seemingly random, reflects an array of modellable elements. These encompass camera shakes, object-level shifts and deformations, and intricate multi-object relationships, including both social and physical interactions. The key to progress lies in acknowledging the magnitude of these challenges – both in terms of data and methodology.

In response, the Stanford University researchers present PointOdyssey, a large synthetic dataset designed for training and evaluating long-term fine-grained tracking. The collection aims to capture the complexity, diversity, and realism of real-world videos while achieving pixel-perfect annotation through simulation.

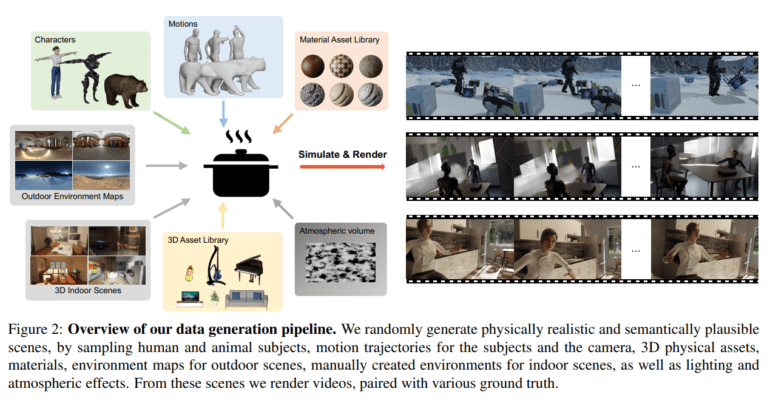

What sets PointOdyssey apart is its foundation: motions, scene layouts, and camera trajectories are mined from real-world videos and motion captures, as opposed to being randomly generated or hand-designed. Domain randomization adds diversity on top of this, varying environment maps, lighting conditions, human and animal bodies, camera paths, and material properties. Thanks to advances in the availability of high-quality content and in rendering technology, the dataset also achieves a higher level of photorealism than earlier synthetic datasets.

The motion profiles within PointOdyssey are derived from large human and animal motion capture datasets. In outdoor settings, these motion profiles are combined with 3D objects scattered randomly on the ground plane, which respond physically when the actors interact with them. For indoor scenarios, motion captures recorded in indoor settings are used, and the original environments are recreated by hand in the simulator, preserving the scene-aware character of the original data. To provide complex multi-view data, real camera trajectories are imported and additional cameras are attached to the heads of the synthetic characters. In stark contrast to previous datasets with largely random motion patterns, PointOdyssey adopts a capture-driven approach.

This dataset is intended to spur the development of tracking techniques that go beyond conventional bottom-up cues and also exploit scene-level cues, which provide strong priors on how points move. PointOdyssey offers a rich array of simulated assets, including 42 humanoid forms with artist-crafted textures, 7 animals, over 1,000 object/background textures, 1,000+ objects, 20 original 3D sceneries, and 50 environment maps, ensuring aesthetic diversity. Scene lighting is randomized to create a spectrum of dark and bright settings, and dynamic fog and smoke effects add a form of partial occlusion absent from datasets like FlyingThings and Kubric.
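As a rough illustration of what domain randomization over such an asset pool can look like, the sketch below samples one scene configuration. The pool sizes come from the counts quoted above; everything else (the `SceneConfig` fields, the `sample_scene_config` function, the numeric ranges for lighting and fog, and the per-scene character limits) is a hypothetical assumption, not the authors’ actual pipeline.

```python
import random
from dataclasses import dataclass
from typing import List

# Pool sizes quoted in the article; asset IDs are just indices into each pool.
NUM_HUMANOIDS = 42
NUM_ANIMALS = 7
NUM_SCENES = 20
NUM_ENV_MAPS = 50


@dataclass
class SceneConfig:
    scene_id: int
    env_map_id: int
    humanoid_ids: List[int]
    animal_ids: List[int]
    light_intensity: float  # relative scale; the range is an assumption
    fog_density: float      # 0.0 means no fog; the range is an assumption


def sample_scene_config(
    rng: random.Random, max_humanoids: int = 3, max_animals: int = 2
) -> SceneConfig:
    """Draw one randomized scene description from the asset pools."""
    use_fog = rng.random() < 0.5  # assumed: fog or smoke in about half the scenes
    return SceneConfig(
        scene_id=rng.randrange(NUM_SCENES),
        env_map_id=rng.randrange(NUM_ENV_MAPS),
        # sample() draws without replacement, so no character appears twice
        humanoid_ids=rng.sample(range(NUM_HUMANOIDS), rng.randint(1, max_humanoids)),
        animal_ids=rng.sample(range(NUM_ANIMALS), rng.randint(0, max_animals)),
        light_intensity=rng.uniform(0.1, 2.0),  # dark to bright
        fog_density=rng.uniform(0.05, 0.5) if use_fog else 0.0,
    )
```

Passing a seeded `random.Random` keeps each sampled scene reproducible, which is convenient when a rendered video needs to be regenerated later.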

One of the most intriguing challenges posed by PointOdyssey is making use of long-range temporal context. For instance, the state-of-the-art tracking algorithm Persistent Independent Particles (PIPs) was originally designed with an 8-frame temporal window. The researchers modify it to accommodate arbitrarily long temporal contexts, greatly widening its temporal scope, and add a template-update mechanism. Experimental results show that this modified tracker achieves higher tracking accuracy than all other methods compared, both on the PointOdyssey test set and on real-world benchmarks.
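To see why a fixed 8-frame window is limiting, consider how such a tracker has to be chained across a longer video: each window can only see a handful of frames, and the estimate is handed from one window to the next. The sketch below only counts those windows. The helper `window_starts` and the one-frame overlap between consecutive windows are assumptions made for illustration; this is not the authors’ code, and it does not implement their long-context model or the template-update mechanism.

```python
from typing import List


def window_starts(num_frames: int, window: int = 8) -> List[int]:
    """Start indices of chained windows covering frames 0 .. num_frames - 1.

    Each window begins on the last frame of the previous one, so the
    previous window's final estimate can seed the next window.
    """
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    if window < 2:
        raise ValueError("window must span at least 2 frames")
    starts = [0]
    # Add windows until the latest one reaches the final frame.
    while starts[-1] + window < num_frames:
        starts.append(starts[-1] + window - 1)
    return starts


def num_handoffs(num_frames: int, window: int = 8) -> int:
    """Number of times the track is passed from one window to the next."""
    return len(window_starts(num_frames, window)) - 1
```

Under these assumptions, every handoff is a place where an error or a long occlusion can break the track, which is one motivation for a model that sees a much longer temporal context at once.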

Conclusion:

PointOdyssey’s release marks an important step for computer vision. Its realistic synthetic data, built for long-range tracking, promises to drive advances in tracking techniques and could open new possibilities for fields that rely on computer vision, such as autonomous vehicles, surveillance, and robotics. The dataset sets the stage for improved accuracy and performance in fine-grained long-term point tracking.