TL;DR:

- The Boolformer model, a collaboration between Apple and EPFL, applies Transformers to symbolic regression of Boolean formulas.

- It infers compact Boolean formulas solely from input-output samples.

- Boolformer excels in generalization to complex functions beyond its training data.

- It leverages fundamental logical gates (AND, OR, NOT) for symbolic formula prediction.

- Researchers demonstrate its robustness in handling noisy and missing data.

- It performs competitively with traditional ML methods on binary classification tasks.

- It models gene regulatory networks with much faster inference than existing approaches.

- Its outputs are explicit formulas, so the learned function can be inspected directly.

- Boolformer is competitive with traditional models while providing clear justifications for its predictions.

Main AI News:

In artificial intelligence, the impact of deep neural networks, particularly those built on the Transformer architecture, is hard to overstate. These networks have significantly accelerated scientific breakthroughs in domains as diverse as computer vision and language modeling. One challenge still looms large, however: tackling intricate logical problems.

Unlike vision and language tasks, logical problems have a combinatorial complexity that makes representative data hard to collect. The deep learning community has therefore turned its attention to reasoning, including explicit logical tasks such as arithmetic, algebra, algorithmic CLRS tasks, and LEGO. Implicit reasoning has also been explored in various modalities, including Pointer Value Retrieval and CLEVR for vision models, as well as LogiQA and GSM8K for language models.

But what about tasks that hinge on Boolean modeling? In domains as critical as biology and medicine, these tasks demand intricate reasoning capabilities. Standard Transformer architectures have often faltered on such challenges, prompting a search for more efficient alternatives that exploit the inherent Boolean nature of these tasks.

Enter the Boolformer model, a joint effort between Apple and EPFL. It takes a new approach to symbolic logic: inferring concise Boolean formulas solely from input-output samples. What sets Boolformer apart, according to the researchers, is that it generalizes consistently to functions and data more complex than those seen during training.

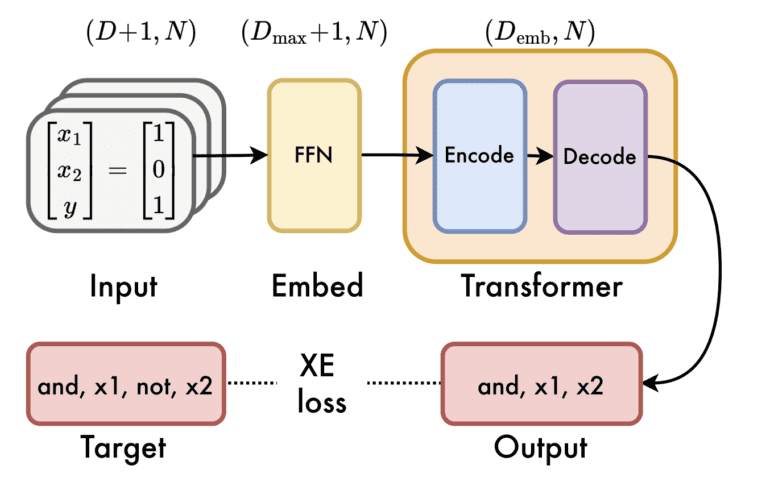

The crux of Boolformer’s approach is to produce Boolean formulas that express a Boolean function using the fundamental logical gates (AND, OR, and NOT). The task is cast as a sequence prediction problem: synthetic functions serve as training examples, their truth tables are the input, and the formula is the sequence to predict. Because the training data is generated synthetically, the researchers control the data generation process, and because the output is an explicit formula, the approach offers both generalizability and a high level of interpretability.
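To make the sequence-prediction framing concrete, the sketch below builds the truth table of a small Boolean function and flattens a formula tree into a token sequence. The nested-tuple encoding, the prefix (operator-first) token order, and the helper names `evaluate`, `truth_table`, and `to_prefix_tokens` are illustrative assumptions, not taken from the Boolformer codebase.

```python
from itertools import product

# A formula is a nested tuple: ("and", a, b), ("or", a, b), ("not", a),
# or a variable name such as "x0". This encoding is a hypothetical choice.

def evaluate(formula, assignment):
    """Evaluate a formula tree on a dict mapping variable names to 0/1."""
    if isinstance(formula, str):
        return assignment[formula]
    op, *args = formula
    values = [evaluate(arg, assignment) for arg in args]
    if op == "and":
        return values[0] & values[1]
    if op == "or":
        return values[0] | values[1]
    if op == "not":
        return 1 - values[0]
    raise ValueError(f"unknown gate: {op}")

def truth_table(formula, variables):
    """Return every (inputs, output) pair for the given variables."""
    rows = []
    for bits in product([0, 1], repeat=len(variables)):
        assignment = dict(zip(variables, bits))
        rows.append((bits, evaluate(formula, assignment)))
    return rows

def to_prefix_tokens(formula):
    """Flatten a formula tree into prefix-order tokens (operator first)."""
    if isinstance(formula, str):
        return [formula]
    op, *args = formula
    tokens = [op]
    for arg in args:
        tokens.extend(to_prefix_tokens(arg))
    return tokens

# Example target: x0 AND (x1 OR NOT x2)
formula = ("and", "x0", ("or", "x1", ("not", "x2")))
variables = ["x0", "x1", "x2"]
table = truth_table(formula, variables)      # input side: 8 rows
target_tokens = to_prefix_tokens(formula)    # output side: token sequence
```

In this toy setup, `table` plays the role of the input samples and `target_tokens` is the kind of sequence a model would be trained to emit.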

In empirical tests, the researchers from Apple and EPFL demonstrate Boolformer’s effectiveness across a range of logical challenges, spanning both theoretical and practical domains. They also outline directions for further refinement and broader application.

Key Contributions:

- Compact Formula Prediction: Boolformer showcases its prowess by predicting concise formulas when presented with the entire truth table of an unseen function. This is achieved through training on synthetic datasets tailored for symbolic regression of Boolean formulas.

- Robustness to Noise and Missing Data: Boolformer handles noisy data and missing bits within truth tables, finding approximate formulas when the observations are incomplete or corrupted.

- Binary Classification: The researchers evaluate Boolformer on binary classification tasks drawn from the PMLB database, reporting competitive performance against traditional machine learning methods such as Random Forests while preserving interpretability.

- Modeling Gene Regulatory Networks (GRNs): Boolformer extends to biology by modeling gene regulatory networks. On a recently released benchmark, it is competitive with state-of-the-art approaches while offering much faster inference.
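The noisy and incomplete setting from the list above can be sketched as follows: sample a subset of truth-table rows, flip some outputs, then score a candidate formula by how many observations it reproduces. The target function, sample count, flip probability, and helper names are illustrative assumptions, not the paper's experimental setup.

```python
import random
from itertools import product

def target(x0, x1, x2):
    """Hypothetical ground-truth function: x0 AND (x1 OR NOT x2)."""
    return x0 & (x1 | (1 - x2))

def observe(num_samples, flip_prob, seed=0):
    """Draw a partial truth table and flip each output with flip_prob."""
    rng = random.Random(seed)
    all_inputs = list(product([0, 1], repeat=3))
    sampled = rng.sample(all_inputs, num_samples)   # unsampled rows are "missing"
    observations = []
    for bits in sampled:
        y = target(*bits)
        if rng.random() < flip_prob:                # label noise
            y = 1 - y
        observations.append((bits, y))
    return observations

def accuracy(candidate, observations):
    """Fraction of observations that a candidate function reproduces."""
    hits = sum(candidate(*bits) == y for bits, y in observations)
    return hits / len(observations)

# 6 of the 8 rows are observed; each output is flipped with probability 0.1.
noisy = observe(num_samples=6, flip_prob=0.1)
# Noise-free observations of the same kind, for comparison.
clean = observe(num_samples=6, flip_prob=0.0)

exact_fit = accuracy(target, clean)                    # true formula, clean data
simpler_fit = accuracy(lambda x0, x1, x2: x0, clean)   # shorter approximation
```

A shorter candidate such as `x0` alone can still agree with most observations, which illustrates the trade-off between compactness and accuracy that approximate formulas involve.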

For those eager to delve deeper into Boolformer’s capabilities, the code and models are available at https://github.com/sdascoli/boolformer. The boolformer pip package streamlines installation and usage, making it an accessible tool for AI enthusiasts.

Conclusion:

The Boolformer model, born from the collaboration between Apple and EPFL, represents a notable step forward for symbolic logic. Its ability to derive concise Boolean formulas from input-output samples, coupled with generalization beyond its training data and transparent outputs, positions it as a useful tool. For the market, this signals a potential shift towards more interpretable and efficient AI systems, particularly in fields that require intricate logical reasoning and classification. Boolformer’s versatility opens doors to safer and more insightful AI deployment.