TL;DR:

- AI watermarks, crucial for combating misinformation, face significant vulnerabilities.

- University of Maryland research shows current watermarking methods are easily evaded.

- UMD team pioneers a near-unremovable watermark, promising enhanced security.

- Joint research from UC Santa Barbara and Carnegie Mellon identifies two types of attacks on watermarks: destructive and constructive.

- The race between watermark creators and hackers intensifies, demanding robust standards.

- With the 2024 U.S. presidential election approaching, the urgency to innovate in watermarking technology is evident.

- AI-generated deep fake ads pose a significant threat to political discourse.

- The Biden administration acknowledges the risks associated with AI misuse, especially in spreading misinformation.

Main AI News:

In the realm of artificial intelligence, watermarks serve as a critical safeguard against the proliferation of misinformation and deep fakes, a rising concern in today’s digital age. These digital fingerprints, though invisible to the naked eye, can be the bulwark that shields us from the onslaught of AI-generated content designed to deceive. Major tech players like Google, OpenAI, Meta, and Amazon have all rallied behind the development of watermarking technology to counteract this threat.

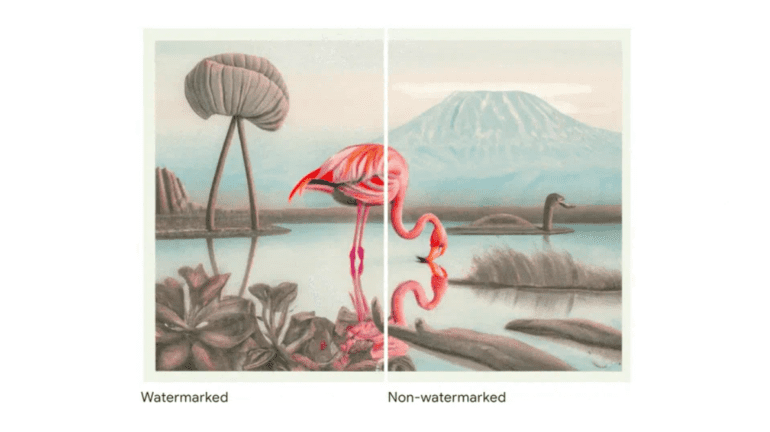

However, recent research from the University of Maryland (UMD) reveals a troubling vulnerability in current AI watermarking methods. Soheil Feizi, a UMD professor, said there are currently no reliable watermarking applications, because existing methods are easy to evade or manipulate. In their tests, the researchers bypassed existing watermarking techniques and even added fake watermarks to images that were not AI-generated.

Nevertheless, there is a glimmer of hope. The UMD team has also developed an exceptionally resilient watermark that is nearly impossible to remove without compromising the underlying content, that is, the intellectual property it protects. Such a watermark could help detect product theft, adding an extra layer of security for businesses and content creators.

Parallel research conducted jointly by the University of California, Santa Barbara, and Carnegie Mellon University examines how watermarks hold up against simulated attacks. The researchers sort these attacks into two categories: destructive and constructive. Destructive attacks treat the watermark as part of the image itself and alter the whole image, using techniques such as brightness and contrast adjustments, JPEG compression, or rotation. While these methods can remove watermarks, they inevitably degrade image quality.

Constructive attacks, by contrast, take a more delicate approach, using techniques such as the long-established Gaussian blur to erase the watermark while preserving image quality. These findings highlight the ongoing arms race between watermark creators and hackers and the need for new, more resilient standards.
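The idea behind a constructive attack can be illustrated with a toy model. The following is a minimal sketch, assuming a simple additive spread-spectrum watermark (a keyed pseudorandom +/-1 pattern added to pixel values) and a correlation detector; it is not the scheme used by UMD, UCSB, CMU, or any of the companies mentioned, and every name and parameter in it (`ALPHA`, `SEED`, `THRESHOLD`, the synthetic image) is hypothetical. A 5x5 binomial approximation of a Gaussian blur plays the role of the constructive attack: it treats the high-frequency pattern as noise and smooths it away, while the smooth image content survives largely intact.

```python
import math
import random

# Toy parameters; all are hypothetical choices for illustration only.
SIZE = 64        # image is SIZE x SIZE grayscale pixels
ALPHA = 4.0      # watermark strength added to each pixel
SEED = 1234      # stands in for the secret watermarking key
THRESHOLD = 1.5  # detector decision threshold

SMOOTH_3 = [0.25, 0.5, 0.25]                        # detector's local-mean filter
GAUSS_5 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]  # binomial approximation of a Gaussian


def make_cover(size=SIZE):
    """Smooth synthetic grayscale image (low-frequency content around mid-gray)."""
    return [[128 + 50 * math.sin(2 * math.pi * x / size) * math.cos(2 * math.pi * y / size)
             for x in range(size)] for y in range(size)]


def make_pattern(seed=SEED, size=SIZE):
    """Keyed pseudorandom +/-1 pattern; only the key holder can regenerate it."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(size)] for _ in range(size)]


def convolve(img, kernel_1d):
    """Separable 2-D convolution of a square image, clamping at the edges."""
    n = len(img)
    r = len(kernel_1d) // 2

    def clamp(i):
        return min(max(i, 0), n - 1)

    rows = [[sum(w * img[y][clamp(x + d - r)] for d, w in enumerate(kernel_1d))
             for x in range(n)] for y in range(n)]
    return [[sum(w * rows[clamp(y + d - r)][x] for d, w in enumerate(kernel_1d))
             for x in range(n)] for y in range(n)]


def embed(cover, pattern, alpha=ALPHA):
    """Additive watermark: each pixel is nudged up or down by alpha."""
    return [[c + alpha * p for c, p in zip(crow, prow)]
            for crow, prow in zip(cover, pattern)]


def detect(img, pattern):
    """Correlate the image's high-frequency residual with the key pattern.

    Smooth image content mostly cancels in the residual, so the score is
    close to 0 for unmarked images and roughly 0.75 * ALPHA for marked ones.
    """
    n = len(img)
    smooth = convolve(img, SMOOTH_3)
    total = sum((img[y][x] - smooth[y][x]) * pattern[y][x]
                for y in range(n) for x in range(n))
    return total / (n * n)


def gaussian_blur(img):
    """'Constructive' attack: low-pass filtering treats the pattern as noise."""
    return convolve(img, GAUSS_5)


def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; higher means the images are closer."""
    n = len(a)
    mse = sum((a[y][x] - b[y][x]) ** 2 for y in range(n) for x in range(n)) / (n * n)
    return 10 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    cover = make_cover()
    pattern = make_pattern()
    marked = embed(cover, pattern)
    blurred = gaussian_blur(marked)
    for name, img in (("cover", cover), ("marked", marked), ("blurred", blurred)):
        score = detect(img, pattern)
        print(f"{name:8s} score={score:5.2f} detected={score > THRESHOLD}")
    print(f"PSNR vs cover: marked {psnr(cover, marked):.1f} dB, "
          f"blurred {psnr(cover, blurred):.1f} dB")
```

In this toy setting the blurred copy should fall well below the detection threshold, and because the blur strips out most of the added pattern, it ends up closer to the clean cover (higher PSNR) than the watermarked image itself. Production watermarks are designed to be far more robust than this, so the example only illustrates the idea behind the attack, not the strength of any real scheme.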

As the 2024 United States presidential election approaches, the need for innovation in watermarking AI-generated content has never been more urgent. The potential for AI-driven deep fake ads to sway political opinion underscores the gravity of the situation, and the Biden administration has acknowledged the threat posed by the misuse of AI, particularly in spreading misinformation. In this fast-moving landscape, digital watermarking remains a promising tool for preserving the integrity of digital content amid ever-evolving technological challenges.

Conclusion:

The vulnerabilities in current AI watermarking systems underscore the need for rapid advances in digital watermarking technology. As the threat of deep fakes and misinformation looms large, businesses and content creators must prioritize resilient watermarking methods to protect their intellectual property and preserve the integrity of digital content. This evolving landscape also gives innovators an opportunity to set new standards and build solutions for the growing market in AI watermarking technology.