TL;DR:

- Lamini embraces AMD Instinct MI GPUs for LLMs in their new Superstation.

- Lamini conducted secret testing on over 100 AMD Instinct GPUs.

- AMD’s MI GPUs offer impressive computing power and compete with Nvidia.

- Greg Diamos, Lamini CTO, says ROCm has reached software parity with CUDA for LLMs.

- Availability becomes a key advantage for Lamini.

- AMD’s MI300 GPU holds promise, potentially enhancing Lamini’s offerings.

Main AI News:

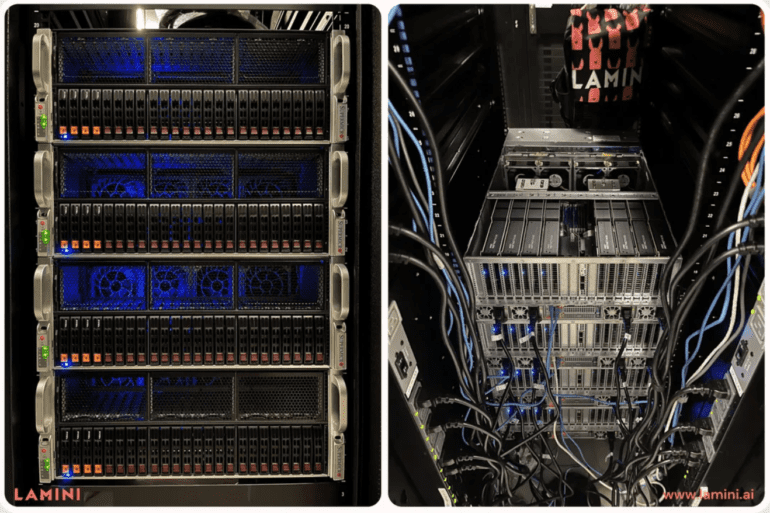

The large language model (LLM) landscape is shifting. Lamini, an LLM provider backed by an MLPerf co-founder, is placing its bets on AMD’s mature Instinct MI GPUs. In response to escalating demand for enterprise-grade LLMs, Lamini has unveiled its LLM Superstation, powered by AMD Instinct MI GPUs.

What sets Lamini apart is its year-long clandestine operation, where it has been rigorously testing LLMs on a fleet of over 100 AMD Instinct GPUs, even preceding the launch of ChatGPT. Today, with the advent of the LLM Superstation, Lamini invites a broader spectrum of potential customers to leverage its cutting-edge infrastructure for their model deployments.

These platforms run on AMD Instinct MI210 and MI250 accelerators, a notable departure from the industry’s dominant choice, the Nvidia H100. Lamini asserts that businesses opting for AMD GPUs can stop worrying about 52-week lead times.

When weighing AMD against Nvidia GPUs for LLMs, it’s worth noting that AMD’s hardware is a serious contender. For instance, the Instinct MI250 offers a peak of 362 teraflops of half-precision (FP16) compute for AI workloads, and the MI250X raises that to 383 teraflops. Nvidia’s A100, by comparison, peaks at 312 teraflops. These are vendor-quoted peak figures, not measured LLM throughput.
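To put those numbers side by side, here is a minimal Python sketch that expresses each quoted peak as a multiple of the A100’s. The figures are the ones cited above; the dictionary and the `relative_peak` helper are illustrative names, and the ratios describe peak spec-sheet throughput only, not real-world training or inference speed.

```python
# Peak throughput figures as quoted in the article, in teraflops.
PEAK_TFLOPS = {
    "AMD Instinct MI250": 362,
    "AMD Instinct MI250X": 383,
    "Nvidia A100": 312,
}


def relative_peak(name: str, baseline: str = "Nvidia A100") -> float:
    """Return a device's quoted peak as a multiple of the baseline's peak."""
    return PEAK_TFLOPS[name] / PEAK_TFLOPS[baseline]


if __name__ == "__main__":
    for name in PEAK_TFLOPS:
        print(f"{name}: {relative_peak(name):.2f}x the A100's quoted peak")
```

On these figures, the MI250 and MI250X come out roughly 16% and 23% ahead of the A100 at peak.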

Lamini’s CTO, Greg Diamos, a co-founder of MLPerf, underscores the significance of their choice, stating, “Using Lamini software, ROCm has achieved software parity with CUDA for LLMs. We chose the Instinct MI250 as the foundation for Lamini because it runs the biggest models that our customers demand and integrates finetuning optimizations. We use the large HBM capacity (128GB) on MI250 to run bigger models with lower software complexity than clusters of A100s.”
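Diamos’s point about HBM capacity can be illustrated with some back-of-the-envelope arithmetic. The sketch below is a rough estimate under stated assumptions, not Lamini’s sizing method: it counts model weights only (no activations, KV cache, or optimizer state), assumes 2 bytes per parameter (FP16), treats each accelerator’s memory as a single pool, and takes 1 GB as 10^9 bytes. The 128GB figure comes from the quote; the 80GB A100 capacity and the 50-billion-parameter model are assumptions chosen for illustration.

```python
import math


def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate weight footprint in GB (assumes FP16 by default, weights only)."""
    return params_billion * bytes_per_param


def devices_needed(params_billion: float, capacity_gb: float) -> int:
    """Minimum number of accelerators whose combined memory holds the weights."""
    return math.ceil(weights_gb(params_billion) / capacity_gb)


if __name__ == "__main__":
    model_b = 50  # hypothetical 50-billion-parameter model
    print(f"Weights: {weights_gb(model_b):.0f} GB")
    print(f"MI250 (128 GB, from the quote): {devices_needed(model_b, 128)}")
    print(f"A100 (80 GB, assumed variant): {devices_needed(model_b, 80)}")
```

Under these assumptions, the hypothetical model fits on one MI250 but has to be split across two A100s, which is the kind of added software complexity the quote refers to.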

On paper, AMD’s GPUs can compete with Nvidia’s offerings. The true differentiator, however, is availability: platforms like Lamini’s LLM Superstation let enterprises take on demanding workloads immediately. A lingering question surrounds AMD’s forthcoming GPU, the MI300. Businesses can currently sample the MI300A, while the MI300X is slated for sampling in the coming months.

According to Tom’s Hardware, the MI300X carries 192GB of memory, more than double the H100’s 80GB. Its exact compute performance remains undisclosed, but it is expected to be on par with the H100. Lamini’s prospects could improve further should it choose to build and offer infrastructure powered by these next-gen GPUs, a potentially significant development in the LLM arena.

Conclusion:

Lamini’s strategic shift towards AMD Instinct MI GPUs, backed by extensive testing, positions it as a strong contender in the LLM market. Its focus on eliminating lead-time concerns, together with the potential adoption of the MI300X, could disrupt the LLM landscape and give businesses immediate access to high-performance AI infrastructure.