TL;DR:

- Stanford researchers introduce a technique for decomposing object shading from a single image.

- Earlier methods rely on parametric or measured representations, which makes shading hard to edit.

- The new approach uses shade tree representations to break object surface shading down into an interpretable, editable form.

- It bridges the gap between physical shading and digital manipulation.

- The method combines auto-regressive inference with optimization algorithms.

- Unlike conventional decomposition and inverse rendering techniques, shade trees model shading outcomes rather than reflectance properties.

- The related field of inverse procedural graphics holds promise in domains including urban design, textures, forestry, and scene representation.

- It outperforms baseline frameworks at inferring shade tree representations.

- It was tested on synthetic and real-captured datasets with both realistic and toon-style shading nodes.

- On the real-captured “DRM” dataset, it inferred shade tree structures and node parameters, facilitating efficient object shading edits.

Main AI News:

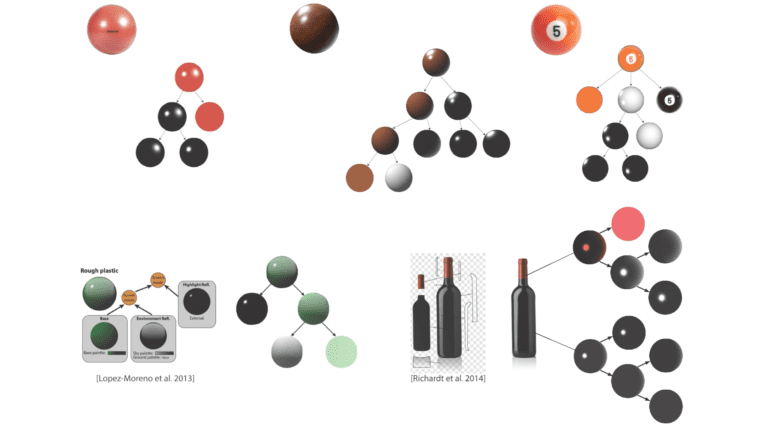

Recovering detailed object shading from a single image is a long-standing problem in computer vision. Earlier methods relied on parametric or measured representations, which are hard to interpret and hard to edit. Researchers at Stanford University propose using shade tree representations instead. The approach combines basic shading nodes with compositing methods to decompose object surface shading into an interpretable, editable form. Users can then edit object shading directly, which narrows the gap between the physical shading process and digital manipulation. Because inferring a shade tree is difficult, the method takes a hybrid approach that combines auto-regressive inference with optimization algorithms.
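To make the idea of "shading nodes plus compositing" concrete, here is a minimal sketch of a shade tree as a data structure. The node types (`Leaf`, `Mix`, `Multiply`) and the single grayscale value per leaf are assumptions chosen for illustration; the actual node set and parameters used by the researchers are not described in this article.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    """A base shading node: here just one grayscale value in [0, 1] (illustrative)."""
    value: float


@dataclass
class Mix:
    """Compositing node: alpha * left + (1 - alpha) * right."""
    left: "Node"
    right: "Node"
    alpha: float


@dataclass
class Multiply:
    """Compositing node: product of the two sub-trees."""
    left: "Node"
    right: "Node"


Node = Union[Leaf, Mix, Multiply]


def evaluate(node: Node) -> float:
    """Recursively evaluate a shade tree to a single shading value."""
    if isinstance(node, Leaf):
        return node.value
    if isinstance(node, Mix):
        return node.alpha * evaluate(node.left) + (1 - node.alpha) * evaluate(node.right)
    if isinstance(node, Multiply):
        return evaluate(node.left) * evaluate(node.right)
    raise TypeError(f"unknown node type: {type(node).__name__}")


# A hypothetical tree: a highlight blended over (albedo * diffuse).
tree = Mix(left=Leaf(1.0), right=Multiply(Leaf(0.5), Leaf(0.8)), alpha=0.25)
shade = evaluate(tree)  # approximately 0.55
```

Editing then amounts to changing one node and re-evaluating, for example replacing `Leaf(0.5)` with a different albedo while leaving the highlight untouched.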

The shade tree representation, so far underexplored for this task, differs from conventional decomposition and inverse rendering techniques: it models shading outcomes rather than reflectance properties. The work also relates to inverse procedural graphics, a field concerned with inferring parameters or grammars for procedural models, which holds promise in domains including urban design, textures, forestry, and scene representation.

The researchers stress the role of shading in computer vision and graphics, since it strongly affects surface appearance. Their approach contrasts with traditional methods, which are often limited to Lambertian surfaces, and with inverse rendering techniques, which are more complex and less user-friendly. The shade tree model is interpretable, which makes it well suited to recovering object shading from a single image. The method has two stages: an auto-regressive model generates an initial tree structure and node parameter estimates, and an optimization step then refines the inferred shade tree. This addresses structural ambiguities while supporting non-deterministic inference.
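The two-stage idea (a rough initial estimate, then optimization) can be sketched as follows. Everything here is an assumption made for illustration: the fixed tree structure, the toy per-pixel maps, the hard-coded `initial_guess` standing in for a learned auto-regressive model, and the finite-difference gradient descent standing in for whatever optimizer the researchers use.

```python
from typing import List

# Hypothetical per-pixel leaf maps (not from the paper).
HIGHLIGHT = [0.9, 0.1, 0.0, 0.4]
DIFFUSE = [0.2, 0.8, 0.6, 0.5]


def render(params: List[float]) -> List[float]:
    """Fixed structure: mix(highlight, albedo * diffuse) with weight alpha."""
    alpha, albedo = params
    return [alpha * h + (1 - alpha) * albedo * d for h, d in zip(HIGHLIGHT, DIFFUSE)]


def loss(params: List[float], target: List[float]) -> float:
    """Mean squared error between the rendered and target shading."""
    rendered = render(params)
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)


def initial_guess() -> List[float]:
    """Stage 1 stand-in: a real system would predict this from the image."""
    return [0.5, 0.5]


def refine(params: List[float], target: List[float],
           steps: int = 200, lr: float = 0.5, eps: float = 1e-5) -> List[float]:
    """Stage 2: gradient descent with finite-difference gradients.

    A step is kept only if it lowers the loss; otherwise the step size is halved.
    """
    params = list(params)
    best = loss(params, target)
    for _ in range(steps):
        grad = []
        for i in range(len(params)):
            up, down = params.copy(), params.copy()
            up[i] += eps
            down[i] -= eps
            grad.append((loss(up, target) - loss(down, target)) / (2 * eps))
        candidate = [p - lr * g for p, g in zip(params, grad)]
        candidate_loss = loss(candidate, target)
        if candidate_loss < best:
            params, best = candidate, candidate_loss
        else:
            lr *= 0.5
    return params


# Target shading produced by "true" parameters the optimizer does not see.
TARGET = render([0.3, 0.8])
start = initial_guess()
refined = refine(start, TARGET)
```

Comparing `loss(start, TARGET)` with `loss(refined, TARGET)` shows how much the refinement stage improves on the initial estimate in this toy setting.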

Central to the method is a tree decomposition pipeline with three parts: a context-free grammar that represents shade trees, recursive amortized inference that generates the initial tree structure, and optimization-based fine-tuning that decomposes the remaining nodes. Multiple sampling strategies enable non-deterministic inference, which is key to handling structural ambiguities. The researchers report experiments across various image types that support the method's effectiveness.
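As a rough illustration of how a context-free grammar can describe shade trees and how sampling yields several candidate structures, consider the sketch below. The grammar, its symbols, and the uniform random choice of productions are assumptions for this example; in the described method, a learned model conditioned on the image would presumably choose the productions.

```python
import random
from typing import List, Tuple, Union

# Hypothetical grammar: a Tree is a leaf or a compositing op over two sub-trees.
GRAMMAR = {
    "Tree": [
        ["leaf"],
        ["mix", "Tree", "Tree"],
        ["multiply", "Tree", "Tree"],
    ],
}

Tree = Union[str, Tuple]


def sample_tree(rng: random.Random, max_depth: int) -> Tree:
    """Expand the 'Tree' symbol recursively, forcing a leaf at the depth limit."""
    if max_depth <= 1:
        production = ["leaf"]
    else:
        production = rng.choice(GRAMMAR["Tree"])
    head, *children = production
    if not children:
        return head
    return (head, *[sample_tree(rng, max_depth - 1) for _ in children])


def depth(tree: Tree) -> int:
    """Number of levels in a sampled tree (a lone leaf has depth 1)."""
    if isinstance(tree, str):
        return 1
    return 1 + max(depth(child) for child in tree[1:])


def sample_candidates(n: int, seed: int = 0, max_depth: int = 3) -> List[Tree]:
    """Draw n candidate structures; different draws reflect structural ambiguity."""
    rng = random.Random(seed)
    return [sample_tree(rng, max_depth) for _ in range(n)]


candidates = sample_candidates(5, seed=0)
```

Each candidate structure could then be passed to a parameter optimization step, keeping whichever tree reproduces the input shading best.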

The method was tested on synthetic and real-captured datasets covering both realistic and toon-style shading nodes. In comparisons against baseline frameworks, it was better at inferring shade tree representations. The synthetic datasets, which range from photo-realistic to cartoon-style shading nodes, demonstrate its adaptability. Real-world applicability was assessed on the “DRM” dataset, where the method successfully inferred shade tree structures and node parameters, facilitating efficient and intuitive object shading edits.

Conclusion:

Stanford's shading decomposition technique could simplify shading editing and make it more accessible and efficient. Its reported advantage over baseline frameworks, together with its potential applications across domains, makes it a notable development in computer vision and graphics. As businesses rely more on visual content, the approach could change how digital images are edited and open new avenues for creativity and efficiency.