TL;DR:

- Video action recognition automates the categorization of human actions in fields such as surveillance, robotics, and sports analysis.

- Deep learning, especially CNNs, has revolutionized this domain.

- Spatial and temporal attention modules enhance video feature extraction.

- The FSAN model combines residual CNNs with attention mechanisms.

- FSAN reportedly improves action recognition accuracy on the UCF101 and HMDB51 benchmarks.

- Market implications: Enhanced action recognition for surveillance, robotics, and sports analysis.

Main AI News:

Action recognition in videos has been transformed by deep learning, particularly convolutional neural networks (CNNs). These advances have opened up applications in surveillance, robotics, sports analytics, and beyond. The fundamental objective is to enable machines to comprehend and interpret human actions, supporting better decision-making and automation.

Video action recognition has advanced in step with deep learning, as CNNs proved able to extract spatiotemporal features directly from video frames. This replaced labor-intensive, computationally expensive handcrafted feature engineering, exemplified by early approaches like Improved Dense Trajectories (IDT). Modern methods instead rely on two-stream models and 3D CNNs to capture spatial and temporal information. However, efficiently distilling the relevant information from a video, particularly identifying discriminative frames and spatial regions, remains an open challenge. In addition, the computational and memory costs of certain techniques, such as optical flow computation, limit scalability and broad applicability.

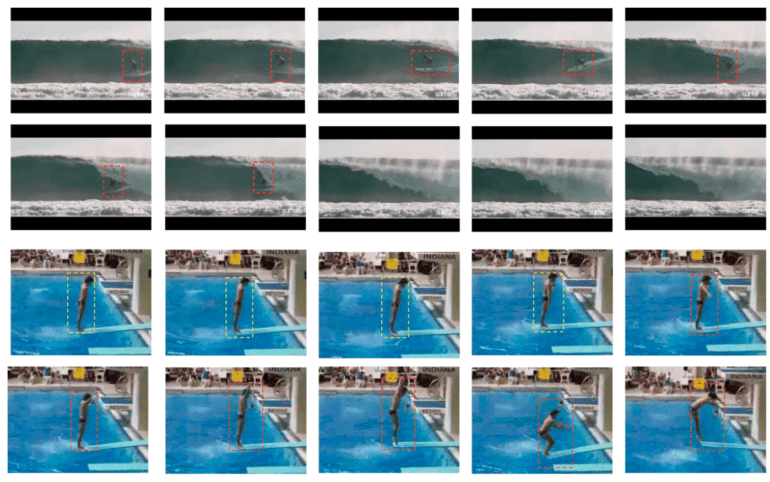

To address these challenges, a research team from China has introduced a new approach to action recognition. It combines improved residual CNNs with attention mechanisms in the Frame and Spatial Attention Network (FSAN). FSAN pairs a spurious-3D convolutional network with a two-level attention module, allowing the model to exploit informative features across the channel, time, and space dimensions and to better capture the spatiotemporal features in video data. A video frame attention module is also included to reduce the negative effect of similarity between different video frames. With attention modules applied at several levels, the approach produces stronger representations for action recognition.
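To make the idea of frame attention concrete, here is a minimal sketch in plain Python. It is not the FSAN implementation: the dot-product scoring, the `query` vector, and the function names are assumptions chosen for illustration. It only shows the general pattern of scoring each frame, normalizing the scores with a softmax, and pooling frame features so that distinctive frames count for more than near-duplicates.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def frame_attention_pool(frame_features, query):
    """Pool per-frame features with attention weights.

    frame_features: list of T feature vectors, each a list of D floats.
    query: hypothetical learned vector of D floats (an assumption of this sketch).
    Returns (weights, pooled), where pooled is the attention-weighted average.
    """
    # Relevance score per frame: dot product between the frame and the query.
    scores = [sum(f * q for f, q in zip(frame, query)) for frame in frame_features]
    weights = softmax(scores)
    pooled = [
        sum(w * frame[d] for w, frame in zip(weights, frame_features))
        for d in range(len(query))
    ]
    return weights, pooled


# Three toy frames: the first two are near-duplicates, the third is distinctive.
frames = [[1.0, 0.0], [1.0, 0.1], [0.0, 2.0]]
weights, pooled = frame_attention_pool(frames, query=[0.0, 1.0])
```

In this toy input the third frame receives most of the weight, so the pooled feature is dominated by it rather than by the two similar frames. In a trained network the scoring would be learned rather than fixed by hand.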

Combining residual connections with attention mechanisms gives FSAN several advantages. Residual connections, implemented through the spurious-ResNet architecture, improve gradient flow during training and help the network capture intricate spatiotemporal features. Attention mechanisms in both the temporal and spatial domains, meanwhile, focus the model on the most important frames and spatial regions. This selective attention improves the model’s discriminative power and reduces interference from noise. The design is also intended to be adaptable and scalable, so it can be tuned to specific datasets and requirements. Together, these elements make action recognition models more robust and more accurate.
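The interplay between a residual connection and a spatial attention mask can be sketched as `y = x + mask * F(x)`. The code below is a generic illustration under stated assumptions, not the spurious-ResNet block itself: the mask scoring (a sigmoid of each activation minus the map's mean) and the `transform` stand-in for convolutional layers are both hypothetical.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def spatial_attention_mask(feature_map):
    """Map every spatial position to a weight in (0, 1).

    Hypothetical scoring for this sketch: sigmoid of the activation
    minus the mean activation of the whole map.
    """
    flat = [v for row in feature_map for v in row]
    mean = sum(flat) / len(flat)
    return [[sigmoid(v - mean) for v in row] for row in feature_map]


def residual_attention_block(x, transform):
    """Compute y = x + mask * transform(x), element by element.

    x: 2D feature map (list of rows of floats).
    transform: stand-in for the block's convolutional layers; it must
    return a map with the same shape as its input.
    """
    fx = transform(x)
    mask = spatial_attention_mask(fx)
    return [
        [xv + m * fv for xv, m, fv in zip(x_row, m_row, f_row)]
        for x_row, m_row, f_row in zip(x, mask, fx)
    ]


def double(feature_map):
    """Toy transform used only for this example."""
    return [[2.0 * v for v in row] for row in feature_map]


x = [[0.0, 1.0], [2.0, 5.0]]
y = residual_attention_block(x, double)
```

Because the input is added back unchanged, the block passes `x` through exactly when the transform outputs zeros. That identity path is what helps gradients flow in deep residual networks, while the mask decides how much of the transformed signal each spatial position contributes.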

To validate FSAN, the researchers ran experiments on two standard benchmark datasets, UCF101 and HMDB51. The model was implemented on a system running Ubuntu 20.04 with an Intel Xeon E5-2620v4 CPU and four GeForce RTX 2080 Ti GPUs. Training ran for 100 epochs using stochastic gradient descent (SGD) with tuned hyperparameters. The data pipeline included fast video decoding, frame extraction, and data augmentation such as random cropping and flipping. Compared against state-of-the-art methods on both datasets, FSAN showed substantial improvements in action recognition accuracy. A series of ablation studies further highlighted the contribution of the attention modules to recognition performance and to the extraction of precise spatiotemporal features.
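The random cropping and flipping mentioned above can be sketched as follows. The article does not give crop sizes or flip probabilities, so `crop_h`, `crop_w`, and `flip_prob` are assumptions, as is the choice to apply the same crop and flip to every frame of a clip (a common way to keep motion consistent across frames).

```python
import random


def augment_clip(clip, crop_h, crop_w, rng, flip_prob=0.5):
    """Randomly crop and horizontally flip a clip.

    clip: list of frames; each frame is a list of rows of pixel values.
    The crop offset and flip decision are sampled once per clip, so every
    frame receives the same spatial transformation.
    """
    height, width = len(clip[0]), len(clip[0][0])
    top = rng.randint(0, height - crop_h)    # inclusive bounds
    left = rng.randint(0, width - crop_w)
    flip = rng.random() < flip_prob

    augmented = []
    for frame in clip:
        cropped = [row[left:left + crop_w] for row in frame[top:top + crop_h]]
        if flip:
            cropped = [row[::-1] for row in cropped]
        augmented.append(cropped)
    return augmented


# Toy clip: 2 frames of 4x4 "pixels"; frame t adds an offset of 100 * t.
clip = [
    [[t * 100 + r * 4 + c for c in range(4)] for r in range(4)]
    for t in range(2)
]
augmented = augment_clip(clip, crop_h=3, crop_w=3, rng=random.Random(0))
```

Passing a seeded `random.Random` instance keeps the example reproducible. In the toy clip, corresponding pixels in the two output frames still differ by exactly 100, which confirms that both frames were cropped and flipped identically.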

Conclusion:

The integration of spatial and temporal attention modules, as exemplified by the FSAN model, marks a notable step forward in action recognition. For the market, it points toward more accurate and efficient solutions for surveillance, robotics, sports analysis, and beyond, improving decision-making and automation capabilities.