TL;DR:

- Rapid evolution of AI, exemplified by ChatGPT’s capabilities.

- Optical fiber connectivity’s pivotal role in enabling AI advancement.

- OpenAI’s scaling of AI clusters and NVIDIA’s GPU support.

- GPU cluster architecture and InfiniBand’s significance.

- Transition from AOC to MPO-MPO cabling for streamlined setup.

- Challenges and solutions for scaling GPU clusters.

Main AI News:

In the realm of Artificial Intelligence (AI), the transformation has been nothing short of remarkable. Just a year ago, interactions with AI models like ChatGPT were akin to conversing with a knowledgeable yet somewhat monotone acquaintance. Fast forward to today, and the AI landscape has evolved at breakneck speed, changing how we perceive AI’s capabilities.

The pivotal shift in the AI landscape can be attributed to the advancement of optical fiber connectivity. The journey commenced when ChatGPT made its debut, capturing the imagination of AI enthusiasts and professionals alike. Its initial charm lay in its uncanny ability to generate responses that felt astoundingly human. Yet, it couldn’t escape its own limitations; it was quick to remind users that it was, indeed, an AI creation.

However, the tide has turned dramatically in just six months. ChatGPT now responds with a rapidity that borders on the astonishing. What happened during this time frame? How did ChatGPT’s creators adapt to meet the soaring demand from more than 100 million users?

The answer lies in the convergence of innovation and infrastructure. OpenAI, the driving force behind ChatGPT, has steadily expanded its AI cluster’s inference capacity. NVIDIA, the leading supplier of AI chips, played a pivotal role by supplying approximately 20,000 graphics processing units (GPUs) to support ChatGPT’s development. But that’s not all. Speculation is rife that the upcoming AI model from OpenAI might require as many as 10 million GPUs.

The cornerstone of this generative AI revolution is the GPU cluster architecture. Connecting GPUs at this scale with high-speed, low-latency networks is a daunting challenge. Yet it can be met with solutions drawn from high-performance computing (HPC) networks.

NVIDIA’s design guidelines pave the way. Their approach builds large GPU clusters from 256-GPU pods that serve as scalable units. Each pod comprises 8 compute racks and 2 networking racks, connected through InfiniBand, a high-speed, low-latency networking technology, and powered by NVIDIA’s Quantum-2 switches.
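The pod arithmetic above can be sketched in a few lines of Python. This is a rough sizing aid only: the pod size and rack counts come from the article, while the function names are hypothetical and the calculation ignores any extra networking racks that may be needed to interconnect the pods themselves.

```python
import math

# Figures taken from the article.
GPUS_PER_POD = 256
COMPUTE_RACKS_PER_POD = 8
NETWORK_RACKS_PER_POD = 2

# Derived: 256 GPUs spread over 8 compute racks.
GPUS_PER_COMPUTE_RACK = GPUS_PER_POD // COMPUTE_RACKS_PER_POD


def pods_needed(total_gpus: int) -> int:
    """Number of 256-GPU pods required, rounding up to whole pods."""
    return math.ceil(total_gpus / GPUS_PER_POD)


def rack_count(total_gpus: int) -> dict:
    """Pod-level rack totals (hypothetical helper, pod racks only)."""
    pods = pods_needed(total_gpus)
    return {
        "pods": pods,
        "compute_racks": pods * COMPUTE_RACKS_PER_POD,
        "network_racks": pods * NETWORK_RACKS_PER_POD,
    }


if __name__ == "__main__":
    for gpus in (20_000, 16_384):
        print(gpus, rack_count(gpus))
```

Under these assumptions, a 16,384-GPU cluster works out to 64 pods, and each compute rack holds 32 GPUs.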

Future InfiniBand switches are poised to be even faster, with extreme data rate (XDR) speeds on the horizon. This evolution means 64x800G ports per switch, using single-mode fiber for optimal performance.
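To put the port count in perspective, here is a minimal calculation of the aggregate capacity such a switch would offer. It uses only the 64x800G figure quoted above; the function name is hypothetical, and the result is a one-direction total, not a vendor specification.

```python
def aggregate_capacity_tbps(ports: int, gbps_per_port: int) -> float:
    """Total one-direction switch capacity in terabits per second."""
    return ports * gbps_per_port / 1000


if __name__ == "__main__":
    # 64 ports at 800 Gb/s each, as quoted in the article.
    print(aggregate_capacity_tbps(64, 800), "Tb/s")
```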

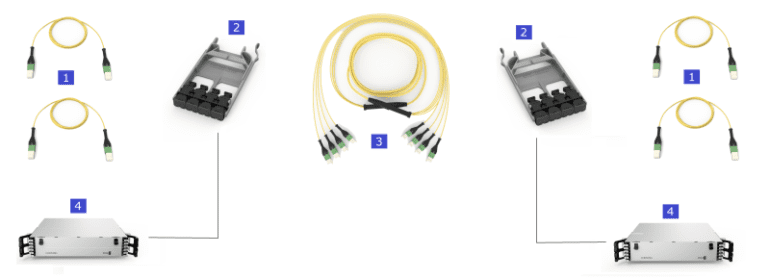

In the realm of cabling, the HPC world once favored point-to-point active optical cables (AOCs). However, the advent of 800G ports at next data rate (NDR) speeds, where each twin-port connector carries two 400G NDR links, has shifted the landscape towards MPO-MPO passive patch cords for point-to-point connections, offering a streamlined setup.

But the real challenge emerges when scaling up to clusters of 16,384 GPUs. The compute fabric in these clusters is rail-optimized, meaning each GPU in a server connects to a different leaf switch, so a single server’s links fan out across many switches. A structured cabling system with a two-patch-panel design, using high-fiber-count MPO trunks, emerges as a viable solution.

By consolidating multiple MPO patch cords into single Base-8 trunk cables, the arduous task of bundling numerous cords is eliminated. This not only streamlines operations but also offers spare connections for backup or additional applications.
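The consolidation described above can be illustrated with a small planning sketch. It assumes each patch cord is one Base-8 link (8 fibers) and uses a 144-fiber trunk purely as an example value; neither the trunk size nor the function name comes from the article.

```python
import math

FIBERS_PER_BASE8_LINK = 8  # one Base-8 MPO connection uses 8 fibers


def plan_trunks(links: int, trunk_fibers: int = 144) -> tuple[int, int]:
    """Return (trunks_needed, spare_links) for a given number of Base-8 links.

    trunk_fibers is an assumed example size; it must divide into Base-8 groups.
    """
    if links < 0:
        raise ValueError("links must be non-negative")
    if trunk_fibers <= 0 or trunk_fibers % FIBERS_PER_BASE8_LINK != 0:
        raise ValueError("trunk_fibers must be a positive multiple of 8")
    links_per_trunk = trunk_fibers // FIBERS_PER_BASE8_LINK
    trunks = math.ceil(links / links_per_trunk)
    spare = trunks * links_per_trunk - links
    return trunks, spare


if __name__ == "__main__":
    trunks, spare = plan_trunks(64)
    print(f"64 links -> {trunks} trunks, {spare} spare Base-8 connections")
```

With these example numbers, 64 individual patch cords collapse into 4 trunk cables, leaving 8 Base-8 connections free for backup or other uses.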

Conclusion:

The rapid evolution of AI, driven by optical fiber connectivity and robust infrastructure, has profound implications for the market. It signals growing demand for high-speed, low-latency networks and structured cabling systems to support the expansion of GPU clusters. That demand presents opportunities for companies in the optical connectivity industry.