TL;DR:

- SpaceEvo automates the creation of specialized search spaces for efficient INT8 inference on specific hardware platforms.

- Its lightweight design needs only 25 GPU hours to create a hardware-specific search space, making it practical and cost-effective.

- The specialized search space makes it easier to find efficient models with low INT8 latency.

- SpaceEvo outperforms existing alternatives in INT8 quantized accuracy-latency tradeoffs.

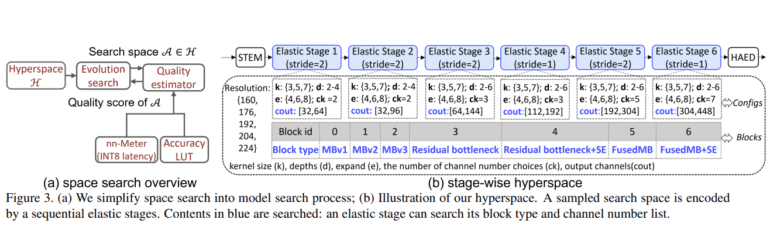

- It incorporates an evolutionary search algorithm, the Q-T score metric, redesigned search algorithms, and a block-wise search space quantization scheme.

- The two-stage NAS process ensures comparable quantized accuracy without individual fine-tuning or quantization.

Main AI News:

In the ever-evolving landscape of deep learning, designing efficient deep neural network (DNN) models that combine top-tier accuracy with minimal latency on diverse devices remains a formidable challenge. The go-to approach has been hardware-aware neural architecture search (NAS), which automates the design of models tailored to specific hardware, using predefined search spaces and search algorithms. What these methods have largely overlooked, however, is optimizing the search space itself, even though the entire process depends on it.

Enter “SpaceEvo,” a method unveiled by a team of Microsoft researchers. SpaceEvo automatically designs specialized search spaces tuned for efficient INT8 inference on specific hardware platforms. Its distinguishing feature is that it automates this design phase, producing hardware-specific, quantization-friendly NAS search spaces.

What distinguishes SpaceEvo from the rest is its lightweight design, which proves both pragmatic and cost-effective. Creating a hardware-specific search space takes only 25 GPU hours. Because the specialized search space is built from operators and configurations favored by the target hardware, it allows the exploration of more efficient models with low INT8 latency, and the resulting models consistently outperform existing alternatives.

The researchers conducted an in-depth study of the factors influencing INT8 quantized latency on two widely used devices. Their findings showed that operator types and configurations have a strong impact on INT8 latency. SpaceEvo builds on these insights to produce a diverse set of accurate, INT8 latency-friendly architectures within the search space. To do so, it combines an evolutionary search algorithm, the Q-T score as a metric, redesigned search algorithms, and a block-wise search space quantization scheme.
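To make the idea of evolving a search space more concrete, here is a minimal sketch of an evolutionary loop that picks one block type per network stage. Everything in it is an assumption made for illustration: the block names, their accuracy and latency numbers, the latency budget, and `toy_score`, which is a simple stand-in and not the Q-T score defined by the SpaceEvo authors.

```python
import random

# Hypothetical block types with made-up (accuracy proxy, INT8 latency in ms) values.
BLOCKS = {
    "block_a": (0.60, 1.0),
    "block_b": (0.75, 2.0),
    "block_c": (0.90, 4.0),
}
NUM_STAGES = 5             # assumed number of stages in the search space
LATENCY_BUDGET_MS = 12.0   # assumed latency budget


def toy_score(space):
    """Stand-in score: reward the accuracy proxy, penalize over-budget latency."""
    acc = sum(BLOCKS[b][0] for b in space)
    lat = sum(BLOCKS[b][1] for b in space)
    return acc - max(0.0, lat - LATENCY_BUDGET_MS)


def mutate(space, rng):
    """Replace the block type of one randomly chosen stage."""
    child = list(space)
    child[rng.randrange(len(child))] = rng.choice(list(BLOCKS))
    return tuple(child)


def evolve(generations=30, population_size=16, num_parents=4, seed=0):
    """Keep the top-scoring candidates each generation and mutate them."""
    rng = random.Random(seed)
    names = list(BLOCKS)
    population = [tuple(rng.choice(names) for _ in range(NUM_STAGES))
                  for _ in range(population_size)]
    for _ in range(generations):
        parents = sorted(population, key=toy_score, reverse=True)[:num_parents]
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(population_size - num_parents)]
        population = parents + children  # parents survive, so the best never gets worse
    return max(population, key=toy_score)


best = evolve()
print(best, toy_score(best))
```

In a real system the score would come from measured or predicted INT8 latency and quantized accuracy on the target device rather than from a lookup table.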

The two-stage NAS process ensures that candidate models attain comparable quantized accuracy without the need for individual fine-tuning or quantization. Extensive experiments on real-world edge devices and ImageNet show that SpaceEvo consistently outperforms manually designed search spaces. In doing so, SpaceEvo sets new benchmarks for the tradeoff between INT8 quantized accuracy and latency, a meaningful step for deep learning in real-world applications.
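In two-stage NAS generally, a shared supernet is trained once, and candidate sub-networks are then evaluated with inherited weights instead of being retrained. The sketch below illustrates only the second stage: choosing the most accurate candidate that fits a latency budget. The elastic dimensions and both scoring functions are hypothetical placeholders, not SpaceEvo's actual search space, predictor, or measurements.

```python
from itertools import product

# Hypothetical elastic dimensions of a supernet (stage 1 would train it once).
DEPTHS = (2, 3, 4)
WIDTHS = (32, 48, 64)


def inherited_accuracy(depth, width):
    """Placeholder for evaluating a subnet with weights inherited from the supernet."""
    return 0.60 + 0.03 * depth + 0.001 * width


def int8_latency_ms(depth, width):
    """Placeholder for an on-device measurement or a latency predictor."""
    return 0.5 * depth * (width / 16)


def best_subnet(latency_budget_ms):
    """Stage 2: pick the most accurate subnet that fits the latency budget."""
    feasible = [(d, w) for d, w in product(DEPTHS, WIDTHS)
                if int8_latency_ms(d, w) <= latency_budget_ms]
    if not feasible:
        return None  # no candidate meets the budget
    return max(feasible, key=lambda cfg: inherited_accuracy(*cfg))


print(best_subnet(4.0))
```

Because no candidate is retrained, the cost of stage 2 is dominated by evaluation, which is what makes searching under many different latency budgets affordable.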

Conclusion:

SpaceEvo addresses a pressing need for efficient deep neural network models across diverse hardware. By automatically optimizing search spaces at low cost, it opens the door to more efficient models and sets a new bar for INT8 quantized accuracy-latency tradeoffs. Businesses can harness SpaceEvo to improve model performance on their real-world devices, gaining a competitive edge in the dynamic landscape of deep learning applications.