TL;DR:

- Researchers at Stanford University and Microsoft Research introduce the “Self-Taught Optimizer” (STOP).

- Scaffolding programs, which structure repeated calls to a language model such as GPT-4, can produce better solutions than a single query.

- STOP applies recursive self-improvement: the language model rewrites the scaffolding program that calls it.

- Experiments on algorithmic tasks show the improver program getting better with each iteration; the language model itself is not changed.

- The study analyzes the self-improvement strategies the model proposes and the risks they carry.

- A significant step in the direction of Recursive Self-Improvement (RSI) systems.

- STOP offers promise for the future of AI-powered software development.

Main AI News:

Researchers at Stanford University and Microsoft Research have introduced the “Self-Taught Optimizer” (STOP), a method in which a language model improves the program that calls it. The reported experiments use OpenAI's GPT-4.

Scaffolding Programs: A Catalyst for Optimization

The research starts from the premise that almost any objective described in natural language can be optimized by querying a language model. Better results, however, often come from “scaffolding” programs: code written by human programmers, in a language such as Python, that structures multiple calls to the language model instead of relying on a single query.
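To make the idea concrete, here is a minimal sketch of a scaffold that asks a model for several candidates and keeps the one that scores best. It is an illustration only, not code from the study: `best_of_n_scaffold`, `toy_lm`, and `toy_utility` are hypothetical names, and the "model" is a deterministic stand-in so the example runs without any API access.

```python
from typing import Callable, List

# Hypothetical language-model interface: (prompt, n) -> n candidate strings.
LanguageModel = Callable[[str, int], List[str]]

def best_of_n_scaffold(task: str,
                       utility: Callable[[str], float],
                       lm: LanguageModel,
                       n: int = 4) -> str:
    """Ask the model for n candidate solutions and keep the highest-scoring one."""
    candidates = lm(f"Propose a solution to: {task}", n)
    return max(candidates, key=utility)

def toy_lm(prompt: str, n: int) -> List[str]:
    # Deterministic fake model (an assumption for illustration only).
    return [f"candidate-{i}" for i in range(n)]

def toy_utility(solution: str) -> float:
    # Arbitrary scoring rule: prefer higher-numbered candidates.
    return float(solution.rsplit("-", 1)[1])

best = best_of_n_scaffold("sort a list", toy_utility, toy_lm)
```

In a real scaffold, `lm` would wrap calls to an actual model and `utility` would measure something meaningful, such as the fraction of test cases a generated program passes.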

Optimization Beyond Boundaries

The researchers observe that the design of a scaffolding program is not tied to any specific optimization problem or language model. The same scaffold can in principle be reused across many tasks and models.

The STOP Methodology: A Journey of Self-Improvement

STOP starts from a seed “improver” scaffolding program, which queries a language model several times to improve a candidate solution to a given task and returns the best result. What sets STOP apart is its recursive step: the improver is then applied to its own code, so the language model repeatedly rewrites the improver program itself.
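The recursive step can be sketched as follows. This is a toy illustration under stated assumptions, not the study's implementation: all names (`SEED_IMPROVER`, `load_improver`, `toy_lm`, `meta_utility`, `self_improve`) are hypothetical, the "model" is a deterministic stand-in that only raises the number of candidates sampled, and the improver is scored on a single made-up task.

```python
import re
from typing import Callable, List

# Hypothetical language-model interface: (prompt, n) -> n candidate strings.
LanguageModel = Callable[[str, int], List[str]]

# Seed improver, kept as source text so that it can itself be improved.
SEED_IMPROVER = '''
def improve(utility, solution, lm):
    # Ask the model for variants and keep the best-scoring one.
    candidates = lm(solution, 2) + [solution]
    return max(candidates, key=utility)
'''

def load_improver(source: str) -> Callable:
    # exec is acceptable only because the toy model below emits known-safe code;
    # model-written code would need to run in a sandbox.
    namespace: dict = {}
    exec(source, namespace)
    return namespace["improve"]

def toy_lm(prompt: str, n: int) -> List[str]:
    """Deterministic fake model so the sketch runs without API access."""
    match = re.search(r"lm\(solution, (\d+)\)", prompt)
    if match:
        # Prompt is improver source: propose variants that sample more candidates.
        k = int(match.group(1))
        return [prompt.replace(match.group(0), f"lm(solution, {k + i})")
                for i in range(1, n + 1)]
    # Otherwise the prompt is a downstream solution: an integer written as text.
    return [str(int(prompt) + i) for i in range(1, n + 1)]

def downstream_utility(solution: str) -> float:
    # Toy task: get as close as possible to the number 10.
    return -abs(int(solution) - 10)

def meta_utility(improver_source: str) -> float:
    # Score an improver by how well it does on the downstream task.
    improver = load_improver(improver_source)
    return downstream_utility(improver(downstream_utility, "0", toy_lm))

def self_improve(source: str, lm: LanguageModel, rounds: int) -> str:
    for _ in range(rounds):
        improver = load_improver(source)
        # The current improver is applied to its own source code.
        source = improver(meta_utility, source, lm)
    return source

improved_source = self_improve(SEED_IMPROVER, toy_lm, rounds=2)
```

In this toy run, each round yields an improver that samples more candidates than its predecessor and scores higher on the downstream task than the seed. The language model (`toy_lm`) is never modified; only the scaffold around it changes.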

Empirical Validation: Proving the Power of STOP

To gauge the effectiveness of STOP, the researchers evaluated it on a set of algorithmic tasks. They report that the improver program becomes better at improving other programs with each iteration, even though the underlying language model stays the same. In this setup the language model effectively acts as a meta-optimizer.

Analyzing Self-Improvement Strategies

The research also examines the self-improvement strategies the model proposes, which include beam search, genetic algorithms, and simulated annealing, and how well the improved improvers transfer to downstream tasks. It further investigates the risks associated with such self-improvement techniques.

A Half-Century of Progress

Recursive Self-Improvement (RSI) systems have interested researchers for more than half a century. Unlike earlier proposals that aimed at all-encompassing self-improving AI systems, STOP focuses narrowly on the model's ability to iteratively improve the scaffold that invokes it. The study also gives a mathematical formulation of the RSI-code-generation problem.

Unveiling the Potential of RSI-Code Generation

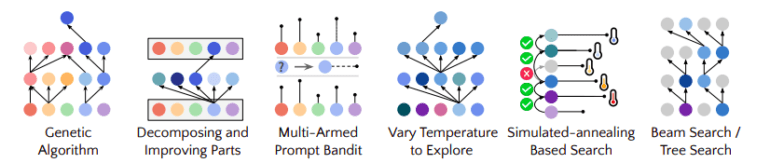

STOP illustrates what RSI-code generation can do. Performance improves on several downstream tasks, even though the GPT-4 model used was trained on data only up to 2021. Figure 1 shows some of the scaffolds STOP generated.

Ethical Considerations: Navigating the Frontier

Self-improving technology also raises ethical and safety questions. The research considers the concerns around developing systems like STOP, including how often the generated code bypasses a sandbox, and stresses the importance of guarding against unintended consequences.

Conclusion:

STOP shows that a language model such as GPT-4 can write code that improves the scaffolding around it. This points toward more efficient software development, with language models playing a growing role in how AI-driven tools are built. Businesses that adopt such techniques as they mature may gain a competitive advantage.