TL;DR:

- AlignProp is a novel method for fine-tuning text-to-image diffusion models.

- It aligns models with reward functions through end-to-end backpropagation.

- This approach mitigates memory requirements using gradient checkpointing.

- AlignProp outperforms alternatives in various optimization objectives.

- It offers simplicity and efficiency in optimizing diffusion models.

- AlignProp has implications for the advancement of generative AI.

Main AI News:

In the realm of generative modeling in continuous domains, probabilistic diffusion models have solidified their position as the industry standard. At the forefront of text-to-image diffusion models stands DALLE, a pioneer in the field. These models have garnered widespread recognition for their remarkable capacity to generate images through training on extensive web-scale datasets. This article delves into the burgeoning landscape of text-to-image diffusion models and their newfound prominence in the realm of image generation. It is imperative to note, however, that despite their unparalleled potential, these models face a formidable challenge when it comes to fine-tuning specific objectives.

The unsupervised or weakly supervised nature of these models poses a significant hurdle in terms of controlling their behavior in downstream tasks such as optimizing human-perceived image quality, image-text alignment, and ethical image generation. Prior research has made valiant attempts to address this challenge by employing reinforcement learning techniques. Unfortunately, this approach is marred by high variance in gradient estimators, making it less than ideal for achieving the desired outcomes.

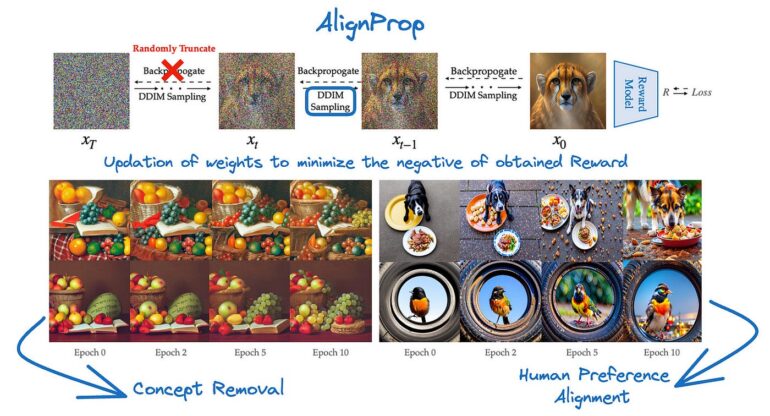

Enter “AlignProp,” a groundbreaking method introduced in a recent paper by CMU and Google DeepMind researchers. AlignProp aims to bridge the gap by aligning diffusion models with downstream reward functions through an ingenious process of end-to-end backpropagation during the denoising phase.

One of AlignProp’s crowning achievements lies in its ability to mitigate the formidable memory requirements traditionally associated with backpropagation through modern text-to-image models. It accomplishes this feat by expertly fine-tuning low-rank adapter weight modules and implementing gradient checkpointing.

The paper meticulously assesses AlignProp’s performance across a spectrum of objectives, including image-text semantic alignment, aesthetics, image compressibility, and the controllability of object numbers in generated images, both individually and in combination. The results are nothing short of astounding, with AlignProp consistently outperforming its competitors by delivering higher rewards in fewer training iterations. Notably, AlignProp distinguishes itself through its conceptual simplicity, rendering it a pragmatic choice for optimizing diffusion models based on different reward functions.

AlignProp’s transformative approach harnesses gradients derived from reward functions to fine-tune diffusion models, ushering in improvements in both sampling efficiency and computational effectiveness. The experiments conducted underpin AlignProp’s effectiveness in optimizing an expansive array of reward functions, even for tasks that are inherently challenging to define through prompts alone. As we peer into the future, it’s tantalizing to consider potential research directions, including the extension of these principles to diffusion-based language models, with the ultimate aim of enhancing their alignment with human feedback.

Conclusion:

AlignProp’s innovative approach to optimizing text-to-image diffusion models promises to redefine the landscape of generative AI. By aligning models with reward functions and streamlining the optimization process, it enhances efficiency and effectiveness. This development signifies a significant step forward in the market, offering researchers and businesses the means to achieve superior results in image generation, alignment, and controllability, ultimately driving advancements in the broader field of AI and image-based applications.