TL;DR:

- Nvidia is bringing support for its TensorRT-LLM SDK to Windows and to models like Stable Diffusion.

- TensorRT-LLM optimizes large language models (LLMs) so they run inference faster.

- Nvidia aims to dominate generative AI by offering both GPUs and software.

- TensorRT-LLM will be publicly available, broadening access.

- Nvidia already holds a strong position in GPUs, with demand for its H100 running high.

- Nvidia has announced a next-generation chip, the GH200, due next year.

- Companies such as Microsoft and AMD are developing their own chips.

- Companies such as SambaNova offer services that make it easier to run AI models.

- Nvidia appears to be preparing for a future in which customers need fewer of its GPUs.

Main AI News:

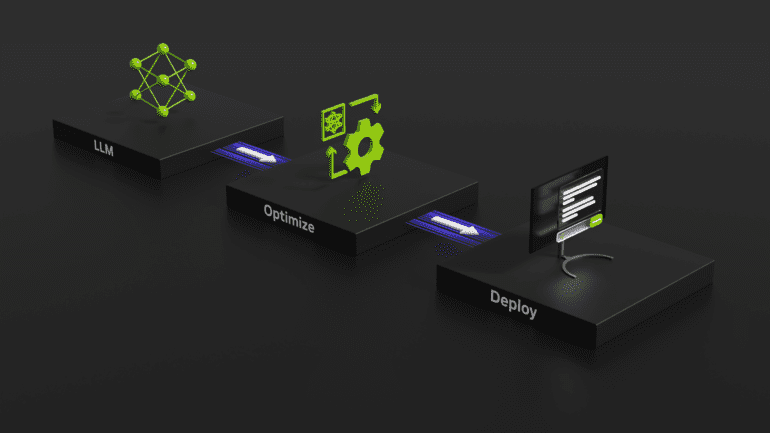

Nvidia plans to bring support for its TensorRT-LLM SDK to Windows and to models such as Stable Diffusion, a move aimed at reinforcing its position in generative AI. The goal is to make large language models (LLMs) and related tools run faster. TensorRT speeds up inference, the stage at which a trained model produces output, and Nvidia is applying it to workloads such as generating Stable Diffusion images. With this software, Nvidia hopes to claim a larger share of the generative AI market.

TensorRT-LLM optimizes LLMs such as Meta’s Llama 2, along with other AI models such as Stability AI’s Stable Diffusion, so that they run faster on Nvidia’s H100 GPUs. According to Nvidia, running LLMs through TensorRT-LLM produces an acceleration that “significantly improves the experience for more sophisticated LLM use — like writing and coding assistants.”

Nvidia wants to do more than supply the GPUs used to train and run LLMs. It also wants to provide software that makes those models run more efficiently, which gives customers less reason to look for cheaper alternatives. The company says TensorRT-LLM will be “available publicly to anyone who wants to use or integrate it,” with the SDK downloadable from its website.

Nvidia already dominates the market for the powerful chips needed to train LLMs such as GPT-4. Demand for its H100 GPUs is so high that they are estimated to sell for as much as $40,000 each. The company has also announced its next-generation chip, the GH200, slated for release next year. Its second-quarter revenue reached $13.5 billion.

The generative AI market is changing quickly, however, and alternative ways to run LLMs without expensive GPUs are emerging. Microsoft and AMD have announced plans to develop their own chips, reducing their reliance on Nvidia’s hardware. Other companies, such as SambaNova, have launched services that make it easier to run models.

For now, Nvidia’s lead in generative AI hardware is clear. Still, the company appears to be preparing for a future in which customers no longer need to buy its GPUs in large quantities, and its push into software reflects that shift.

Conclusion:

Bringing the TensorRT-LLM SDK to more platforms and models is a significant step in Nvidia’s effort to consolidate its position in generative AI. By offering both high-performance GPUs and the software that makes models run efficiently, Nvidia aims to stay central to AI content generation even as that efficiency could reduce how much costly hardware users need. The rise of competing chips and services, however, points to a fast-moving market in which adaptability will be key to keeping its lead.