TL;DR:

- Microsoft Azure AI introduces Idea2Img, a groundbreaking multimodal AI framework for image development.

- Idea2Img aims to streamline the process of creating images from abstract concepts, reducing manual effort.

- It leverages large multimodal models (LMMs) like GPT-4V to enable iterative self-refinement.

- GPT-4V performs prompt generation, draft image selection, and feedback reflection for improved results.

- Idea2Img’s built-in memory module enhances the overall iterative process.

- It excels in scenarios with intertwined picture-text sequences, visual design, and complex usage descriptions.

- User preference studies demonstrate Idea2Img’s impressive efficacy, with a notable +26.9% improvement.

Main AI News:

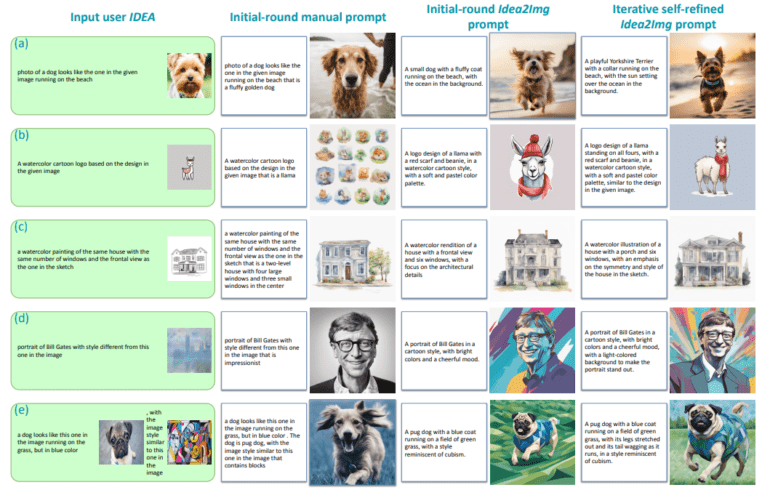

In the realm of “image design and generation,” the ability to transform abstract concepts into visual representations has always been a challenge. Whether it’s deciphering vague references like “the dog looks like the one in the image” or translating intricate instructions like “create a logo for the Idea2Img system,” the process often involves trial and error. Humans resort to text-to-image (T2I) models to bridge this gap, but the journey to pinpointing the perfect representation is often a laborious one.

Enter the era of large multimodal models (LMMs), where researchers are exploring the potential to endow systems with a remarkable self-refinement capability. This could liberate individuals from the arduous task of translating abstract ideas into visual assets. Much like how humans constantly improve their problem-solving methods, these LMMs exhibit promise in tackling natural language processing tasks like acronym generation, sentiment retrieval, and text-based environment exploration. The key to this progress lies in the concept of self-refinement, as demonstrated by large language model (LLM) agent systems.

However, as we transition from text-only tasks to the dynamic realm of multimodal content, new challenges emerge. Multimodal content, characterized by the intricate interplay of images and text, demands a fresh approach to self-refinement.

Researchers from Microsoft Azure have taken a pioneering step in exploring the potential of iterative self-refinement within the domain of “image design and generation.” Their brainchild, Idea2Img, is a self-refinancing multimodal framework engineered to autonomously craft and design images. Idea2Img synergizes the capabilities of an LMM, known as GPT-4V(vision), with a T2I model to unravel the model’s real-world applications and identify valuable T2I cues. The intricate dance between the T2I model’s output (draft images) and the subsequent generation of T2I prompts is orchestrated seamlessly by the LMM.

The multimodal iterative self-refinement process encompasses several pivotal steps. GPT-4V takes center stage by performing the following actions:

- Prompt Generation: GPT-4V generates a set of N text prompts, tailored to the multimodal user IDEA. These prompts evolve in response to previous feedback and refinement history.

- Draft Image Selection: GPT-4V meticulously evaluates N draft images, all representing the same IDEA, and selects the most promising candidate.

- Feedback Reflection: GPT-4V critically examines the divergence between the draft image and the original IDEA. It then provides valuable feedback on what went awry and why it veered off course, and offers insights into how the T2I prompts can be refined for better results.

In addition to these fundamental processes, Idea2Img boasts a built-in memory module that meticulously tracks your exploration history for each type of prompt (picture, text, and feedback). This cyclical interplay between these GPT-4V-based processes is the secret sauce behind Idea2Img’s prowess.

As a refined tool for image creation and generation, Idea2Img offers a refreshing approach. By embracing design directives rather than exhaustive image descriptions, accommodating multimodal IDEA inputs, and delivering images with enhanced semantic and visual appeal, Idea2Img emerges as a standout among T2I models.

The research team has meticulously scrutinized various scenarios of image creation and design. Idea2Img excels in processing IDEA inputs that feature seamlessly intertwined picture-text sequences, encompass visual design elements, and encapsulate intended usage descriptions. It can even extract intricate visual information from input images. Building upon these advanced features and versatile use cases, the team has curated a challenging evaluation IDEA set comprising 104 samples. These complex questions challenge human understanding, often leading to initial errors.

To evaluate Idea2Img’s effectiveness, the team conducted user preference studies, employing Idea2Img in conjunction with various T2I models. The results speak volumes, with user preference scores witnessing substantial improvements across a spectrum of image-generating models. In particular, Idea2Img shines with a remarkable +26.9% increase when paired with SDXL, showcasing its unmatched efficacy in the field.

Conclusion:

Microsoft’s Idea2Img represents a significant leap in the field of image development and design. By harnessing the power of LMMs and enabling iterative self-refinement, it promises to revolutionize the way we transform abstract ideas into visual assets. Idea2Img’s adaptability to complex multimodal scenarios and its substantial user preference improvements signify a game-changing innovation with far-reaching implications for the market. Businesses and industries that rely on image creation and design can expect increased efficiency and higher-quality output, ultimately driving greater competitiveness and customer satisfaction.