TL;DR:

- Researchers from Stanford, NVIDIA, and UT Austin introduce Cross-Episodic Curriculum (CEC), a training algorithm for Transformer agents.

- CEC aims to get more out of limited data, improving learning efficiency and adaptability.

- The algorithm places experiences from multiple episodes, ordered as a curriculum, into a Transformer’s context.

- Two scenarios evaluate it: multi-task reinforcement learning and imitation learning from mixed-quality demonstrations.

- CEC’s policies are reported to generalize well in both settings.

- CEC has two stages: curricular data preparation and cross-episodic attention model training.

- In the method’s diagrams, colored triangles denote causal Transformer models.

Main AI News:

In artificial intelligence, the amount of available data often determines how well a model performs. That raises a practical question: how can Transformer agents learn efficiently and adapt well when data is limited? Researchers from Stanford, NVIDIA, and UT Austin propose one answer, Cross-Episodic Curriculum (CEC), an algorithm aimed at sequential decision-making problems.

The Transformation of Decision-Making

Foundation models, many of them built on the Transformer architecture, have advanced a range of domains, including planning, control, and pre-trained visual representations. However, they typically need large amounts of data, which is a serious obstacle in fields such as robotics, where data is scarce. The central question is whether sparse and heterogeneous data sources can still be used to make learning more effective.

Introducing Cross-Episodic Curriculum (CEC)

In response, the researchers propose the Cross-Episodic Curriculum (CEC) algorithm, which exploits the diverse distribution of experiences that arises when those experiences are organized into a curriculum. Its goal is to improve how well Transformer agents learn and generalize. At its core, CEC feeds experiences from multiple episodes into a Transformer model, ordered so that together they form a curriculum: a step-by-step progression that records the agent’s learning and skill improvement across episodes. CEC then relies on the Transformer’s strong pattern-recognition ability to build a cross-episodic attention mechanism that attends across those episodes.
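To make "feeding experiences from multiple episodes into a Transformer" concrete, here is a minimal Python sketch, assuming each episode is a list of (observation, action) steps. The `Step` type, its fields, and `build_cross_episodic_context` are hypothetical names for illustration, not the authors’ code; a real implementation would embed these steps as tokens before passing them to the model.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Step:
    observation: Tuple[float, ...]  # hypothetical flat observation vector
    action: int                     # hypothetical discrete action id


Episode = List[Step]


def build_cross_episodic_context(
    curriculum: Sequence[Episode], current: Episode
) -> List[Tuple[int, Step]]:
    """Concatenate curriculum episodes and the current episode (hypothetical helper).

    Each entry is (episode_index, step). The current episode comes last, so a
    causal model reading the sequence left to right sees earlier episodes first.
    """
    context: List[Tuple[int, Step]] = []
    for ep_idx, episode in enumerate(list(curriculum) + [current]):
        context.extend((ep_idx, step) for step in episode)
    return context


# Toy data: two earlier episodes from the curriculum plus the episode in progress.
early = [Step((0.0,), 1), Step((0.1,), 0)]
later = [Step((0.2,), 2)]
current = [Step((0.3,), 2)]
ctx = build_cross_episodic_context([early, later], current)
```

Keeping the episode index next to each step lets later stages tell which episode a position came from, for example when building attention masks.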

Illustrating CEC’s Effectiveness

The researchers evaluate CEC in two scenarios:

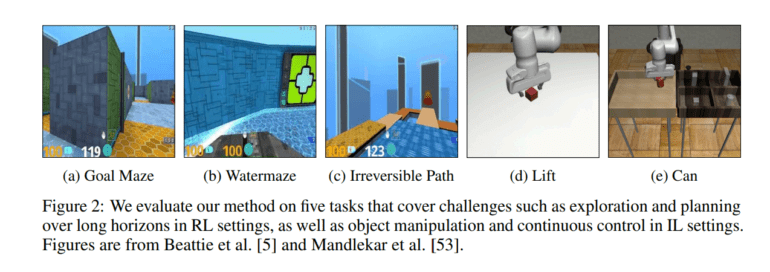

- Multi-task reinforcement learning with discrete control in DeepMind Lab: CEC is applied to a multi-task reinforcement learning problem with discrete actions. The curriculum captures learning progress both within individual environments and across environments of increasing difficulty, so agents gradually master progressively harder tasks.

- Imitation learning from mixed-quality data for continuous control in RoboMimic: The second scenario uses the RoboMimic robot-manipulation benchmark and trains on demonstrations of mixed quality. Here the curriculum orders demonstrations to reflect the progression of demonstrator expertise.

Achieving Remarkable Generalization

According to the researchers, policies trained with CEC perform well and generalize strongly in both scenarios. These results suggest that CEC is a viable way to improve the adaptability and learning efficiency of Transformer agents across diverse settings.

Key Steps in Cross-Episodic Curriculum

The CEC method has two stages:

- Curricular data preparation: Episodes are arranged in a deliberate order so that the sequence exhibits a curriculum pattern. The pattern can take several forms: a policy improving within a single environment, progress through increasingly difficult environments, or demonstrators of increasing expertise.

- Cross-episodic attention model training: The model is trained to predict actions. Unlike a policy that only sees the current episode, it can also attend to previous episodes. By picking up on the improvements and policy changes recorded in the curriculum data, the model learns more efficiently.
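Both stages can be sketched in plain Python under simplifying assumptions. The curriculum key (a training checkpoint number here) and all function names are hypothetical. The cross-episodic mask shown is an ordinary causal mask over the concatenated episodes, which is one straightforward way to let a step attend to earlier episodes; it is contrasted with a mask confined to the current episode.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def order_curriculum(episodes: Sequence[T], key: Callable[[T], float]) -> List[T]:
    """Stage 1 (sketch): sort episodes so the sequence shows a curriculum.

    `key` is a hypothetical score: the checkpoint that produced an episode,
    an environment difficulty level, or a demonstrator proficiency rank.
    """
    return sorted(episodes, key=key)


def episode_ids(lengths: Sequence[int]) -> List[int]:
    """Label every timestep of the concatenated sequence with its episode index."""
    ids: List[int] = []
    for ep, n in enumerate(lengths):
        ids.extend([ep] * n)
    return ids


def cross_episodic_mask(lengths: Sequence[int]) -> List[List[bool]]:
    """Stage 2 (sketch): causal mask over all concatenated episodes.

    mask[i][j] is True when timestep i may attend to timestep j, so each step
    sees every earlier step, including steps from previous episodes.
    """
    n = sum(lengths)
    return [[j <= i for j in range(n)] for i in range(n)]


def single_episode_mask(lengths: Sequence[int]) -> List[List[bool]]:
    """Baseline for comparison: causal attention restricted to the current episode."""
    ids = episode_ids(lengths)
    n = len(ids)
    return [[j <= i and ids[i] == ids[j] for j in range(n)] for i in range(n)]


# Hypothetical (checkpoint, episode length) pairs collected during RL training.
episodes = [("ckpt_3", 2), ("ckpt_1", 3), ("ckpt_2", 1)]
ordered = order_curriculum(episodes, key=lambda e: int(e[0].split("_")[1]))
lengths = [n for _, n in ordered]
cross = cross_episodic_mask(lengths)
local = single_episode_mask(lengths)
```

With this ordering, the last step can attend to all six positions under the cross-episodic mask but only to the two steps of its own episode under the baseline mask. Sorting by a difficulty level or a proficiency rank instead would produce the other two curriculum patterns.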

Visualizing Progress

In the diagrams of these stages, colored triangles denote causal Transformer models, the backbone of the CEC approach. These models take cross-episodic experience as input, and their predicted actions, denoted â, drive decision-making.
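Training the model to predict actions means comparing â with the action recorded in the curriculum data. The sketch below assumes two common loss choices: cross-entropy over discrete action logits (matching the discrete-control DeepMind Lab setting) and mean squared error for continuous actions (matching the RoboMimic setting). These are illustrative assumptions; the authors’ action heads and losses may differ.

```python
import math
from typing import Sequence


def predicted_action(logits: Sequence[float]) -> int:
    """Greedy discrete prediction: a_hat = argmax over action logits."""
    return max(range(len(logits)), key=lambda i: logits[i])


def discrete_action_loss(logits: Sequence[float], target: int) -> float:
    """Assumed loss for discrete control: cross-entropy of softmax(logits) vs target."""
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def continuous_action_loss(a_hat: Sequence[float], a: Sequence[float]) -> float:
    """Assumed loss for continuous control: mean squared error between a_hat and a."""
    if len(a_hat) != len(a):
        raise ValueError("predicted and target actions have different dimensions")
    return sum((p - t) ** 2 for p, t in zip(a_hat, a)) / len(a)


# Uniform logits over 4 actions give a cross-entropy of log(4) for any target.
uniform_loss = discrete_action_loss([0.0, 0.0, 0.0, 0.0], target=2)
mse = continuous_action_loss([0.5, -0.5], [0.0, 0.0])
greedy = predicted_action([0.1, 2.0, -1.0])
```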

Conclusion:

Cross-Episodic Curriculum (CEC) is a notable step toward training capable Transformer agents from limited data. Its ability to improve learning and adaptability with scarce data creates opportunities for the market: as AI systems become more efficient and versatile, businesses across industries could gain better decision-making tools and stronger overall performance. If these gains carry over to other domains, CEC could influence how future AI applications are built.