TL;DR:

- Large language models (LLMs) play a pivotal role in AI applications.

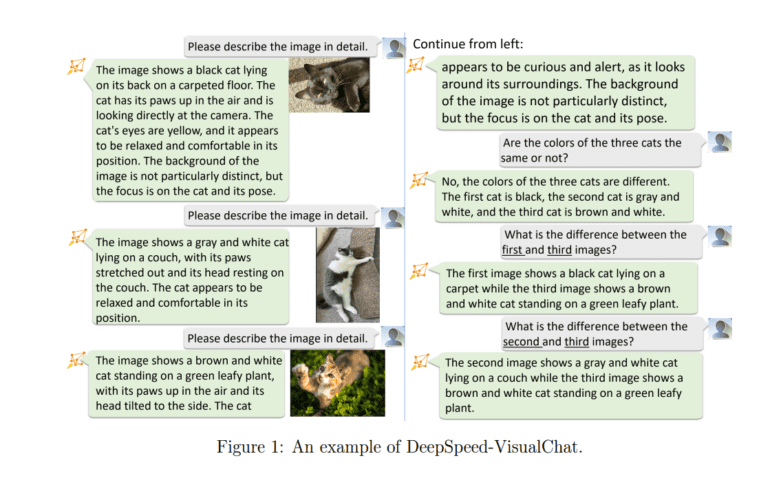

- DeepSpeed-VisualChat enhances LLMs with multi-modal capabilities.

- Outstanding scalability was achieved with a 70-billion parameter model.

- Employs Multi-Modal Causal Attention (MMCA) for improved adaptability.

- Data blending strategies enrich the training environment.

- Integration of DeepSpeed framework for scalability.

- Architecture rooted in MiniGPT4 with separate attention matrices.

- Real-world results demonstrate superior scalability and adaptability.

Main AI News:

In the realm of artificial intelligence, large language models (LLMs) have emerged as indispensable tools, mimicking human language comprehension and generation on an impressive scale. These sophisticated systems find applications in diverse fields, including question-answering, content creation, and interactive conversations. Their prowess is a result of extensive learning processes, where they meticulously dissect vast troves of online data.

The significance of these advanced language models lies in their ability to elevate human-computer interactions, fostering more nuanced and effective communication across various domains. Beyond their traditional text-centric capabilities, ongoing research endeavors aim to imbue them with the capacity to grasp and utilize multi-modal information, encompassing sounds and images. The development of multi-modal capabilities holds immense promise and captivates the AI community.

Modern-day large language models, exemplified by the likes of GPT, have already exhibited remarkable proficiency in a multitude of text-related tasks. They achieve this prowess through additional training techniques such as supervised fine-tuning and reinforcement learning with human guidance. To attain the caliber of expertise demonstrated by human specialists, particularly in domains involving coding, quantitative reasoning, mathematical logic, and engaging in sophisticated conversations like AI chatbots, refining these models through rigorous training is imperative.

The journey towards enabling these models to comprehend and generate content in various formats, including images, sounds, and videos, is in full swing. This endeavor encompasses methods such as feature alignment and model modification. Notably, large vision and language models (LVLMs) represent a significant stride in this direction. However, challenges persist in terms of training and data availability, especially when dealing with complex scenarios like multi-image multi-round dialogues. Furthermore, these models grapple with adaptability and scalability constraints across diverse interaction contexts.

Enter DeepSpeed-VisualChat, a groundbreaking framework pioneered by researchers at Microsoft. This innovative framework bolsters LLMs by infusing them with multi-modal capabilities, showcasing exceptional scalability, even with a staggering language model size of 70 billion parameters. DeepSpeed-VisualChat is purpose-built to facilitate dynamic conversations replete with multi-round exchanges and multi-faceted visual inputs, seamlessly blending text and imagery. To enhance the adaptability and responsiveness of multi-modal models, the framework employs Multi-Modal Causal Attention (MMCA), a technique that independently gauges attention weights across various modalities. The research team has diligently employed data blending strategies to mitigate issues arising from the available datasets, resulting in a rich and diversified training environment.

One of the standout features of DeepSpeed-VisualChat is its unparalleled scalability, made feasible through the thoughtful integration of the DeepSpeed framework. This framework exhibits extraordinary scalability and pushes the boundaries of what can be achieved in the realm of multi-modal dialogue systems. It boasts a 2 billion parameter visual encoder and a 70 billion parameter language decoder from LLaMA-2, a testament to its robust capabilities.

It’s worth noting that DeepSpeed-VisualChat’s architecture is firmly rooted in MiniGPT4. In this structural design, an image is encoded using a pre-trained vision encoder and harmoniously aligned with the output of the text embedding layer’s hidden dimension via a linear layer. These inputs are subsequently fed into language models like LLaMA2, bolstered by the groundbreaking Multi-Modal Causal Attention (MMCA) mechanism. Notably, both the language model and the vision encoder remain static during this process.

In a departure from the conventional Cross Attention (CrA) approach, Multi-Modal Causal Attention (MMCA) takes a distinctive route. It employs separate attention weight matrices for text and image tokens, enabling visual tokens to focus on themselves while permitting text tokens to concentrate on the tokens preceding them.

Real-world outcomes affirm DeepSpeed-VisualChat’s superiority over preceding models. It not only enhances adaptability across diverse interaction scenarios without incurring added complexity or training costs but also scales impressively, accommodating a language model size of up to 70 billion parameters. This monumental achievement serves as a robust foundation for the continual evolution of multi-modal language models, marking a significant stride forward in the AI landscape.

Conclusion:

The introduction of DeepSpeed-VisualChat signifies a substantial leap in multi-modal language model development. Its exceptional scalability and adaptability hold great promise for the AI market, offering improved performance across various interactive applications and opening doors to innovative solutions in human-computer interactions. This development is poised to drive advancements in AI and expand its utility in diverse industries.