TL;DR:

- BOSS (Bootstrapping your own SkillS) is a framework that uses large language models (LLMs) to guide autonomous skill acquisition.

- It executes unfamiliar tasks in new environments more successfully than prior unsupervised skill acquisition and naive bootstrapping methods.

- BOSS combines reinforcement learning with language models, extending skill repertoires for better task execution.

- It autonomously acquires diverse, long-horizon skills with minimal human intervention.

- Experiments in household environments show BOSS outperforming naive bootstrapping and prior unsupervised methods on complex tasks in novel settings.

- It bridges the gap between reinforcement learning and natural language understanding.

- Future research may explore reset-free RL, long-horizon task breakdowns, and expanded unsupervised RL.

Main AI News:

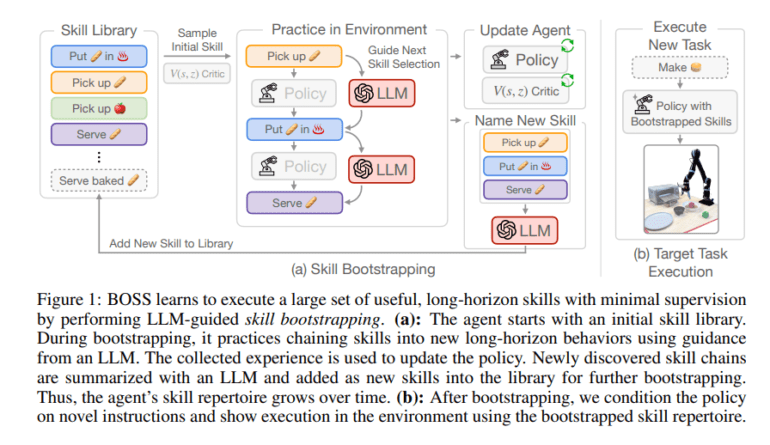

A new framework for autonomous skill acquisition has emerged: BOSS, short for Bootstrapping your own SkillS. It uses large language models (LLMs) to guide an agent as it builds a versatile skill library, enabling the agent to tackle intricate tasks with minimal human intervention. Compared to conventional unsupervised skill acquisition methods and naive bootstrapping approaches, BOSS stands out for its ability to execute unfamiliar tasks in entirely new environments. If that advantage holds up more broadly, it could expand what robotic systems are able to learn on their own.

At its core, BOSS builds on reinforcement learning (RL), which optimizes a policy in a Markov decision process (MDP) to maximize expected return. One line of RL research has focused on pre-training reusable skills for complex tasks. Meanwhile, unsupervised RL, with its emphasis on curiosity, controllability, and diversity, learns skills without human input. What sets BOSS apart is its integration of large language models for skill parameterization and open-loop planning. This LLM guidance extends the skill repertoire by steering exploration and rewarding the completion of skill chains, ultimately leading to higher success rates on long-horizon tasks.
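To make "maximizing expected returns" concrete, here is a minimal sketch of the discounted return that a standard RL agent tries to maximize in expectation. The discount factor `gamma` and the reward values are illustrative assumptions; the article does not say which values BOSS uses.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return r_0 + gamma * r_1 + gamma^2 * r_2 + ... for one episode."""
    total = 0.0
    # Work backwards so each step is a single multiply-and-add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Illustrative episode: a reward of 1.0 at each of three steps, gamma = 0.5 (assumed).
episode_return = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
```

A policy is better, in this framing, when the average of this quantity over many episodes is higher.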

While traditional robot learning relies heavily on supervision, BOSS takes a different approach: it aims to acquire diverse long-horizon skills with minimal human intervention. Through a combination of skill bootstrapping and guidance from large language models, BOSS progressively assembles and combines skills to handle complex tasks. Unsupervised environment interactions further improve the robustness of its policy, helping it solve challenging tasks in entirely new environments.

BOSS operates within a two-phase framework. In the first phase, it acquires a foundational skill set using unsupervised RL objectives. The second phase, known as skill bootstrapping, uses LLMs to guide skill chaining, with rewards based on skill completion. This approach lets agents construct complex behaviors from basic skills. Experimental findings within household environments show LLM-guided bootstrapping outperforming naive bootstrapping and prior unsupervised methods when it comes to executing unfamiliar long-horizon tasks in novel settings.
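The second phase can be pictured with a toy sketch. This is not the BOSS implementation: `propose_next` is a hypothetical stand-in for the LLM, `try_skill` is a hypothetical stand-in for running a skill in the environment, and the rule of one unit of reward per completed skill is an assumption made for illustration.

```python
from typing import Callable, List, Tuple

Skill = Tuple[str, ...]  # a skill is a sequence of primitive skill names


def bootstrap_episode(
    library: List[Skill],
    propose_next: Callable[[List[Skill], List[Skill]], Skill],
    try_skill: Callable[[Skill], bool],
    max_chain: int = 3,
) -> Tuple[int, List[Skill]]:
    """Run one bootstrapping episode; return (reward, updated library)."""
    chain: List[Skill] = []
    reward = 0
    for _ in range(max_chain):
        skill = propose_next(library, chain)  # LLM stand-in picks the next skill
        if not try_skill(skill):              # stop at the first failed skill
            break
        chain.append(skill)
        reward += 1                           # assumed: one reward per completed skill
    new_library = list(library)
    if len(chain) >= 2:
        # Flatten the completed chain into a single longer-horizon skill.
        composed: Skill = tuple(step for s in chain for step in s)
        if composed not in new_library:
            new_library.append(composed)
    return reward, new_library


# Usage with made-up skills and stubs.
primitives: List[Skill] = [("pick up mug",), ("place mug in sink",), ("turn on faucet",)]


def propose_in_order(library: List[Skill], chain: List[Skill]) -> Skill:
    # Hypothetical LLM stub: propose the primitives in a fixed order.
    return library[len(chain)]


def always_succeeds(skill: Skill) -> bool:
    # Hypothetical environment stub: every skill completes.
    return True


reward, grown_library = bootstrap_episode(primitives, propose_in_order, always_succeeds)
```

After this episode the library holds the three primitives plus one composed skill that chains them, which is the sense in which the skill repertoire grows from basic skills.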

In evaluations on extended household tasks in unfamiliar settings, LLM-guided BOSS consistently outperformed previous LLM-based planning and unsupervised exploration methods. The results are reported as inter-quartile means and standard deviations of oracle-normalized returns and success rates. They indicate that BOSS can autonomously acquire a wide range of complex behaviors from basic skills, making it a frontrunner in expert-free robotic skill acquisition.
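For readers unfamiliar with these metrics, the sketch below shows one common way to compute them. Two assumptions are made here that the article does not confirm: oracle normalization is taken to mean dividing each return by the oracle's return on the same task, and the inter-quartile mean drops the lowest and highest quarter of values, rounding the number dropped down. The numbers are made up for illustration and are not BOSS results.

```python
from statistics import mean
from typing import List, Sequence


def oracle_normalize(returns: Sequence[float], oracle_returns: Sequence[float]) -> List[float]:
    """Divide each return by the oracle's return on the same task (assumed definition)."""
    return [r / o for r, o in zip(returns, oracle_returns)]


def interquartile_mean(values: Sequence[float]) -> float:
    """Mean of the middle 50% of values; the trim count is rounded down."""
    ordered = sorted(values)
    cut = len(ordered) // 4
    middle = ordered[cut:len(ordered) - cut]
    return mean(middle)


# Made-up scores: the outlier 100 does not affect the inter-quartile mean.
scores = [1, 2, 3, 4, 5, 6, 7, 100]
iqm = interquartile_mean(scores)
normalized = oracle_normalize([2.0, 3.0], [4.0, 4.0])
```

The inter-quartile mean is less sensitive to a few unusually good or bad runs than a plain mean, which is why it is often preferred when only a handful of random seeds are available.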

BOSS, guided by LLMs, paves the way for autonomous skill acquisition without the need for expert guidance. It excels in executing unfamiliar tasks in entirely new environments, surpassing the limitations of naive bootstrapping and prior unsupervised approaches. Real-world household experiments have provided compelling evidence of BOSS’s effectiveness in acquiring diverse, complex behaviors from basic skills, highlighting its potential for autonomous robotic skill acquisition. Furthermore, BOSS offers promise in bridging the gap between reinforcement learning and natural language understanding, harnessing pre-trained language models to facilitate guided learning.

As we peer into the future, several exciting research directions emerge. These include investigating reset-free RL for autonomous skill learning, proposing innovative long-horizon task breakdowns through BOSS’s skill-chaining approach, and expanding the boundaries of unsupervised RL for low-level skill acquisition. Additionally, enhancing the integration of reinforcement learning with natural language understanding within the BOSS framework holds the potential for groundbreaking advancements. Applying BOSS to diverse domains and evaluating its performance across various environments and task contexts promises to unlock new avenues for exploration and discovery. BOSS is more than a framework; it’s a catalyst for a new era of autonomous skill acquisition.

Conclusion:

BOSS represents a paradigm shift in autonomous skill acquisition, with the potential to transform industries. Its ability to autonomously acquire complex skills in unfamiliar environments positions it as a game-changer. This innovation not only empowers robotic systems but also opens doors to new possibilities in the market, where autonomous agents can adapt and excel in dynamic, uncharted territories. As BOSS continues to evolve and integrate with natural language understanding, it stands as a promising force in reshaping the future of automation and robotics.